No more steps to reproduce. AI where it works best.

Transform debugging with full stack session recordings

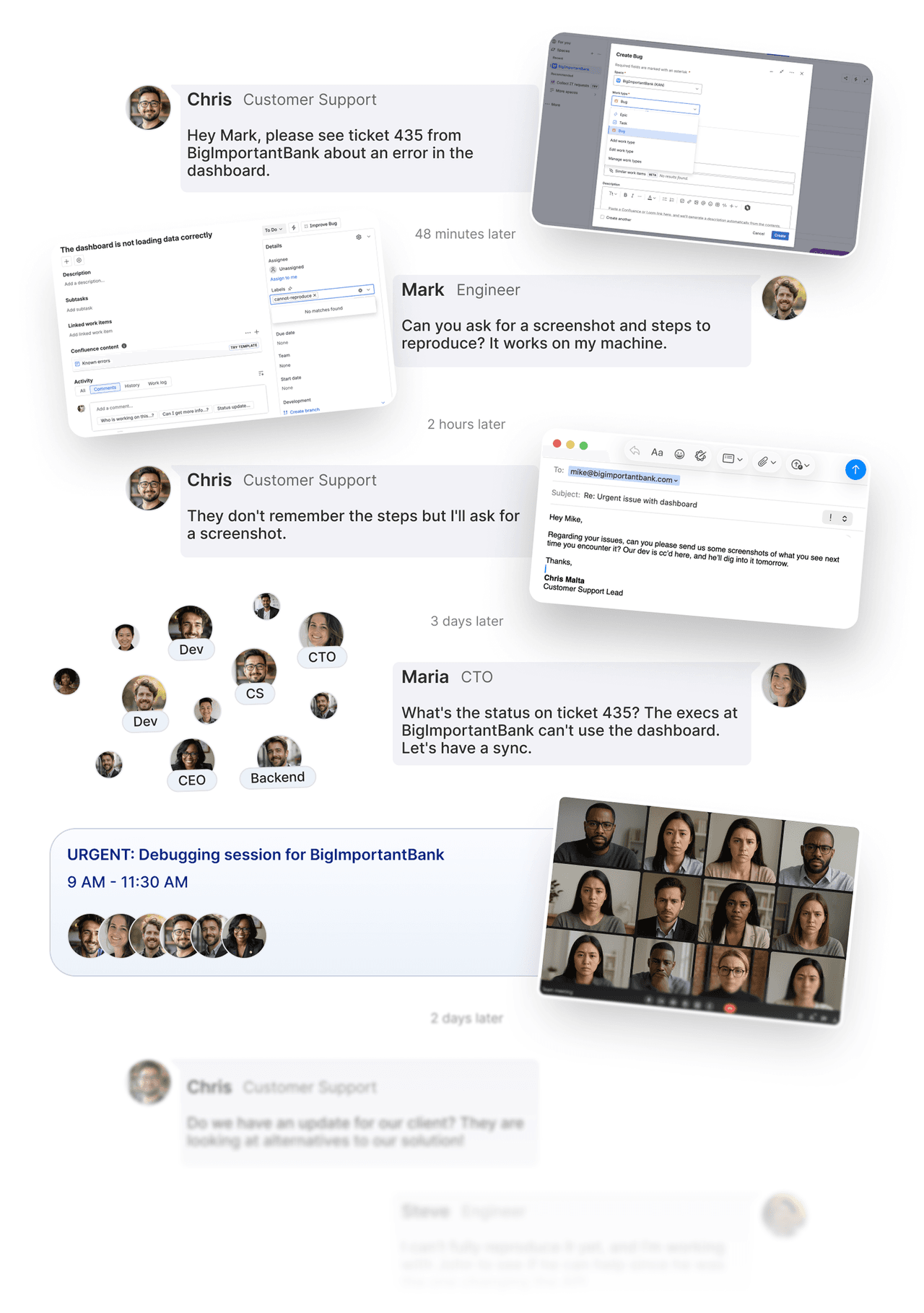

Is this how you fix a bug?

1 bug.

10 days.

5 departments.

No resolution.

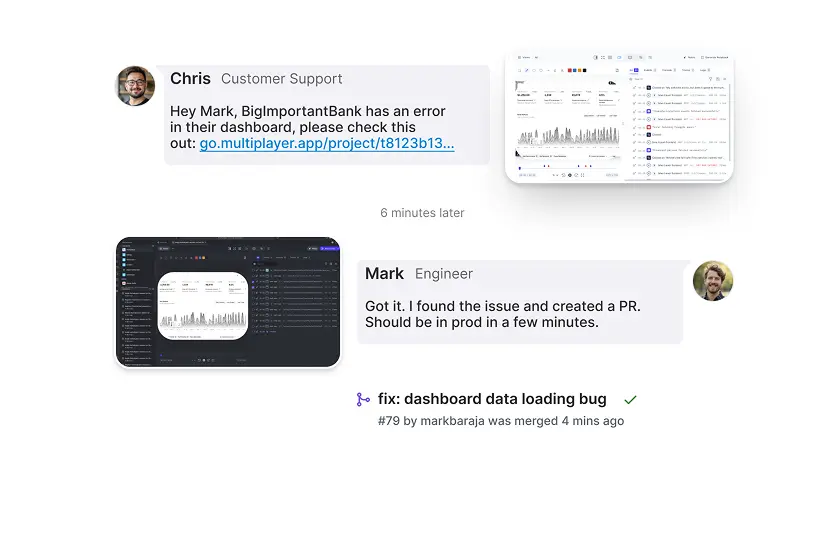

Fix it with Multiplayer

1 bug.

10 minutes.

2 people.

Resolved.

LOVED BY ENGINEERING TEAMS FROM STARTUPS TO ENTERPRISES

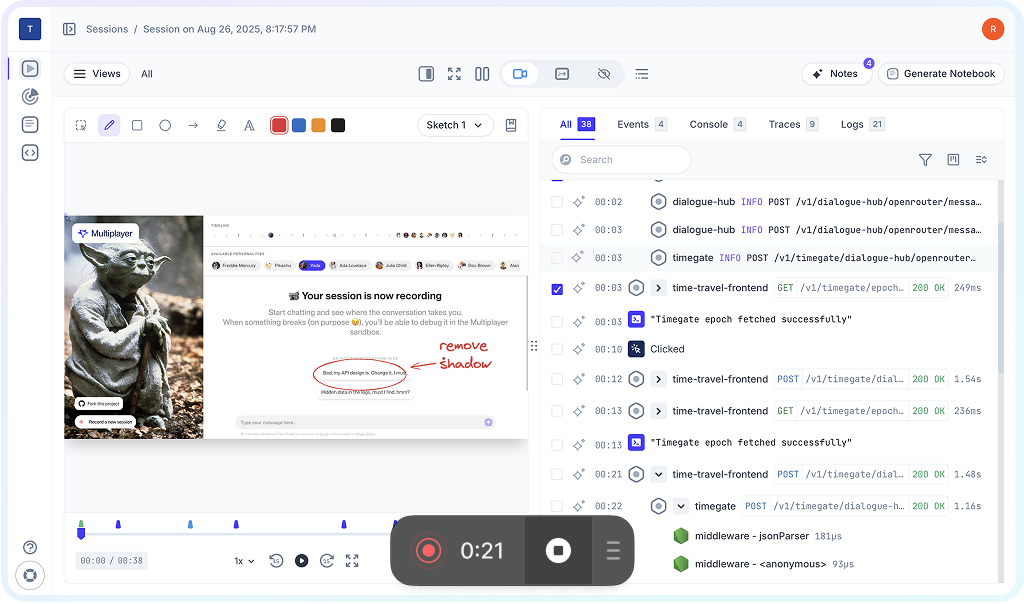

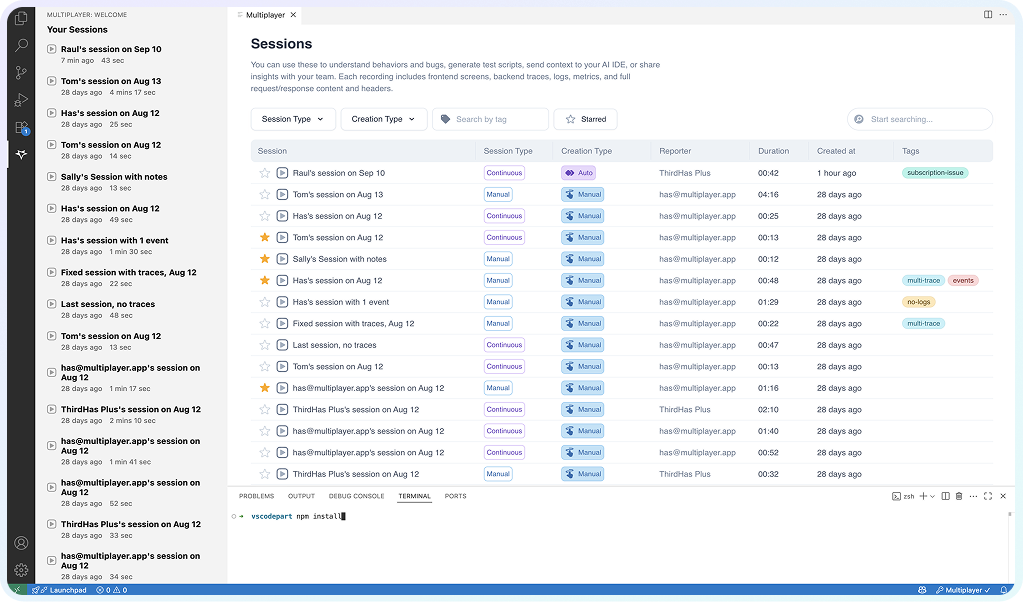

Capture full stack session recordings

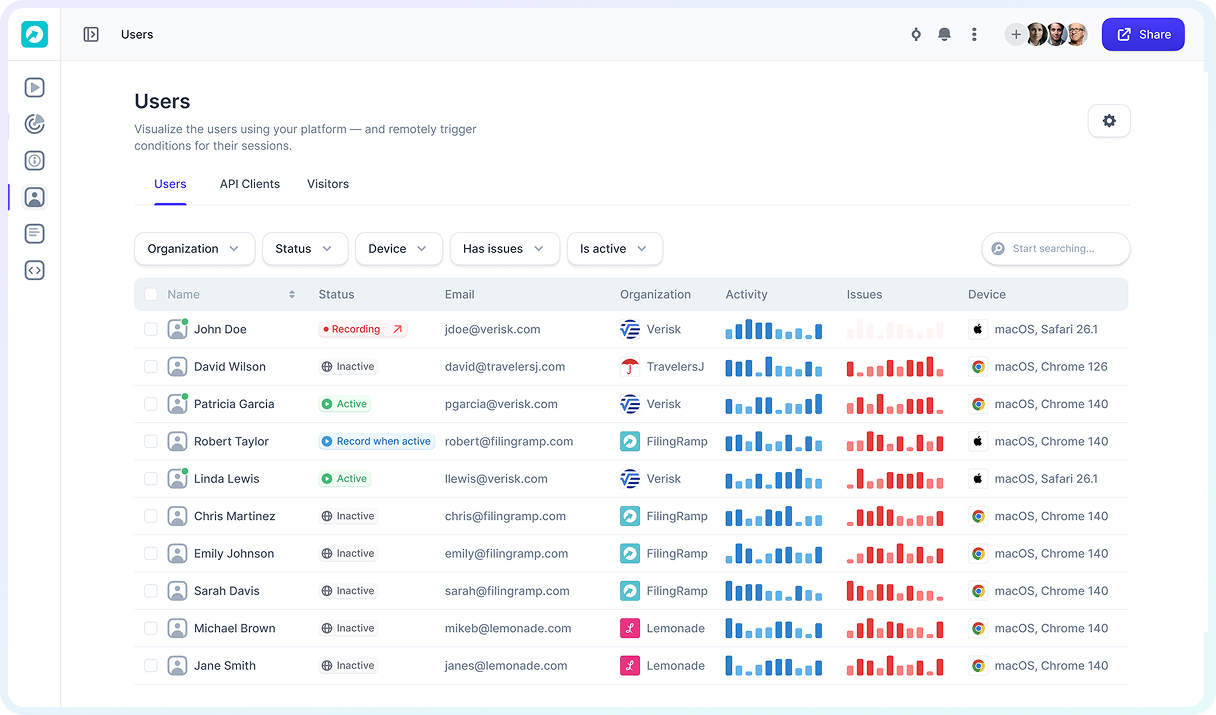

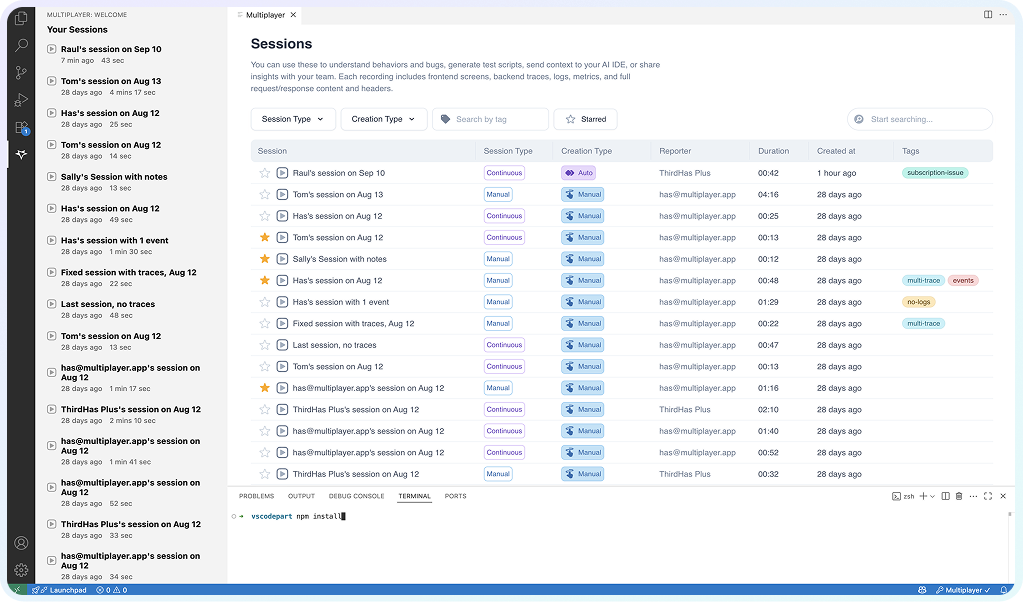

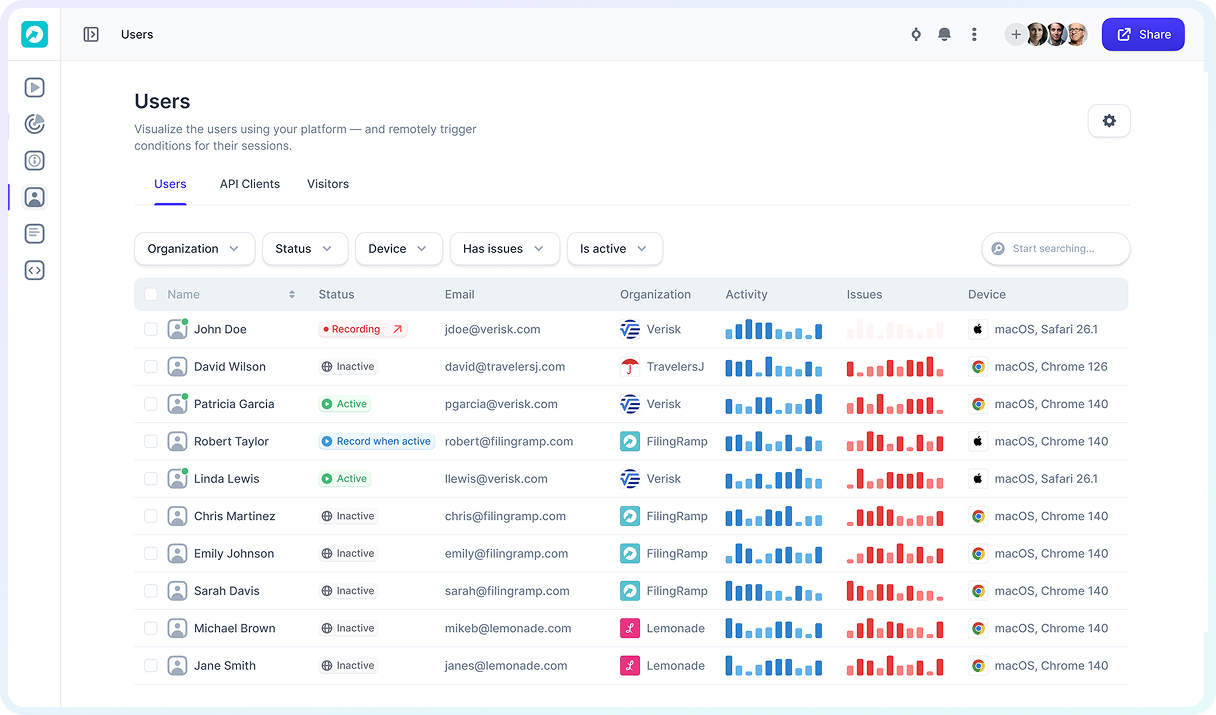

Discover and track user activity and issues

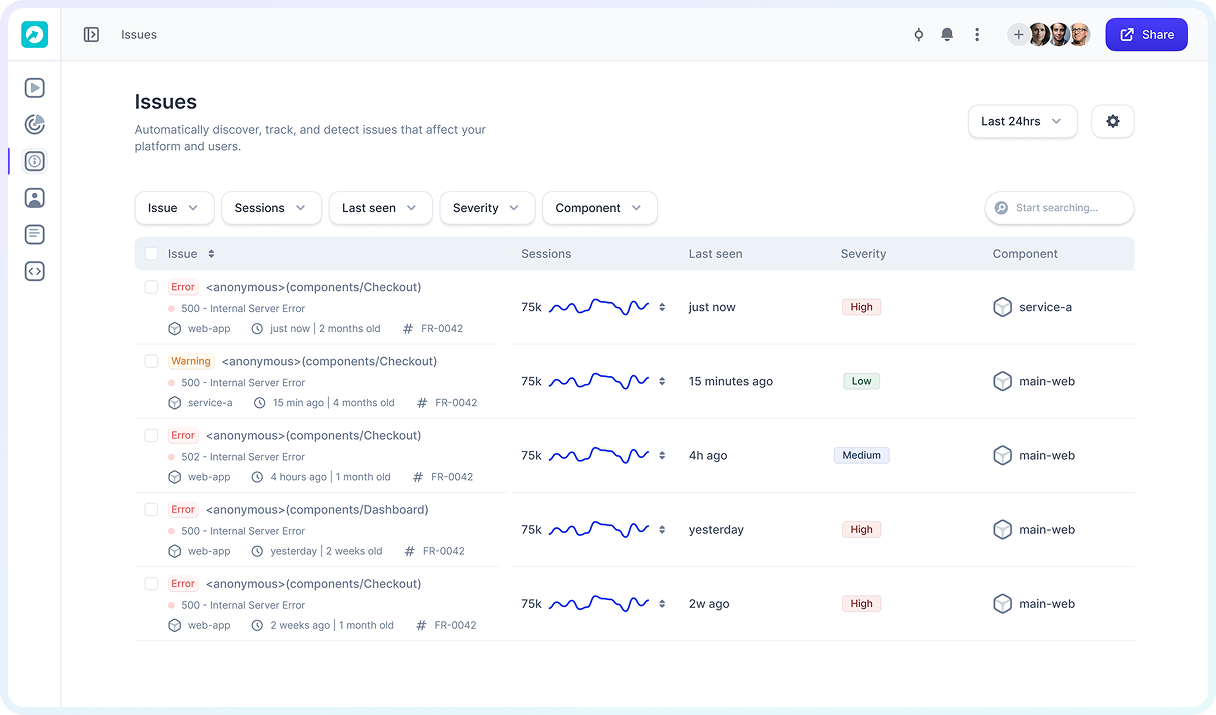

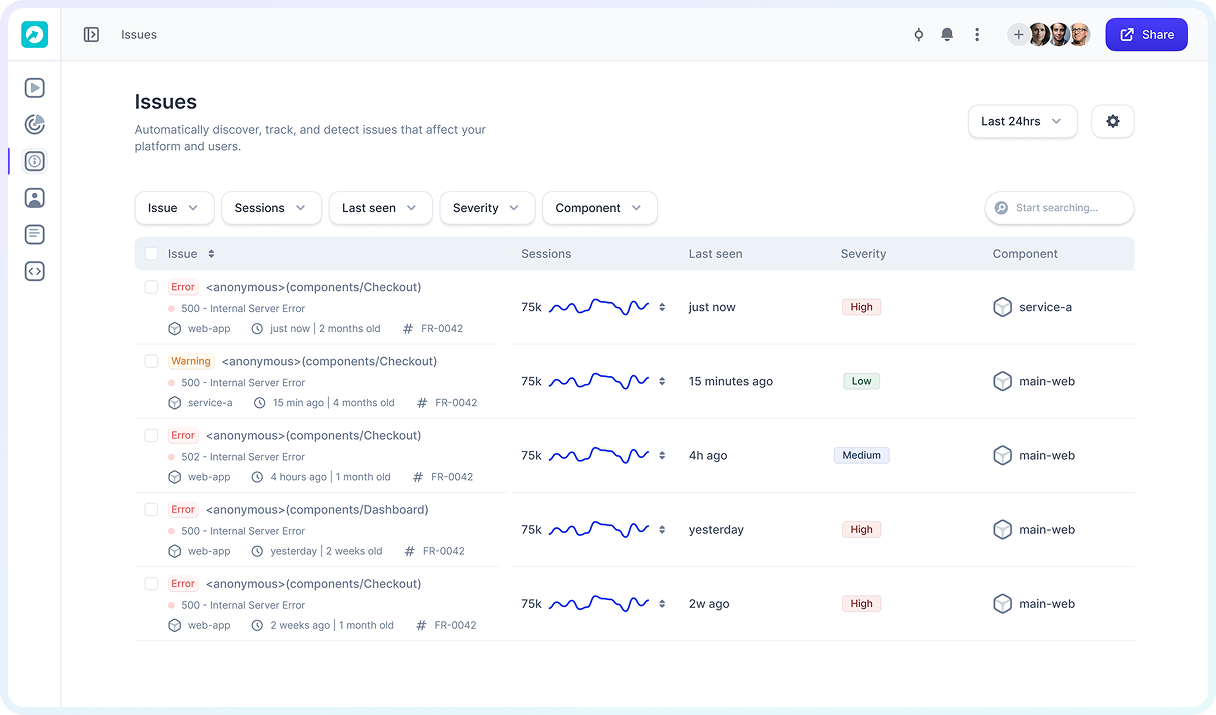

Group user sessions into issues to see customer-impacting problems

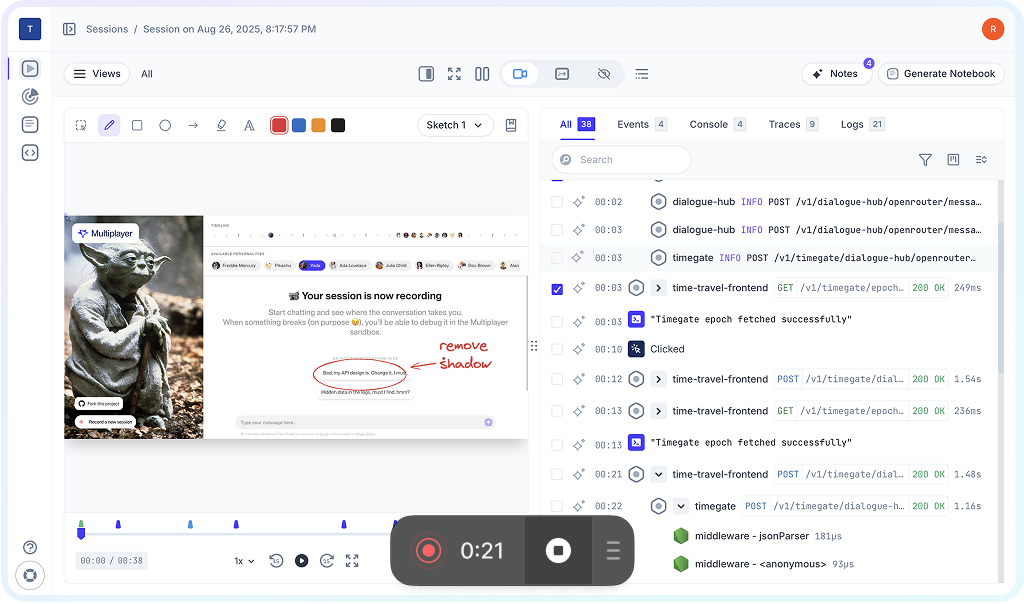

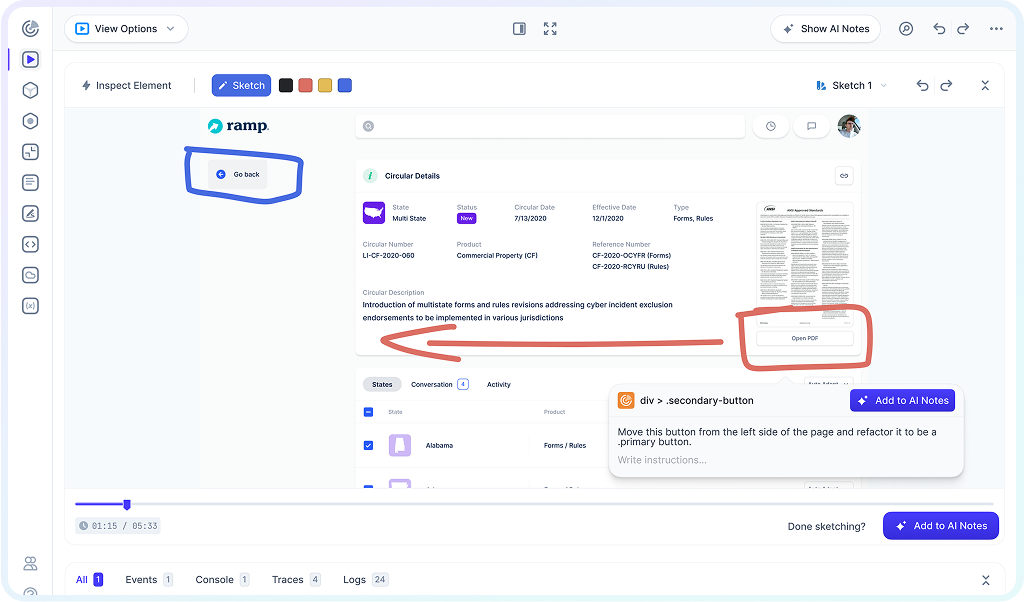

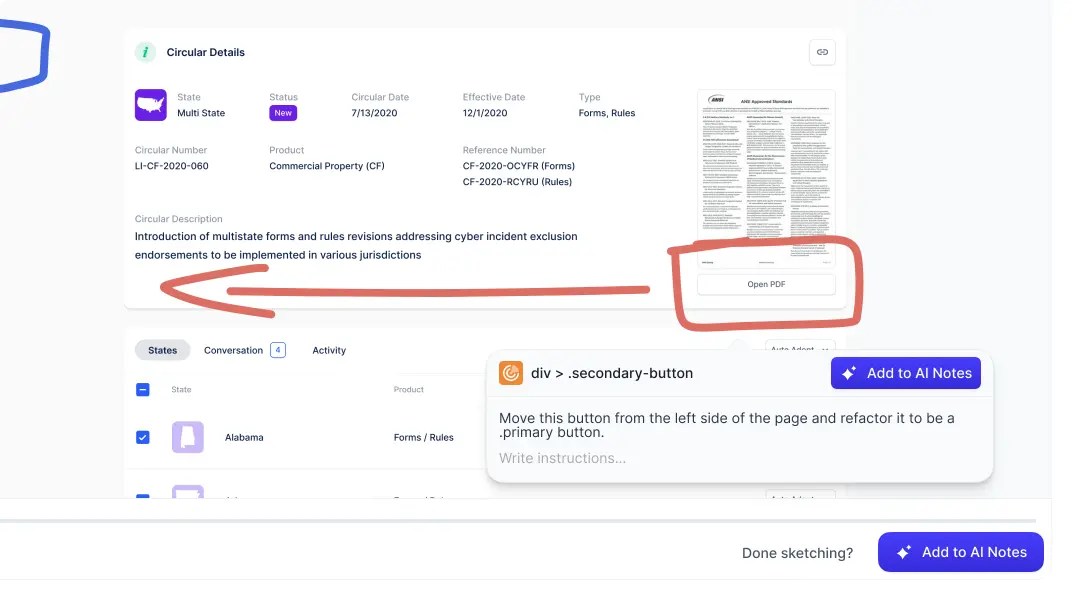

Annotate your replays with sketches and comments

Bring recordings directly into your IDE or AI tool

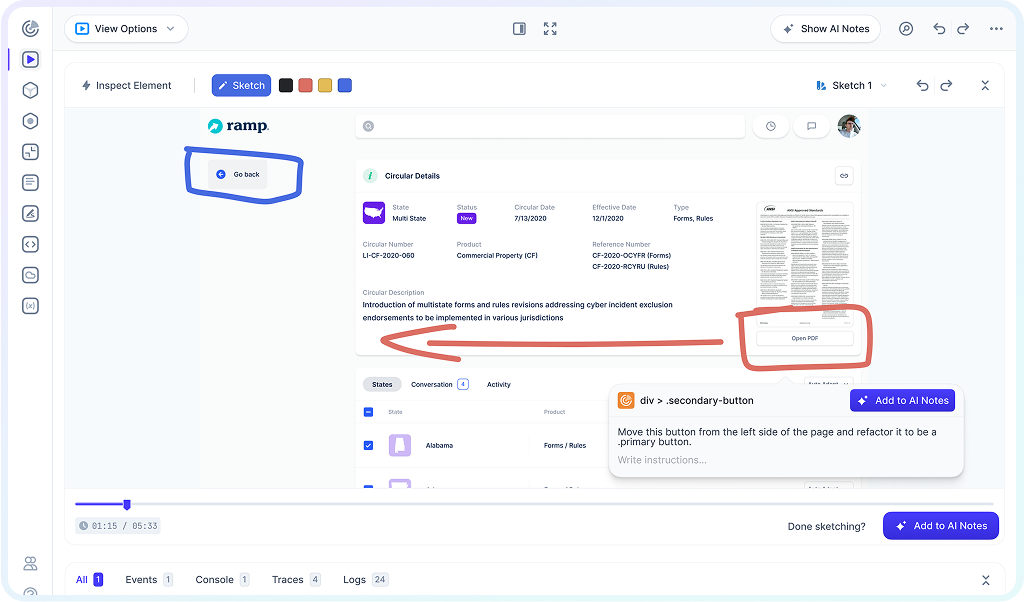

Quickly solve the deeply technical issues that span services, teams, and tools

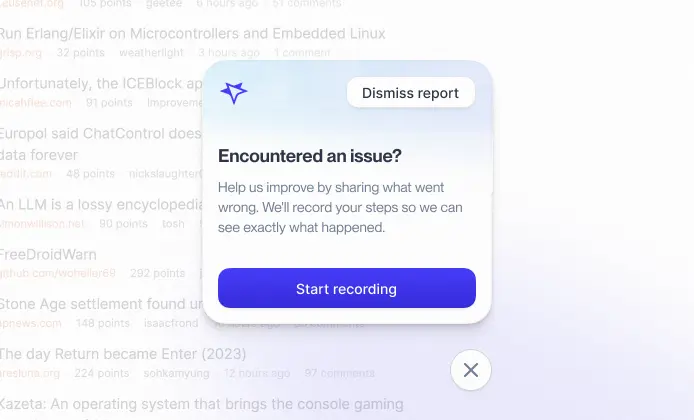

On-demand recording

Capture full stack session recordings on demand with a browser extension, in-app widget, or SDK. Developers, QA, product, support, and even external partners can instantly record and share issues, explore system behavior, and collaborate with sketches and annotations to fix bugs or design new features.

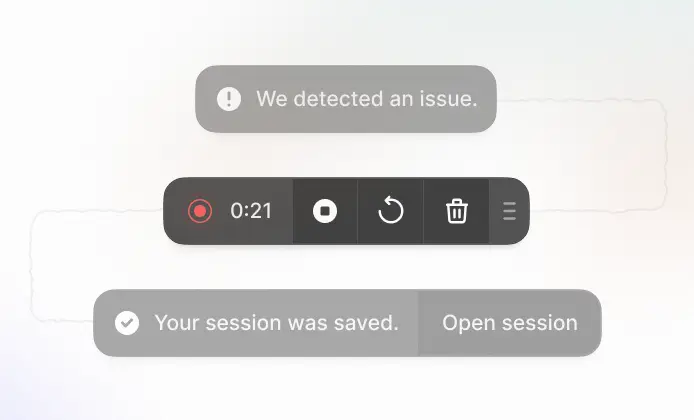

Continuous recording

Record all sessions automatically in the background, so you never have to reproduce an issue. Multiplayer saves recordings the moment bugs or anomalies occur, making it effortless to capture elusive, hard-to-reproduce problems.
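The idea behind continuous recording can be illustrated with a minimal sketch of the general technique, assuming a fixed-size in-memory ring buffer: recent session events stay in memory, and the buffered window is handed off for saving only when an error event arrives. `RollingSessionBuffer`, `recordEvent`, and the event shapes are hypothetical names for illustration. They are not part of the Multiplayer SDK, and this is not a description of how Multiplayer implements continuous recording.

```javascript
// Hypothetical sketch, not the Multiplayer SDK: a bounded in-memory buffer
// that keeps only the most recent session events.
class RollingSessionBuffer {
  constructor(capacity) {
    this.capacity = capacity
    this.events = []
  }

  // Append an event, evicting the oldest one once capacity is exceeded.
  push(event) {
    this.events.push(event)
    if (this.events.length > this.capacity) {
      this.events.shift()
    }
  }

  // Return a copy of the buffered events and empty the buffer.
  flush() {
    const snapshot = this.events.slice()
    this.events = []
    return snapshot
  }
}

// Hypothetical helper: buffer every event, and pass the buffered window
// to `save` only when an error event arrives.
function recordEvent(buffer, event, save) {
  buffer.push(event)
  if (event.type === 'error') {
    save(buffer.flush())
  }
}

// Example: a short (made-up) session that ends in a failed checkout.
const saved = []
const buffer = new RollingSessionBuffer(3)
const session = [
  { type: 'navigate', url: '/cart' },
  { type: 'click', target: '#checkout' },
  { type: 'request', url: '/api/orders', status: 500 },
  { type: 'error', message: 'Order submission failed' },
]
for (const event of session) {
  recordEvent(buffer, event, (snapshot) => saved.push(snapshot))
}
```

Keeping only a bounded window of recent events caps memory use, while flushing on an error means the saved snapshot already contains the steps that led up to the failure.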

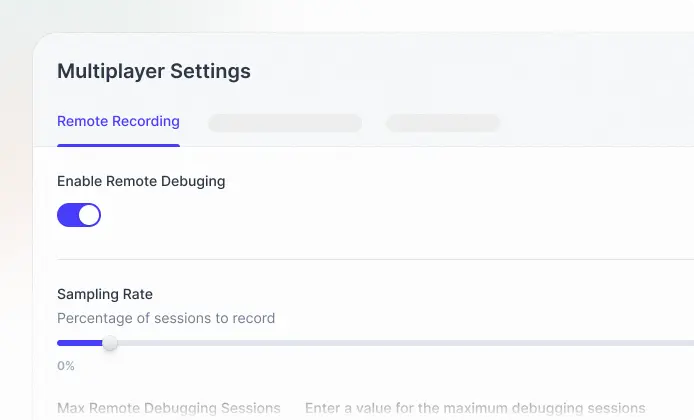

Conditional recording

Silently capture user sessions without any manual steps or bug reports. Multiplayer detects and records issues even when users don't notice or don't report them, giving your team complete context to resolve problems faster and avoid unclear, incomplete tickets.

From bug to fix. From idea to feature. Power all your AI workflows with full stack, annotated session replays.

AI-ready annotated recordings

Turn session recordings into actionable development plans to share with your team or your AI coding assistant. Select traces, API calls, or user interactions and annotate directly on screenshots, whether you're sketching over a button, specifying new API routes, or listing the requirements for a new feature. Every bug fix or feature request comes with precise, context-rich instructions.

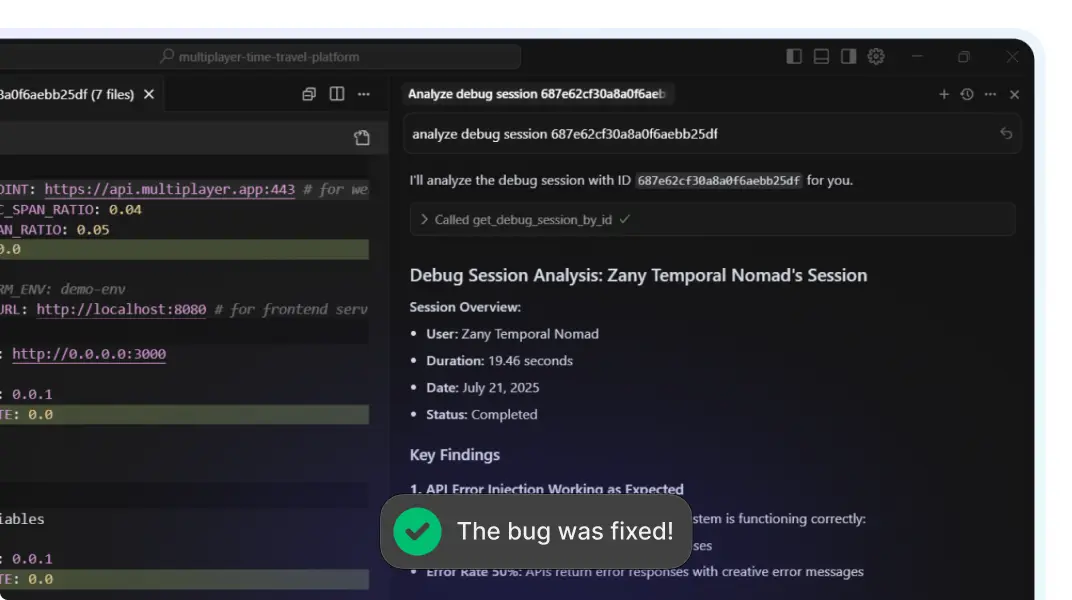

MCP-ready full stack context

Feed your copilots and AI IDEs the complete system context they need: user actions, traces, logs, requests and responses, header data, and team annotations. No missing data, no guesswork. Your AI tools can generate accurate fixes, tests, and features with minimal prompting.

Don't take our word for it.

Take theirs.

Purpose-built for all engineering workflows

Feed the right data to your AI IDE

Build new features with confidence

Capture every issue, from customer apps to internal tools

Understand why your tests are failing

Share complete bug reports, effortlessly

Getting started with Multiplayer is easy.

A simple, performant interface means you can start recording in minutes, and SDKs for your favorite programming languages fit right into your code.

Get started with our client library

Install our Chrome Extension

Install our Visual Studio Code extension

You can install the Multiplayer Session Recorder using npm:

```bash
npm install @multiplayer-app/session-recorder-browser
```

Quick start

```javascript
import SessionRecorder from '@multiplayer-app/session-recorder-browser'

SessionRecorder.init({
  application: 'my-web-app',
  version: '1.0.0',
  environment: 'production',
  // Placeholder: replace with your Multiplayer API key
  apiKey: 'MULTIPLAYER_API_KEY',
})
```

More languages and CLI SDKs can be found in our documentation.

Our solution works with your favorite tools

Connect Multiplayer with your favorite tools to streamline your workflow and improve your team's productivity.

OpenTelemetry

Honeycomb

New Relic

Datadog

SigNoz

GitHub

GitLab

Bitbucket

JavaScript

Node.js

Python

Java

.NET

Go

Ruby

PHP

Rust

Swift

React Native

VS Code

Cursor

Claude & Claude Code

Windsurf IDE

Zed

Codex CLI

Book a call with us and get up and running right away.

Schedule a call with our team to see all of our features and explore whether Multiplayer is right for you.

Book a demo