
Debug Logging: Best Practices & Examples


Traditional step-through debugging is often impractical in applications based on a microservices architecture, because code runs across dozens of services and nodes. In such environments, logs become the lifeline for understanding system behavior and diagnosing issues. Effective debug logging gives engineers a window into the runtime of each service and the interactions between them, allowing them to trace execution flows that span multiple components.

However, poorly structured or overly noisy logs can turn debugging into a "needle in a haystack" problem. Conversely, well-crafted logs can drastically shorten the time to identify root causes.

This article explores eight actionable best practices for debug logging in distributed systems, each aimed at boosting your team's debugging efficiency and system observability.

Summary of key debug logging best practices

Below is a summary of the eight best practices for debug logging in distributed systems, along with their core benefits:

| Best Practice | Description |
|---|---|
| Standardize your log format and levels. | Use a consistent structure (e.g. JSON) and well-defined log levels to enable efficient parsing and filtering of logs across services. |
| Propagate correlation or trace IDs. | Tag every log entry with a unique request or trace ID to stitch together events across microservices for end-to-end visibility. |
| Avoid logging noise and sensitive data. | Control log verbosity to reduce clutter. Never log sensitive information (like passwords or personally identifiable information) to maintain security and compliance. |
| Capture key contextual metadata. | Enrich logs with context such as user IDs, request IDs, feature flags, and environment info to make each log self-contained and useful. |
| Log transitions and system interactions. | Beyond errors, log important system boundaries and state changes (API calls, DB queries, etc.) to reconstruct complete execution flows. |
| Instrument for replayable sessions. | Design logging and instrumentation to capture sequential events for a session, enabling full replay of issues and better post-incident analysis. |
| Automate test generation from failures. | Leverage debug logs from real failures in production user flows to create reproducible test cases. Turn past issues into future safeguards that reflect actual usage patterns. |
| Enable on-demand deep debugging. | Provide mechanisms (feature flags, dynamic log levels) to temporarily increase logging detail for specific transactions or periods without permanent overhead. |

In the sections that follow, we delve into each best practice in detail.

Standardize your log format and levels

Unstructured, free-form log messages vary between developers or services and become challenging to parse programmatically. This inconsistency makes it nearly impossible to build reliable log queries, alerts, or dashboards. By contrast, structured logging, typically using formats like JSON or key-value pairs, makes it easy for both machines and humans to extract meaning from logs.

For example:

    # Unstructured log (difficult to parse)
    Feb 03 12:00:00 OrderService - Order 1234 processed for user 567 (total=$89.99)

    # Structured JSON log (easy to parse)
    {
      "timestamp": "2025-02-03T12:00:00Z",
      "level": "INFO",
      "service": "OrderService",
      "message": "Order processed",
      "orderId": 1234,
      "userId": 567,
      "total": 89.99,
      "status": "SUCCESS"
    }

With JSON logs, developers and tools can filter logs by fields like orderId, userId, or status using simple queries, without relying on brittle regex. This is particularly valuable when debugging issues across distributed services where logs must be aggregated and searched by key fields.

Alongside the format, teams must standardize log levels. Common levels include:

- DEBUG for detailed diagnostics

- INFO for major flow milestones

- WARN for unusual but non-breaking events

- ERROR for actual failures.

Optionally, TRACE or FATAL may also be included.

However, without consistent use, one service might treat a timeout as INFO, while another logs a similar case as ERROR, which can confuse triage and alerting. Here's a suggested baseline guideline:

- DEBUG: Internal state details (disabled in prod by default)

- INFO: Key business events, state changes, and successful transactions

- WARN: Degraded behavior, retries, or recoverable failures

- ERROR: Crashes, failed external calls, unhandled exceptions

Most modern logging libraries (e.g., Log4j, Logback, Winston, or pino) support structured formats and log levels out of the box or via plugins. Enforce this standard across all services using shared logger configurations or central log wrappers. In microservice environments, consider writing a brief logging style guide and validating output during code review.
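As one illustration of a shared logger configuration, the sketch below uses only Python's standard `logging` module to emit one JSON object per line. The names `JsonFormatter` and `get_logger`, and the service name `OrderService`, are assumptions made for this example rather than part of any library; a team would more likely wrap whichever logging library it already uses.

```python
import json
import logging
from datetime import datetime, timezone

# Attributes present on every LogRecord; anything else was passed via `extra=`.
_STANDARD_ATTRS = set(logging.makeLogRecord({}).__dict__) | {"message", "asctime"}


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line."""

    def __init__(self, service: str):
        super().__init__()
        self.service = service  # hypothetical service name, e.g. "OrderService"

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "service": self.service,
            "message": record.getMessage(),
        }
        # Copy custom fields supplied through `extra=` (orderId, userId, ...).
        for key, value in record.__dict__.items():
            if key not in _STANDARD_ATTRS:
                entry[key] = value
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry, default=str)


def get_logger(service: str, level: int = logging.INFO) -> logging.Logger:
    """Shared factory so every service emits the same shape."""
    logger = logging.getLogger(service)
    if not logger.handlers:  # avoid duplicate handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter(service))
        logger.addHandler(handler)
    logger.setLevel(level)
    logger.propagate = False
    return logger


log = get_logger("OrderService")
log.info("Order processed", extra={"orderId": 1234, "userId": 567, "status": "SUCCESS"})
```

Keeping the field names (`timestamp`, `level`, `service`, `message`) in one shared module is what makes cross-service queries reliable; the exact names matter less than using the same ones everywhere.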

Standardization is what turns logging from developer-specific console output into reliable infrastructure data. Without it, logs can't be effectively queried, visualized, or turned into metrics. With it, logs become the backbone of your observability pipeline.

Propagate correlation or trace IDs

In a distributed system, a single user request often touches multiple microservices. Without a common identifier that links these interactions, engineers are left piecing together logs manually, usually by matching timestamps, request paths, or guesswork. This approach doesn't scale. The solution is to generate and propagate a correlation ID or use a Trace ID from distributed tracing tools such as OpenTelemetry.

When a request first enters your system (usually through an API gateway or load balancer), assign it a unique ID, typically a UUID or a trace ID. Ensure this identifier is passed along with the request through HTTP headers like X-Request-ID or traceparent, or in message metadata if using queues or RPC. Every downstream service should extract this ID and include it in every log entry related to the request.

Here's a basic example in pseudocode:

    # Middleware to generate and attach correlation ID
    correlation_id = request.headers.get("X-Request-ID") or generate_uuid()
    logger = setup_logger(context={"correlation_id": correlation_id})

Later, during debugging, engineers can search logs for a specific correlation_id and retrieve a complete picture of how the request traveled through the system, which services it touched, and where it failed.

Correlating logs and traces

Correlation IDs are even more powerful when combined with distributed tracing systems like OpenTelemetry. A Trace ID unifies traces and logs in a single UI, enabling engineers to jump from a log line to a full execution timeline. OpenTelemetry supports injecting TraceId and SpanId into logs, so tools like Grafana, Jaeger, or commercial platforms correlate logs with their traces effortlessly.

To implement this:

- Modify your service edge layer (e.g., API gateway or ingress middleware) to generate or extract a correlation ID.

- Pass the ID downstream via headers or message context.

- Configure loggers to automatically include it, often using Mapped Diagnostic Context (MDC) in Java or similar features in other languages.

It's also important to preserve the correlation ID across asynchronous boundaries. For example, when publishing a message to a queue, include the correlation ID in the message headers. The service that consumes this message then extracts and logs the same ID.
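The steps above can be sketched in Python with `contextvars` and a `logging.Filter`. This is a minimal illustration under stated assumptions: `begin_request` and `outbound_headers` are hypothetical helpers, headers are modeled as a plain dict (real HTTP headers are case-insensitive), and the logger name `checkout` is invented for the example.

```python
import contextvars
import logging
import uuid

# Holds the ID for the request currently being handled
# (isolated per thread and per asyncio task).
correlation_id_var = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Copy the current correlation ID onto every record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id_var.get()
        return True


def begin_request(headers: dict) -> str:
    """Reuse the inbound X-Request-ID header, or mint a new ID at the edge."""
    cid = headers.get("X-Request-ID") or str(uuid.uuid4())
    correlation_id_var.set(cid)
    return cid


def outbound_headers() -> dict:
    """Headers to attach to downstream HTTP calls or queue messages."""
    return {"X-Request-ID": correlation_id_var.get()}


handler = logging.StreamHandler()
handler.setFormatter(
    logging.Formatter("%(asctime)s %(levelname)s [%(correlation_id)s] %(message)s")
)
handler.addFilter(CorrelationIdFilter())
logger = logging.getLogger("checkout")  # hypothetical logger name
logger.addHandler(handler)
logger.setLevel(logging.INFO)

begin_request({"X-Request-ID": "req-42"})
logger.info("Calling PaymentService")  # this line is tagged [req-42]
```

A consumer reading from a queue would call `begin_request` with the message headers before doing any work, so its log lines carry the same ID as the producer's.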

If your system uses frameworks like Spring Cloud Sleuth (Java) or built-in OpenTelemetry instrumentation (Node.js, Go, Python), much of this propagation can be automated. These tools manage the creation, propagation, and recording of trace context seamlessly.

Ultimately, correlation IDs transform distributed logs from disconnected fragments into a coherent narrative. They significantly accelerate incident response, especially when systems become large and interconnected.

Even advanced debugging tools like Multiplayer's full-stack session recordings rely on trace stitching using session or correlation IDs, meaning that by implementing this, you're also laying the groundwork for future tooling integration.

In short, make correlation ID propagation a default in every service. Your observability and your on-call engineers will thank you.

Avoid logging noise and sensitive data

Excessive logging is a common pitfall, especially when teams are troubleshooting or debugging under pressure. Developers may leave verbose DEBUG or TRACE logs scattered across the codebase, logging every function call, loop iteration, or cache hit. While these may seem useful during development, in production, they often result in gigabytes of noise, increased I/O overhead, and higher log processing costs. Worse, they make it harder to identify meaningful events like warnings or errors.

A better approach is to tune the verbosity. Use log levels effectively: reserve DEBUG for deep-dive diagnostics, INFO for high-level events, and ERROR for actionable issues. Keep production logging at INFO or WARN level, and only enable more verbose logs temporarily for specific troubleshooting sessions.

Avoid redundancy

Instead of logging every repetitive event, aggregate or summarize where possible. For instance, instead of logging every retry or loop cycle, log a summary like:

    Processed 2,000 events in 125 ms with 3 warnings.

Another common trap is duplicate or redundant logs. For example, repeatedly logging the same error inside a loop or across layers creates log bloat. If an exception has already been logged in one layer (for example, the DAO), avoid logging it again as it propagates through the service and controller layers unless you're adding meaningful context. Log once, clearly, and at the appropriate level.

Logging sensitive data

Equally critical is ensuring logs don't contain sensitive data. Logs are often stored in external systems, accessed by many roles, or shipped to third-party services. As such, they must be treated as semi-public by default. Never log secrets, passwords, tokens, API keys, PII (personally identifiable information), or payment data. Doing so risks data breaches, compliance violations (e.g., GDPR, HIPAA), and audit failures.

To mitigate this:

- Mask or hash identifying fields like emails or user IDs when needed.

- Use logging libraries that support filtering or redacting known patterns (e.g., credit card numbers).

- Avoid logging raw request/response bodies unless scrubbed or strictly access-controlled.

- Review error messages and stack traces; these may unintentionally leak internal paths, connection strings, or credentials.


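Here's a simple example using a structured logger (Winston) with sanitization logic:

    const { createLogger, format, transports } = require('winston');

    // Custom format that replaces any message mentioning a password.
    const redactPasswords = format((info) => {
      if (typeof info.message === 'string' && info.message.toLowerCase().includes('password')) {
        info.message = '[REDACTED]';
      }
      return info;
    });

    const logger = createLogger({
      format: format.combine(redactPasswords(), format.json()),
      transports: [new transports.Console()],
    });

You can also separate sensitive logs into audit or debug-only channels that are permission-gated. This way, developers can still access important traces when debugging without exposing them broadly.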
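For the pattern-based redaction and hashing mentioned in the mitigation list, a rough Python sketch might look like the following. The regular expressions are illustrative assumptions only (they will both miss some secrets and over-match some harmless numbers), and `scrub`, `hash_identifier`, and `RedactingFilter` are hypothetical names.

```python
import hashlib
import logging
import re

# Illustrative patterns only; real deployments need patterns tuned to their data.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")
SECRET_RE = re.compile(r"(?i)\b(password|token|api[_-]?key)=\S+")


def hash_identifier(value: str) -> str:
    """Replace an identifier with a short, stable digest so entries stay correlatable."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:12]


def scrub(text: str) -> str:
    """Mask key=value secrets and anything that looks like a card number."""
    text = SECRET_RE.sub(lambda m: f"{m.group(1)}=[REDACTED]", text)
    return CARD_RE.sub("[REDACTED_CARD]", text)


class RedactingFilter(logging.Filter):
    """Attach to a handler so every record is scrubbed before it is written."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Format first so values passed as arguments are scrubbed too.
        record.msg = scrub(record.getMessage())
        record.args = ()
        return True
```

Note that an unsalted hash is pseudonymization rather than anonymization: common values such as email addresses can be guessed and re-hashed, so a keyed hash may be more appropriate where that matters.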

Finally, adopt the mindset: "Every log line should earn its place." If a log doesn't contribute to understanding the system's behavior or resolving an issue, consider trimming it. And if a log could compromise security or privacy, don't log it in the first place. Your logs should be as clean and focused as your code.

Capture key contextual metadata

Make your logs self-descriptive by including rich context, such as user IDs, request IDs, feature flags, and deployment metadata, so each entry can be understood in isolation without external lookup.

A log message like "Order processed successfully" is unhelpful on its own. Which order? By whom? In what environment? The power of contextual metadata lies in transforming such vague entries into precise, actionable signals. At a minimum, your logs should include:

- Identifiers: userId, requestId, sessionId, orderId, etc.

- Environment metadata: env, region, cluster, hostname, or containerId

- Feature or config flags: newFeatureEnabled=true, configVersion=3.1.4

- Application-specific dimensions: tenantId, moduleName, or shardId

In distributed systems, including contextual fields in every log entry is essential for filtering, correlation, and root cause analysis.

Here's a simple example:

    {
      "timestamp": "2025-08-02T13:20:00Z",
      "level": "ERROR",
      "message": "Shipping cost calculation failed",
      "orderId": 98765,
      "region": "us-east-1",
      "featureFlag": "newShipping=true",
      "service": "ShippingService",
      "userId": 1045
    }

From just this entry, an engineer could deduce the affected order, region, feature configuration, and who triggered it without digging into a database or external tool. Without these fields, they'd need to manually correlate timestamps or replicate the request, wasting precious time.


How to add context

Most modern logging libraries support ways to inject context automatically:

- In Java, use Mapped Diagnostic Context (MDC) or ThreadLocal to attach values like userId to the logging context.

- In Node.js, libraries like Winston support child loggers and default metadata, which request middleware can use to attach fields to every log entry for that request.

- For Go or Python, context propagation via middleware wrappers is a common pattern.

For example, in Java with SLF4J/MDC:

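    MDC.put("userId", currentUserId);
    log.info("Processed user request");

The userId then appears in every subsequent log entry on that thread (provided the log layout includes MDC fields) until MDC.remove("userId") or MDC.clear() is called.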
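For Python services, a comparable effect can be sketched with the standard library's `logging.LoggerAdapter`. The subclass name `ContextAdapter`, the logger name `shipping`, and the field values are assumptions for illustration.

```python
import logging


class ContextAdapter(logging.LoggerAdapter):
    """Merge the bound context with any per-call `extra` fields."""

    def process(self, msg, kwargs):
        call_extra = kwargs.get("extra") or {}
        kwargs["extra"] = {**self.extra, **call_extra}
        return msg, kwargs


handler = logging.StreamHandler()
handler.setFormatter(
    logging.Formatter("%(levelname)s %(message)s userId=%(userId)s tenantId=%(tenantId)s")
)
base = logging.getLogger("shipping")  # hypothetical logger name
base.addHandler(handler)
base.setLevel(logging.INFO)

# Bind once per request; every call through `log` carries these fields.
log = ContextAdapter(base, {"userId": 1045, "tenantId": "acme-corp"})
log.info("Processed user request")
```

Because this format string requires `userId` and `tenantId`, every record reaching the handler must carry them; a JSON formatter that emits whatever fields are present is usually more forgiving.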

Multi-tenant and modular systems

In systems with multi-tenant architectures, logs should always include a tenantId field. This allows you to isolate logs by customer and accelerate support investigations. Similarly, for modular applications, add a module or component field to group logs by responsibility.

Example:

    {
      "tenantId": "acme-corp",
      "module": "billing",
      ...
    }

This helps teams isolate problems within their subsystem and avoids noisy cross-team filtering.

Benefits for debugging tools

When logs are structured with rich metadata, advanced tooling becomes more effective:

- Tools like Multiplayer's full-stack session recordings can filter or group sessions by userId, featureFlag, or tenantId.

- Log aggregation systems (like Loki, Elasticsearch, or Datadog) support faceted filtering by indexed fields.

- AI-based log analyzers need metadata to correlate events and identify anomalies.

In short, you're not just helping humans, you're giving machines what they need to analyze logs intelligently.

Log transitions and system interactions

Don't limit logging to just errors. Capture the key transitions, external calls, and state changes that define how your system behaves across components.

In a distributed architecture, many of the most critical insights come not from the errors but from the moments in between. These are the transitions, such as when a request enters a service, when it triggers an external dependency, or when a piece of state changes. Logging these events forms a breadcrumb trail that helps engineers retrace the operational flow of a request.

Imagine an order service logs an error: "Failed to update status." Without logs showing that a payment succeeded just prior, it's unclear if the payment even happened. By logging key interactions and transitions, e.g., "Calling PaymentService," "Payment approved," "Updating order status", you gain visibility into both the cause and the effect.

Here are examples of valuable transition logs:

    Received request on POST /checkout - orderId=12345
    Calling PaymentService for orderId=12345
    PaymentService responded 200 OK (elapsed=120ms)
    Order 12345 status changed from PENDING to PAID

These are high-value INFO-level events that help reconstruct the timeline during debugging. When chained with correlation IDs and timestamps, these logs form a rich diagnostic trail.

Log the boundaries

In microservices, log both inbound and outbound events:

- When a service receives a request (including method, endpoint, userId, requestId).

- When it makes a call to another service, database, or external system.

- When it sends or processes a message (e.g., Kafka, SQS).

- When it completes a meaningful internal state transition.

For example, in a service that processes user signups, you might log:

    Received signup request (userId=abc123)
    Validated email (valid=true)
    Inserted user into DB
    Sent welcome email via EmailService
    Signup completed successfully

Without these, if the email fails to send, you won't know whether the signup or DB write succeeded.

Avoid logging every step

While transitions are critical, logging every single iteration or low-level operation becomes noise. For example, in a loop processing 10,000 records, don't log each one. Instead, log a summary:

    Processed 10,000 records in 425ms (success=9,998, failed=2)

This keeps logs clean while still providing insight.
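One way to produce such a summary line is sketched below in Python. The function name `process_batch`, the `handle` callback, and the `id` field on each record are assumptions for the example; per-record failures are kept at DEBUG so they can be switched on when needed.

```python
import logging
import time
from typing import Callable, Iterable

logger = logging.getLogger("batch")  # hypothetical logger name


def process_batch(records: Iterable[dict], handle: Callable[[dict], None]) -> dict:
    """Process every record but emit a single summary line instead of one per item."""
    started = time.perf_counter()
    success = failed = 0
    for record in records:
        try:
            handle(record)
            success += 1
        except Exception:
            failed += 1
            # Individual failures stay at DEBUG so they can be enabled on demand.
            logger.debug("Record failed", exc_info=True, extra={"recordId": record.get("id")})
    elapsed_ms = (time.perf_counter() - started) * 1000
    summary = {"total": success + failed, "success": success, "failed": failed}
    logger.info(
        "Processed %d records in %.0fms (success=%d, failed=%d)",
        summary["total"], elapsed_ms, success, failed,
    )
    return summary
```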

External interfaces are risk points

The most fragile points in a system are often its external interfaces: APIs, third-party services, file systems, and databases. Calls to them should be logged consistently:

- Log outbound request metadata (target system, payload size, operation).

- Log response status (e.g., 200 OK, 500 ERROR, timeout).

- Include durations for performance monitoring.
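The three points above can be combined in a small wrapper around outbound calls. The sketch below is one possible shape, assuming a hypothetical `logged_call` context manager; the target and operation names are placeholders, and the field names passed as keyword arguments must not collide with built-in `LogRecord` attributes such as `name` or `message`.

```python
import logging
import time
from contextlib import contextmanager

logger = logging.getLogger("outbound")  # hypothetical logger name


@contextmanager
def logged_call(target: str, operation: str, **fields):
    """Log the start, outcome, and duration of a call to an external system."""
    outcome = {"status": "OK"}  # caller may overwrite, e.g. with an HTTP status
    failed = False
    started = time.perf_counter()
    logger.info("Calling %s %s", target, operation, extra=fields)
    try:
        yield outcome
    except Exception as exc:
        failed = True
        outcome["status"] = type(exc).__name__
        raise  # logging must not swallow the error
    finally:
        outcome["elapsed_ms"] = round((time.perf_counter() - started) * 1000, 1)
        logger.log(
            logging.ERROR if failed else logging.INFO,
            "%s %s finished status=%s elapsed_ms=%s",
            target, operation, outcome["status"], outcome["elapsed_ms"],
            extra=fields,
        )


with logged_call("PaymentService", "charge", orderId=12345) as call:
    call["status"] = 200  # stand-in for the real client call and its response code
```

Wrapping every outbound call the same way means timeouts and slow responses show up with a consistent shape, which makes them easy to filter during an incident.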

These logs are essential when debugging:

- Latency issues (e.g., DB query spikes)

- Silent failures (e.g., no response from a third-party API)

- External system misbehavior

Trace-friendly, human-friendly

Much of this information overlaps with what tracing systems like OpenTelemetry collect. For example, HTTP client spans, database spans, and message processing spans all mirror what you should log manually. But traces often have limited retention or visibility. Logs last longer and are more accessible during incidents, so consider them a human-friendly complement to your trace pipeline.

Every system tells a story. Transitions are the plot points. If you log them properly, you give your team the ability to replay the story of any transaction, even after it's gone wrong. That visibility is priceless.

Instrument for replayable sessions

Good logs tell you what happened. Replayable logs let you prove it and fix it. Design your logs so you can reconstruct and "replay" a user session or transaction after the fact, step by step, input by input.

Imagine being able to press "play" on a user's journey through your system and observe everything that happened, from API calls and backend logic to the final error. While this level of replay typically requires advanced tooling, the foundation starts with logging. If you capture the right details, with consistent structure, identifiers, and sequencing, your logs can serve as a replayable trail of what happened.

Start with correlation and context

A replayable session begins by tagging every log in the session with a shared correlation ID (covered in the section on propagating correlation or trace IDs). This allows you to isolate logs for a specific request or user journey.

Then, ensure your logs carry enough contextual data to reproduce the behavior. For example, if a payment fails, logging "Payment failed" isn't enough. But if you log:

    {
      "event": "payment_attempt",
      "orderId": 12345,
      "amount": 50.00,
      "paymentMethod": "VISA",
      "status": "FAILED",
      "errorCode": "CARD_DECLINED"
    }

You now have a complete snapshot. This structure lets engineers (or tools) recreate the failed call or feed similar data into a test environment.

Think in terms of event sequences

Reconstructing a scenario requires event order. Use timestamps with millisecond precision, or add step identifiers like:

- stepId=1: Validate user session
- stepId=2: Fetch cart contents
- stepId=3: Create a payment intent

This is especially helpful in systems with parallel execution or asynchronous processing. If multiple services emit logs at overlapping times, having stepId or eventSequence fields ensures clarity.
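Within a single process, a per-session step counter is enough to populate a `stepId` field. The sketch below assumes hypothetical names (`SessionSequencer`, `log_step`) and does not attempt to order events across services, where timestamps or trace context are still needed.

```python
import itertools
import logging
from collections import defaultdict

logger = logging.getLogger("session")  # hypothetical logger name


class SessionSequencer:
    """Hand out an increasing step number per session (single process only)."""

    def __init__(self):
        self._counters = defaultdict(lambda: itertools.count(1))

    def next_step(self, session_id: str) -> int:
        return next(self._counters[session_id])


sequencer = SessionSequencer()


def log_step(session_id: str, event: str, **fields) -> int:
    """Log an event tagged with its session and its position in that session."""
    step = sequencer.next_step(session_id)
    logger.info(event, extra={"sessionId": session_id, "stepId": step, **fields})
    return step
```

Calling `log_step` three times for the same session yields step numbers 1, 2, and 3, so the order of events survives even when timestamps collide. A long-running service would also need to evict counters for finished sessions.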

Also, consider logging inputs and outputs of key operations:

    Input: "Shipping calculation started" with weight=2kg, destination=EU
    Output: "Shipping result" = 12.34 EUR (carrier=DHL)

These pairs allow you to recreate the function behavior later, even if the failure occurred deep in the logic.

Offline reproduction

One goal of replayable logs is to support reproducing issues in local or test environments. If a bug appears in production, you should be able to extract the relevant logs, reconstruct the key inputs, and run a test to validate the fix. This is only possible if:

- Input parameters are logged (scrubbed if sensitive)

- The sequence of actions is preserved

- Conditional logic or feature flags are recorded

Otherwise, developers are stuck guessing, and the reproduction loop slows down significantly.

Some teams go further and implement request journaling: structured storage of all actions in a session (similar to audit logs). This is like having a flight recorder ("black box") for your app: when things go wrong, you have the full history.

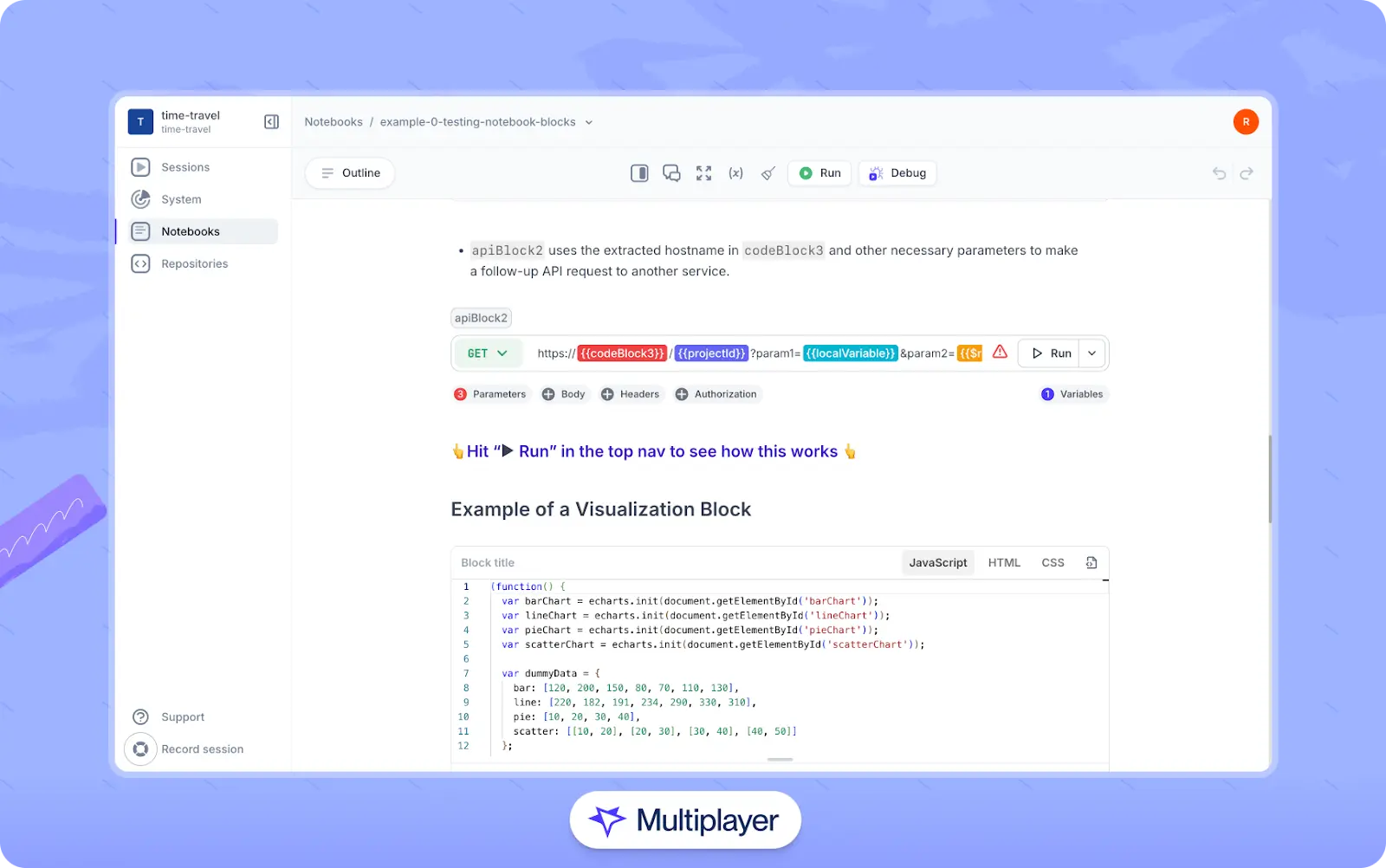

Future-proof for debugging platforms

Modern tools (like Multiplayer) use this exact approach to enable full stack session replay. They combine frontend screens and data, request/response and header content, with logs, metrics, and traces to give a single-pane-of-glass view of what happened in a specific session. If your logs are structured, consistent, and correlated, these tools can ingest them to render step-by-step playbacks and even auto-generate test scripts.

Key takeaways

In short, focus on these core practices to make your logs truly replayable and actionable:

- Use correlation IDs and structured logs to group session activity.

- Include timestamps, sequence numbers, and context in every log.

- Log both what happened and why: decisions, inputs, flags, and output values.

- Avoid excessive verbosity, but ensure you can replay the scenario if needed.

- When possible, align your logs with tooling that supports replay and test generation.

Automate test generation from failures

Treat every production failure as an opportunity to strengthen your test suite. Use your logs to reproduce the issue and write a test that ensures it never happens again.

A core principle in robust software engineering is: once you fix a bug, write a test to catch it next time. Debug logs contain the inputs, context, and error traces needed to recreate failures. When structured and detailed enough, logs allow you to transform a debugging session into a permanent regression test.

Logs as test blueprints

Let's say a null pointer exception occurs in a payment service. A well-logged failure might include:

- The user or session ID

- The payload that triggered the request

- Key parameters (e.g., orderId, currency, paymentMethod)

- Stack trace or error code

With that information, a developer can write a test that simulates the same request. Test engineers can also enhance end-to-end tests for key business flows, using production log data to better simulate the necessary inputs in test environments. This speeds up reproduction, improves test coverage, and helps customer support resolve issues faster.

Example:

    {
      "event": "payment_failed",
      "orderId": "12345",
      "amount": 49.99,
      "currency": "USD",
      "paymentMethod": "VISA",
      "error": "NullPointerException at PaymentHandler.java:88"
    }

From this, you could write a JUnit test that exercises the PaymentHandler logic with the same input and asserts that it no longer crashes.

From logs to tests, automatically

Some teams take it further by writing scripts or internal tools that:

- Search logs for specific exception types

- Extract structured payloads

- Generate test case stubs or mock payloads

For example, if logs follow a format like Error in module X: input={json}, a tool could extract that JSON and create a test fixture. This turns logs into real-world test generators, and your production environment becomes a constant feedback loop into your test coverage.

Modern platforms like Multiplayer support this idea natively. Once their full-stack session recordings record a failure session, they can export that sequence into a replayable notebook or automated test script.

Multiplayer's notebooks

Even if your team doesn't use such a tool yet, adopting the logging habits that make it possible (structured logs, consistent metadata, replayable event order) sets you up to integrate these workflows later.

Make it a habit

Your goal is to close the loop:

- Bug happens

- Logs capture enough info to replay it

- The test is written based on that info

- The bug gets fixed and guarded forever

This builds a regression suite informed by actual incidents, not just hypothetical edge cases. You're encoding real-world resilience into your codebase.

Also consider:

- Scrubbing sensitive data from logged payloads (PII, tokens)

- Generalizing the scenario, if it reveals a broader logic flaw

- Documenting complex failures as "living runbooks" using tests or annotated notebooks

Over time, this practice helps reduce repeat failures and accelerates your fix-and-verify cycle. It also brings QA and engineering closer together, and your system learns from its failures. Better logs lead to better tests, and better tests lead to fewer outages.

Enable on-demand deep debugging

Build mechanisms that let you temporarily increase logging detail for specific components, users, or requests without restarting services or overwhelming your logs.

Running your production system at full debug verbosity all the time is unrealistic because the I/O overhead, log volume, and performance impact make it impractical. It is also costly if an unexpected surge in log data pushes you into a higher cost tier for your monitoring or logging platform.

However, bugs often appear only in live environments. This is where on-demand deep debugging becomes essential: it lets you turn up log detail selectively and temporarily, just enough to diagnose elusive issues.

Adjust logging dynamically

Most modern logging frameworks support runtime log level adjustment:

- Java: via JMX or config reloads in Log4j / Logback

- Python: using logging.getLogger(name).setLevel(...)

- Node.js: with environment variables or admin APIs

You can raise a specific logger or class to DEBUG (e.g., com.mycompany.auth) while leaving the rest of the system at INFO. This is useful when you're investigating a specific subsystem without creating noise elsewhere.

To avoid accidental log bloat:

- Set expiration timers for dynamic changes

- Document usage policies

- Monitor the log volume when enabling debug
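An expiration timer for a dynamic level change can be sketched with Python's standard library. The function name `enable_debug_temporarily` and the audit logger name `logging.audit` are assumptions for illustration; in practice this would sit behind an authenticated admin endpoint.

```python
import logging
import threading


def enable_debug_temporarily(logger_name: str, seconds: float) -> threading.Timer:
    """Raise one logger to DEBUG, then restore its previous level after `seconds`."""
    target = logging.getLogger(logger_name)
    previous = target.level  # NOTSET (0) means "inherit from the parent logger"
    audit = logging.getLogger("logging.audit")  # hypothetical audit logger name

    def restore() -> None:
        target.setLevel(previous)
        audit.warning("DEBUG disabled for %s", logger_name)

    target.setLevel(logging.DEBUG)
    audit.warning("DEBUG enabled for %s for %ss", logger_name, seconds)
    timer = threading.Timer(seconds, restore)
    timer.daemon = True  # do not block process shutdown
    timer.start()
    return timer
```

For example, `enable_debug_temporarily("com.mycompany.auth", 900)` would raise that one logger for 15 minutes while the rest of the system stays at its configured level.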

Enable per-request or per-user debugging

A more precise technique is to enable debug logging for a single request or user session. This is especially powerful when debugging bugs that are hard to reproduce.

Example strategies:

- Add an X-Debug-Mode: true header to API calls

- Use a query parameter like ?debug=true (only for authorized users)

- Pass a debug=true flag in the correlation context

Then, use conditional logic to trigger deeper logging for that request:

    if (requestContext.isDebugEnabled()) {
        logger.debug("Detailed payload: {}", serialize(payload));
    }

You can implement this using:

- Mapped Diagnostic Context (MDC) in Java

- AsyncLocalStorage in Node.js

- Custom middleware in frameworks like Flask, Express, or Spring

This approach ensures you only generate detailed logs for the specific session under investigation, keeping the rest of your logs clean.
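In Python, one way to approximate per-request debugging is a context variable plus a handler-level filter, as in the sketch below. The names `debug_enabled`, `PerRequestDebugFilter`, and `start_request`, and the `caller_is_authorized` parameter, are assumptions; how authorization is decided is left to the surrounding application.

```python
import contextvars
import logging

# Set once per request; isolated per thread and per asyncio task.
debug_enabled = contextvars.ContextVar("debug_enabled", default=False)


class PerRequestDebugFilter(logging.Filter):
    """Drop DEBUG records unless the current request opted in."""

    def filter(self, record: logging.LogRecord) -> bool:
        return record.levelno > logging.DEBUG or debug_enabled.get()


def start_request(headers: dict, caller_is_authorized: bool) -> None:
    """Honor the X-Debug-Mode header only for authorized callers."""
    wants_debug = headers.get("X-Debug-Mode", "").lower() == "true"
    debug_enabled.set(wants_debug and caller_is_authorized)


logger = logging.getLogger("orders")  # hypothetical logger name
logger.setLevel(logging.DEBUG)  # let DEBUG through; the filter decides per request
handler = logging.StreamHandler()
handler.addFilter(PerRequestDebugFilter())
logger.addHandler(handler)
```

The trade-off is that the logger itself now runs at DEBUG, so records are still created for every request and discarded at the handler; expensive payload serialization should remain guarded by an explicit check.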

Feature-flagged verbose logging

You can also deploy logging instrumentation that's controlled by feature flags. This allows you to flip debug mode at runtime, scoped to:

- A specific tenant

- A component or microservice

- A critical feature path

For example, turn on extended logging just for customer_id=acme-corp during their onboarding week.

Many teams use internal admin UIs or configuration services to toggle these flags, sometimes integrated with feature management tools like LaunchDarkly, Unleash, or internal config maps.

Operational tips

- Set timeouts: Automatically disable debug mode after a fixed window (e.g., 15 minutes).

- Track usage: Log when debug mode is enabled/disabled for auditability.

- Guard performance: Wrap heavy logging logic in if (logger.isDebugEnabled()) to avoid unnecessary computation.

- Respect privacy: Even in debug mode, apply the same redaction or filtering policies for sensitive data.

    if (logger.isDebugEnabled()) {
        logger.debug("User input: {}", sanitize(input));
    }

Cloud-native support

Some platforms (e.g., AWS CloudWatch, Azure Monitor, Datadog) let you increase logging dynamically via UI or APIs. For example, you might run a command like:

"Set log level to DEBUG on service-x for the next 5 minutes."

These tools often send configuration updates via agents or sidecars. If your infrastructure supports this, it can save you from redeploying just to gain insight.

Think of on-demand debugging as a surgical logging scalpel. It gives you precision access to internals when you need it without compromising baseline performance. Combined with structured logs, correlation IDs, and contextual metadata, you get a powerful workflow where you can zero in on issues fast, without drowning in log noise. When paired with tools like full-stack session recordings, you gain the ability to record and replay just that one troubled session, exactly what you need, nothing more.

Conclusion

Debug logging is a foundational practice when building reliable software. By adopting the best practices outlined in this article, you're not just improving your logs; you're investing in engineering velocity, system resilience, and on-call sanity. Quality logs reduce the time to identify root causes, accelerate recovery, and provide clarity under pressure.

They also serve as real-world documentation, capturing system behavior, operational flow, and edge-case conditions that developers, QA engineers, and SREs will reference long after an incident passes. Over time, your system becomes not just easier to debug, but easier to trust.

Modern tools can amplify these practices. When your logs are structured, consistent, and rich with metadata, tools like log analytics engines, session replayers, and AI-powered debuggers can extract deeper insights automatically. Whether you're using homegrown tooling or dedicated platforms like Multiplayer, these best practices form the foundation that enables deeper, faster, smarter analysis.

In summary, treat logs as first-class outputs of your system, as important as the features you ship. They will protect your uptime, inform your team, and shorten your path to resolution when production gets noisy.