REST API Testing: Best Practices & Examples

The REST architectural style defines six constraints that guide API design: statelessness, a uniform interface, client-server separation, cacheability, a layered system, and code-on-demand. When you test a REST API, you should verify both that the endpoints work and that the implementation follows these architectural rules.

Each REST constraint leads to specific testing conditions. For example, statelessness means verifying that servers don't store client context between requests. A uniform interface requires testing that HTTP methods behave consistently across all resources. Resource identification demands validation of URI structures and hierarchies.

This guide examines each constraint through a testing perspective, showing you how to validate compliance. We also cover practical testing strategies that help you build constraint validation into your development workflow. Each section includes real API examples and explains how to identify violations.

Summary of REST API testing best practices

| Best practice | Description |
|---|---|
| Design tests for constraint compliance | Ensure your test suite covers the six REST architectural constraints, such as statelessness and cacheability, to surface structural issues that functional tests might miss. |
| Prioritize architectural violations over edge cases | Focus testing on scenarios that break REST principles, such as improper state handling or inconsistent cache behavior. |
| Align tests with documentation | Use your API's actual behavior to drive test scenarios rather than relying on static documentation. |
| Maintain test-code alignment through discovery | Continuously scan running systems to detect changes in endpoints, parameters, and schemas to keep tests accurate and up to date. |
| Automate constraint validation | Build automated tests that run with every code change and establish monitoring to track constraint compliance. |
| Establish constraint-focused debugging workflows | When investigating REST API issues, start by identifying which architectural constraint might be violated before diving into functional code. |
| Correlate distributed traces with constraint violations | Use distributed tracing to correlate requests and identify where constraints break down in complex interactions. |
| Build executable validation scenarios | Create interactive tests that combine multiple API calls and let team members experiment with different parameters and boundary conditions to show how REST principles work in practice. |
| Share knowledge across teams | Provide executable examples that illustrate how REST APIs behave across real scenarios. Use collaborative tools to make this knowledge accessible to developers, QA, and operations. |

Overview of testing REST API constraints

REST API testing should include requirements that evaluate the following six architectural constraints:

- Statelessness requires that each request contain all the information required to process it without relying on server-side sessions.

- A uniform interface demands consistent HTTP method behavior across all resources.

- Cacheability requires responses to declare, through headers, whether and for how long clients and intermediaries such as proxy servers may store them.

- Client-server separation keeps user interface concerns separate from data storage concerns.

- A layered system supports intermediary servers, like load balancers, between the client and server.

- Code-on-demand is an optional constraint that lets servers send executable code to clients when needed.

When testing a REST API, you send HTTP requests to endpoints to validate both functional behavior and adherence to the constraints above. While functional testing involves verifying that individual endpoints behave as expected, constraint-based testing focuses on the correct use of HTTP methods (e.g., making sure GET doesn't modify data), consistent and predictable resource URIs (uniform interface), proper cache-control headers (cacheability), and ensuring that requests are self-contained (statelessness). In more advanced cases, you may also test support for layered intermediaries or optional code-on-demand features.

In the following sections, we examine each REST API constraint in more detail.

REST API architectural constraints

The six REST constraints work together to create predictable API behavior. Each constraint addresses specific architectural requirements that are important for REST APIs to remain predictable, reliable, and scalable.

Statelessness

Statelessness means each request contains all the information needed for processing, without server-side sessions. The server treats every request independently, relying only on data provided in query parameters, headers, request body, or URI paths. When servers follow statelessness, requests work in any order without depending on previous interactions. Statelessness violations appear when identical requests lead to different results based on previously stored data or when the request order changes.

Session independence testing

Testing statelessness includes detecting when servers depend on data from previous interactions.

For example, you can check session independence using the JSONPlaceholder API. Each request for a post works independently, regardless of what you've requested before. The server doesn't remember that you previously looked at post 1 when you request post 2.

The following tests validate session independence by making multiple requests to the same /posts/ endpoint and comparing responses:

- The first test verifies that identical requests return identical data, catching whether servers store client-specific session data.

- The second test sends requests in different orders to detect sequence dependency.

Provided the underlying resource has not changed between calls, any variation in responses for identical requests suggests that the server is maintaining state between requests, violating the constraint.

// Test session independence - identical requests should return identical responses

test('should maintain session independence across requests', async () => {

const response1 = await fetch('https://jsonplaceholder.typicode.com/posts/1');

const response2 = await fetch('https://jsonplaceholder.typicode.com/posts/1');

const data1 = await response1.json();

const data2 = await response2.json();

expect(data1).toEqual(data2);

});

// Test request order independence

test('should not depend on request sequence', async () => {

// Send requests in different order

const response2First = await fetch('https://jsonplaceholder.typicode.com/posts/2');

const response1 = await fetch('https://jsonplaceholder.typicode.com/posts/1');

const response2Second = await fetch('https://jsonplaceholder.typicode.com/posts/2');

const data2First = await response2First.json();

const data2Second = await response2Second.json();

expect(data2First).toEqual(data2Second);

});

Request context validation

Building on session independence, state management ensures consistent behavior across all operations: Identical requests should produce identical responses regardless of timing or sequence. Statelessness violations occur when update operations depend on server-stored context rather than including complete information in the request.

The following cURL examples show proper stateless update operations. The PUT request includes the complete resource representation of post 1, telling the server exactly what the final resource state should be without knowing the current state. The PATCH request explicitly specifies the title field to modify, providing complete context about the intended change.

# PUT requires complete resource representation

curl -X PUT https://jsonplaceholder.typicode.com/posts/1 \

-H "Content-Type: application/json" \

-d '{

"id": 1,

"title": "Updated Post Title",

"body": "This is the complete updated post content",

"userId": 1

}'

# PATCH specifies exactly which fields to modify

curl -X PATCH https://jsonplaceholder.typicode.com/posts/1 \

-H "Content-Type: application/json" \

-d '{"title": "Partially Updated Title"}'

Statelessness improves scalability and resilience because any server can handle any request without shared session state, but it increases bandwidth usage because clients must send complete context with every request. This trade-off pays off as your system scales across multiple servers and geographic regions.

To test for statelessness, simulate repeatable requests under varying conditions (e.g., different sequences, no cookies, no prior authentication state) and verify consistent outcomes. Update operations are especially telling: they should succeed based solely on the request itself (URI, headers, and body), not on any implicit server state.
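The comparison step in such tests can be sketched as a small helper. This is a minimal illustration, not part of any library: the function names and the idea of a `volatileKeys` list (fields such as a request ID that legitimately differ between calls) are assumptions made for this example.

```javascript
// Hypothetical helper: rebuild a JSON value with sorted object keys and
// without volatile fields, so structurally equal bodies serialize identically.
function canonicalize(value, volatileKeys = []) {
  if (Array.isArray(value)) {
    return value.map((item) => canonicalize(item, volatileKeys));
  }
  if (value !== null && typeof value === 'object') {
    const result = {};
    for (const key of Object.keys(value).sort()) {
      if (!volatileKeys.includes(key)) {
        result[key] = canonicalize(value[key], volatileKeys);
      }
    }
    return result;
  }
  return value;
}

// Returns true when every recorded body matches the first one after
// volatile fields (assumed names, e.g. "requestId") are removed.
function bodiesAreConsistent(bodies, volatileKeys = []) {
  const fingerprints = bodies.map((body) =>
    JSON.stringify(canonicalize(body, volatileKeys))
  );
  return fingerprints.every((fp) => fp === fingerprints[0]);
}
```

Collect the parsed bodies from each run (different orderings, with and without cookies) and pass them to `bodiesAreConsistent`; a `false` result points at a request whose outcome depended on something outside the request.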

Uniform interface: HTTP methods and status code validation

The uniform interface constraint ensures that all resources in your API follow consistent patterns. This consistency comes from four key areas: how resources are identified, how clients manipulate them, how messages describe themselves, and how clients navigate between related resources.

Resource identification through URIs

Every resource needs a unique URI that identifies what it represents. Well-designed URIs follow predictable patterns that help clients understand your API structure.

For example, JSONPlaceholder uses predictable resource identification patterns such as /users/1, /posts/1, and /posts/1/comments. Users, posts, and comments each have a URI structure that immediately tells you what resource you're accessing. Consistent identification is only part of the uniform interface, so the following Jest tests validate that HTTP methods behave consistently according to their semantic definitions across all API resources:

- The first test verifies that GET is a safe operation by confirming that the resource is unchanged after multiple GET calls.

- The second test confirms that POST requests return the correct 201 Created status code when successfully creating new resources.

- The third test validates PUT request idempotency by sending identical PUT requests twice and verifying that they produce the same response.

// Test GET requests never modify data

test('GET requests should not modify server state', async () => {

// Get initial state

const initialResponse = await fetch('https://jsonplaceholder.typicode.com/posts/1');

const initialData = await initialResponse.json();

// Make multiple GET requests

await fetch('https://jsonplaceholder.typicode.com/posts/1');

await fetch('https://jsonplaceholder.typicode.com/posts/1');

// Verify state unchanged

const finalResponse = await fetch('https://jsonplaceholder.typicode.com/posts/1');

const finalData = await finalResponse.json();

expect(finalData).toEqual(initialData);

});

// Test POST returns 201 for resource creation

test('POST should return 201 Created for new resources', async () => {

const response = await fetch('https://jsonplaceholder.typicode.com/posts', {

method: 'POST',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify({ title: 'Test Post', body: 'Test content', userId: 1 })

});

expect(response.status).toBe(201);

});

// Test PUT idempotency

test('PUT requests should be idempotent', async () => {

const putData = { id: 1, title: 'Updated', body: 'Updated content', userId: 1 };

const response1 = await fetch('https://jsonplaceholder.typicode.com/posts/1', {

method: 'PUT',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify(putData)

});

const response2 = await fetch('https://jsonplaceholder.typicode.com/posts/1', {

method: 'PUT',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify(putData)

});

// Identical PUT requests should produce the same status code and body

expect(response2.status).toBe(response1.status);

expect(await response2.json()).toEqual(await response1.json());

});

Uniform interface violations appear when HTTP methods don't behave consistently across all resources. For example, GET requests that modify data, POST requests that don't return 201 for creation, or PUT requests that aren't idempotent break client expectations and API contracts.
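The method rules behind these tests can be captured as data so that every resource is checked against the same expectations. The safe and idempotent flags below follow the HTTP semantics defined in RFC 9110; the shape of the `observation` object and the function name are assumptions made for this sketch.

```javascript
// Safety and idempotency of common HTTP methods (per RFC 9110).
const METHOD_SEMANTICS = {
  GET: { safe: true, idempotent: true },
  HEAD: { safe: true, idempotent: true },
  OPTIONS: { safe: true, idempotent: true },
  PUT: { safe: false, idempotent: true },
  DELETE: { safe: false, idempotent: true },
  POST: { safe: false, idempotent: false },
  PATCH: { safe: false, idempotent: false },
};

// observation is a hypothetical record produced by a test run:
//   stateChangedAfterOneCall: did the resource differ after the first call?
//   stateChangedAfterRepeat:  did a second identical call change it again?
function findSemanticViolations(method, observation) {
  const semantics = METHOD_SEMANTICS[method.toUpperCase()];
  if (!semantics) return [`unknown method: ${method}`];
  const violations = [];
  if (semantics.safe && observation.stateChangedAfterOneCall) {
    violations.push(`${method} is safe but modified server state`);
  }
  if (semantics.idempotent && observation.stateChangedAfterRepeat) {
    violations.push(`${method} is idempotent but a repeat call changed state again`);
  }
  return violations;
}
```

Keeping the rules in one table means a new resource only needs its observations recorded; the expectations themselves stay uniform, which is the point of the constraint.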

HATEOAS hypermedia controls testing

Hypermedia as the Engine of Application State (HATEOAS) is the part of the uniform interface constraint that embeds navigational links in responses, allowing clients to discover available actions dynamically rather than relying on hardcoded URIs.

For example, GitHub's REST API exposes hypermedia controls that let clients navigate between related resources:

# Get GitHub user info

curl -H "Accept: application/vnd.github.v3+json" https://api.github.com/users/octocat

The response includes fields such as repos_url, followers_url, and following_url, which each provide direct links to related resources:

{

"login": "octocat",

"id": 1,

"url": "https://api.github.com/users/octocat",

"repos_url": "https://api.github.com/users/octocat/repos",

"followers_url": "https://api.github.com/users/octocat/followers",

"following_url": "https://api.github.com/users/octocat/following{/other_user}"

}

To test HATEOAS, verify that navigational links are present in responses, accurately represent relationships between resources, and can be followed to retrieve valid data.
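The first two checks can run without any network access. The sketch below assumes the GitHub-style convention seen in the sample response, where link fields are named `url` or end in `_url`; the function names are illustrative. Following each link with a real request is left to the test that calls these helpers.

```javascript
// Collect every field named "url" or ending in "_url" and strip URI template
// expressions such as "{/other_user}" so the link can be requested directly.
function extractLinks(resource) {
  const links = {};
  for (const [key, value] of Object.entries(resource)) {
    if ((key === 'url' || key.endsWith('_url')) && typeof value === 'string') {
      links[key] = value.replace(/\{[^}]*\}/g, '');
    }
  }
  return links;
}

// Return the names of links that are not absolute http(s) URLs.
function findInvalidLinks(links) {
  return Object.keys(links).filter((name) => {
    try {
      const parsed = new URL(links[name]);
      return parsed.protocol !== 'https:' && parsed.protocol !== 'http:';
    } catch {
      return true; // not parseable as an absolute URL
    }
  });
}
```

A test can then assert that `extractLinks(body)` contains the expected relations, that `findInvalidLinks` returns an empty array, and finally fetch each remaining URL to confirm it resolves.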

Cacheability testing

Caching allows intermediary servers like proxies and CDNs to store responses temporarily to reduce server load and improve response times. When your API supports caching correctly, repeated requests for the same resource can be served from the cache instead of hitting your origin server. This constraint requires specific headers and validations that tell caches when content is fresh and when it needs updating.

ETag validation and conditional requests

ETags provide a fingerprint for each resource version, allowing caches and clients to check if their stored copies match the current version. When content changes, the ETag changes too, indicating stale cached versions.

ETag violations occur when servers don't generate consistent ETags for resource versions or when conditional requests don't work properly. Test ETag functionality by validating that ETags change with content updates and that conditional requests return appropriate 304 Not Modified responses.
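To know which status a conditional request should return, a test needs the matching rule itself. The sketch below implements the weak comparison that HTTP uses for If-None-Match; the function names are assumptions, and splitting the header on commas is a simplification that assumes entity-tags do not themselves contain commas.

```javascript
// Strip the weak-validator prefix (W/) so tags compare by their opaque value,
// which is the weak comparison used for If-None-Match.
function opaqueTag(entityTag) {
  return entityTag.trim().replace(/^W\//, '');
}

// Decide which status a GET should return for a given If-None-Match header.
// currentEtag is the quoted entity-tag of the stored resource, e.g. '"abc123"'.
function expectedStatus(ifNoneMatchHeader, currentEtag) {
  if (!ifNoneMatchHeader) return 200; // unconditional request
  if (ifNoneMatchHeader.trim() === '*') return 304; // any current version matches
  const candidates = ifNoneMatchHeader.split(',').map(opaqueTag);
  return candidates.includes(opaqueTag(currentEtag)) ? 304 : 200;
}
```

Comparing `expectedStatus(sentHeader, knownEtag)` with the status the API actually returned turns the rule into an assertion that works for any resource.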

The following cURL examples test ETag-based caching behavior using the HTTPbin ETag endpoint:

- The first request retrieves a resource and captures its ETag value from the response headers.

- The second request includes an If-None-Match header with the previously received ETag. This tells the server to only send the resource if it has changed since the client last saw it.

If the resource hasn't changed, the server responds with 304 Not Modified instead of resending the full content.

# Initial request returns ETag

curl -X GET https://httpbin.org/etag/abc123 \

-H "Accept: application/json"

# Response includes: ETag: "abc123"

# Conditional request using If-None-Match

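# Entity-tags are quoted strings, so the quotes are part of the header value

curl -X GET https://httpbin.org/etag/abc123 \

-H 'If-None-Match: "abc123"'

# Expect 304 Not Modified when the tag matches

Cache invalidation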

Cache invalidation ensures that stale content gets removed when resources change. When you update a resource, related cached entries should become invalid to prevent serving outdated information. This can become complex when resources have relationships that affect multiple cache entries.

For example, HTTPbin's cache endpoint shows how caching headers work with time-based invalidation. The response can be cached for 60 seconds. After that time, the cache becomes stale, and clients must revalidate it with the server.

# Request with cache control headers

curl -X GET https://httpbin.org/cache/60 \

-H "Accept: application/json"

# Response includes: Cache-Control: public, max-age=60

In production applications, updating a user resource should invalidate not just that user's cache entry but also user collections, related profiles, and any aggregated data that includes that user.
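The time-based part of this behavior is easy to assert once the header is parsed. The following is a minimal sketch with assumed function names; it handles only the `max-age`, `no-store`, and `no-cache` directives and ignores others such as `s-maxage`.

```javascript
// Parse a Cache-Control header into a map of lowercase directive names.
// Directives without a value (e.g. "public") map to true.
function parseCacheControl(header) {
  const directives = {};
  for (const part of header.split(',')) {
    const [name, value] = part.trim().split('=');
    if (!name) continue;
    directives[name.toLowerCase()] =
      value === undefined ? true : value.replace(/^"|"$/g, '');
  }
  return directives;
}

// A stored response may be reused without revalidation only while its age is
// below max-age and neither no-store nor no-cache is present.
function isFresh(header, ageSeconds) {
  const directives = parseCacheControl(header);
  if (directives['no-store'] || directives['no-cache']) return false;
  const maxAge = Number(directives['max-age']);
  return Number.isFinite(maxAge) && ageSeconds < maxAge;
}
```

For the header shown above, `isFresh('public, max-age=60', 30)` is true and `isFresh('public, max-age=60', 60)` is false, which marks the point at which a client must revalidate.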

Client-server separation testing

Client-server separation ensures that user interface concerns remain distinct from data storage and business logic concerns. This separation allows clients and servers to develop independently. For example, you can update your mobile app without changing the server or scale your backend infrastructure without affecting client applications.

Interface boundary validation

The interface boundary defines exactly what clients can and cannot access directly. Boundary violations occur when APIs leak internal implementation details through responses or error messages. Tests must check interface boundaries by validating that APIs abstract implementation details and don't expose server internals to clients.

For example, if your API returns an error like this, it is a boundary violation because the message exposes internal database details.

{

"error": "SQLSyntaxErrorException: syntax error at line 1 near 'FROM users;'"

}

Instead, the API should return a generic, user-friendly error:

{

"error": "Invalid request parameters"

}

Independent evolution testing

True separation means clients and servers can change without coordinating releases. New client versions should work with existing servers, and server updates shouldn't break existing clients. This independence becomes critical when you have multiple client applications or when clients update on schedules different from those of the servers.

API versioning supports this independence by preserving backward compatibility while the API continues to evolve. The following cURL examples test backward compatibility by accessing different versions of the REST Countries API. The first request uses the legacy v2 endpoint while the second uses the newer v3.1 endpoint.

# Older clients work with legacy version

curl -X GET https://restcountries.com/v2/name/france \

-H "Accept: application/json"

# Newer clients can use enhanced features

curl -X GET https://restcountries.com/v3.1/name/france \

-H "Accept: application/json"

Testing should confirm that both endpoints respond correctly with expected data and formats. It is particularly important to include this form of testing in regression test suites.
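One way to put this into a regression suite is to store a baseline response for each published version and compare later responses from the same version against it. The sketch below is an assumption-laden illustration: the function name is made up, the comparison covers top-level fields only, and the sample objects in the usage note are hypothetical rather than actual REST Countries payloads.

```javascript
// Compare a stored baseline with a fresh response from the same API version
// and report top-level fields that disappeared or changed type.
// Added fields are ignored because they do not break existing clients.
function findBreakingChanges(baseline, current) {
  const changes = [];
  for (const [field, oldValue] of Object.entries(baseline)) {
    if (!(field in current)) {
      changes.push(`${field}: removed`);
      continue;
    }
    const oldType = Array.isArray(oldValue) ? 'array' : typeof oldValue;
    const newValue = current[field];
    const newType = Array.isArray(newValue) ? 'array' : typeof newValue;
    if (oldType !== newType) {
      changes.push(`${field}: ${oldType} -> ${newType}`);
    }
  }
  return changes;
}
```

For instance, a baseline of `{ name: 'France', capital: 'Paris' }` compared with `{ name: { common: 'France' }, capital: 'Paris', flag: 'fr' }` reports `name: string -> object`, a change that belongs in a new version rather than in an existing one.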

Layered system testing

Layered systems allow intermediary components like proxies, load balancers, and gateways to sit between clients and servers without breaking the communication flow. These layers can improve performance through caching, add security through filtering, or provide scalability through load distribution. The primary requirement is that clients shouldn't need to know about these intermediary layers.

Validating intermediary proxy behavior

Proxies intercept client requests and forward them to servers, potentially modifying headers or filtering content along the way. Well-implemented proxies preserve the essential meaning of requests and responses while adding their own value. Problems occur when proxies modify requests in ways that break application logic or strip important headers.

The cURL example below tests how requests travel through intermediary layers using HTTPbin's headers endpoint.

# Request that passes through proxy layers

curl -X GET https://httpbin.org/headers \

-H "Accept: application/json" \

-H "Authorization: Bearer token123"

The request includes Accept and Authorization headers that are critical for proper API function. Before reaching its destination, the request might pass through load balancers, firewalls, CDNs, or API gateways. The response echoes the headers that reached the server, which may include headers added by intermediaries, such as X-Forwarded-For or X-Real-IP. Comparing the echoed headers with the ones you sent confirms that essential headers weren't stripped and that intermediaries are working transparently.
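That comparison can be automated with a small helper. This is a sketch under assumptions: the function name is illustrative, and `received` stands for the header map that an echo endpoint reports back, with names compared case-insensitively because HTTP header names are not case-sensitive.

```javascript
// Compare the headers a client sent with the headers the origin reports
// having received, and list any that were stripped or modified on the way.
function findAlteredHeaders(sent, received) {
  const receivedLower = {};
  for (const [name, value] of Object.entries(received)) {
    receivedLower[name.toLowerCase()] = value;
  }
  const problems = [];
  for (const [name, value] of Object.entries(sent)) {
    const seen = receivedLower[name.toLowerCase()];
    if (seen === undefined) {
      problems.push(`${name}: stripped`);
    } else if (seen !== value) {
      problems.push(`${name}: modified`);
    }
  }
  return problems;
}
```

Headers that intermediaries add are deliberately ignored here; the check only fails when something the client sent did not arrive intact.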

Load balancer transparency testing

Load balancers distribute requests across multiple server instances to handle high traffic volumes. From the client's perspective, requests should work identically whether they hit server A or server B. This transparency breaks when servers maintain different states or when session affinity isn't handled properly.

Testing load balancer behavior requires sending multiple requests and verifying consistent responses regardless of which backend server processes each request.

# Multiple requests should get consistent results

curl -X GET https://httpbin.org/get

curl -X GET https://httpbin.org/get

curl -X GET https://httpbin.org/get

Each response format, header, and behavior should be consistent across all requests. If you were testing against a load-balanced API with actual user data, repeated requests for the same resource should return identical information, confirming that different server instances maintain the same data.

Code-on-demand testing

Code-on-demand is an optional REST constraint that allows servers to extend client functionality by sending executable code along with data. It allows servers to push JavaScript, applets, or scripts to clients when static responses aren't sufficient for complex interactions.

The most common implementation involves servers sending JavaScript code that clients execute to enhance their functionality. For example, payment processors often send client-side validation scripts to check credit card formats before submission. Web applications also commonly use this approach when servers send HTML pages containing JavaScript, which improves the user experience.

Unlike other REST constraints, code-on-demand should only be utilized when necessary. When implemented, applications must work correctly even when clients can't execute the provided code. This means providing fallback mechanisms where core functionality remains available through standard HTTP requests.

To test code-on-demand, verify that the executable code is properly delivered and runs as intended on supported clients. Additionally, test the application's behavior on clients that do not support or disable code execution to ensure fallback mechanisms function correctly and core features remain accessible.

Best practices for REST API testing

The constraint-focused testing approach we've covered outlines the technical requirements for REST API validation. Following best practices will help you implement practical testing frameworks in your development workflows.

Design tests for constraint compliance

Start with constraint-focused test categories instead of standard functional groupings. Create test suites that validate statelessness, ensure uniform interface compliance, and verify cacheability. Each constraint might show a different failing point across your REST architecture.

For example, use multiple constraint-specific tests instead of evaluating user creation as a single functional test. Verify that POST requests don't depend on previous session state (statelessness), that the URIs follow consistent patterns (uniform interface), and that responses include appropriate cache headers (cacheability).

Prioritize constraint violations over edge cases

Focus your testing effort on scenarios that could violate REST principles. Test what happens when clients send requests in random order, when intermediary proxies modify headers, or when cache validation fails.

To test client-server separation, verify that the API doesn't expose internal implementation details in responses. For example, the following Jest test inspects error responses: it requests a nonexistent resource to trigger a 404 and uses HTTPbin's status endpoint to simulate a 400, then checks that the responses remain client-appropriate.

// Test client-server separation - error responses shouldn't expose internals

test('should not expose internal implementation details in error responses', async () => {

// Test 404 error doesn't reveal internal details

const notFoundResponse = await fetch('https://jsonplaceholder.typicode.com/posts/99999');

expect(notFoundResponse.status).toBe(404);

const errorText = await notFoundResponse.text();

// Error message shouldn't contain database/server internals

expect(errorText.toLowerCase()).not.toMatch(/database|sql|mysql|postgres|redis|mongodb/);

expect(errorText.toLowerCase()).not.toMatch(/server error|stack trace|internal server/);

expect(errorText.toLowerCase()).not.toMatch(/localhost|127\.0\.0\.1|port \d+/);

// Test malformed request doesn't expose internals

const badResponse = await fetch('https://httpbin.org/status/400');

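expect(badResponse.status).toBe(400);

const badText = await badResponse.text();

expect(badText.toLowerCase()).not.toMatch(/database|sql|stack trace|exception/);

});

This code explicitly triggers error conditions and inspects the error messages to ensure that they don't reveal database schemas, internal service names, or server architecture and implementation details.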
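When many endpoints need the same inspection, the patterns can live in one reusable helper that also reports which kind of detail leaked. The categories and regular expressions below are illustrative assumptions, not an exhaustive list; extend them for your own stack.

```javascript
// Pattern groups are illustrative assumptions; extend them for your stack.
const LEAK_PATTERNS = {
  database: /\b(sql|mysql|postgres|mongodb|redis)\w*/i,
  stackTrace: /stack trace|exception|\bat \S+ \(\S+:\d+:\d+\)/i,
  network: /\blocalhost\b|\b\d{1,3}(\.\d{1,3}){3}\b/,
  filesystem: /\/(var|usr|home|etc)\/|[A-Z]:\\/,
};

// Return the names of every category whose pattern matches the error text.
function findLeaks(errorText) {
  return Object.keys(LEAK_PATTERNS).filter((category) =>
    LEAK_PATTERNS[category].test(errorText)
  );
}
```

Applied to the two error bodies shown earlier in this guide, the SQL exception message is flagged under `database` and `stackTrace`, while "Invalid request parameters" returns an empty list.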

Align tests with documentation

API documentation can become outdated the moment you add new functionality to your REST API. This creates a gap between what your tests validate and what your API actually does.

Your API's actual behavior should drive your test scenarios, not static documentation. When documentation reflects your system's current state, you can build tests that validate real functionality rather than making assumptions about what the API should do.

Maintaining accurate documentation requires two primary elements: an accurate understanding of the running system and tooling that allows you to create dynamic and sophisticated documentation that captures how systems actually behave in production.

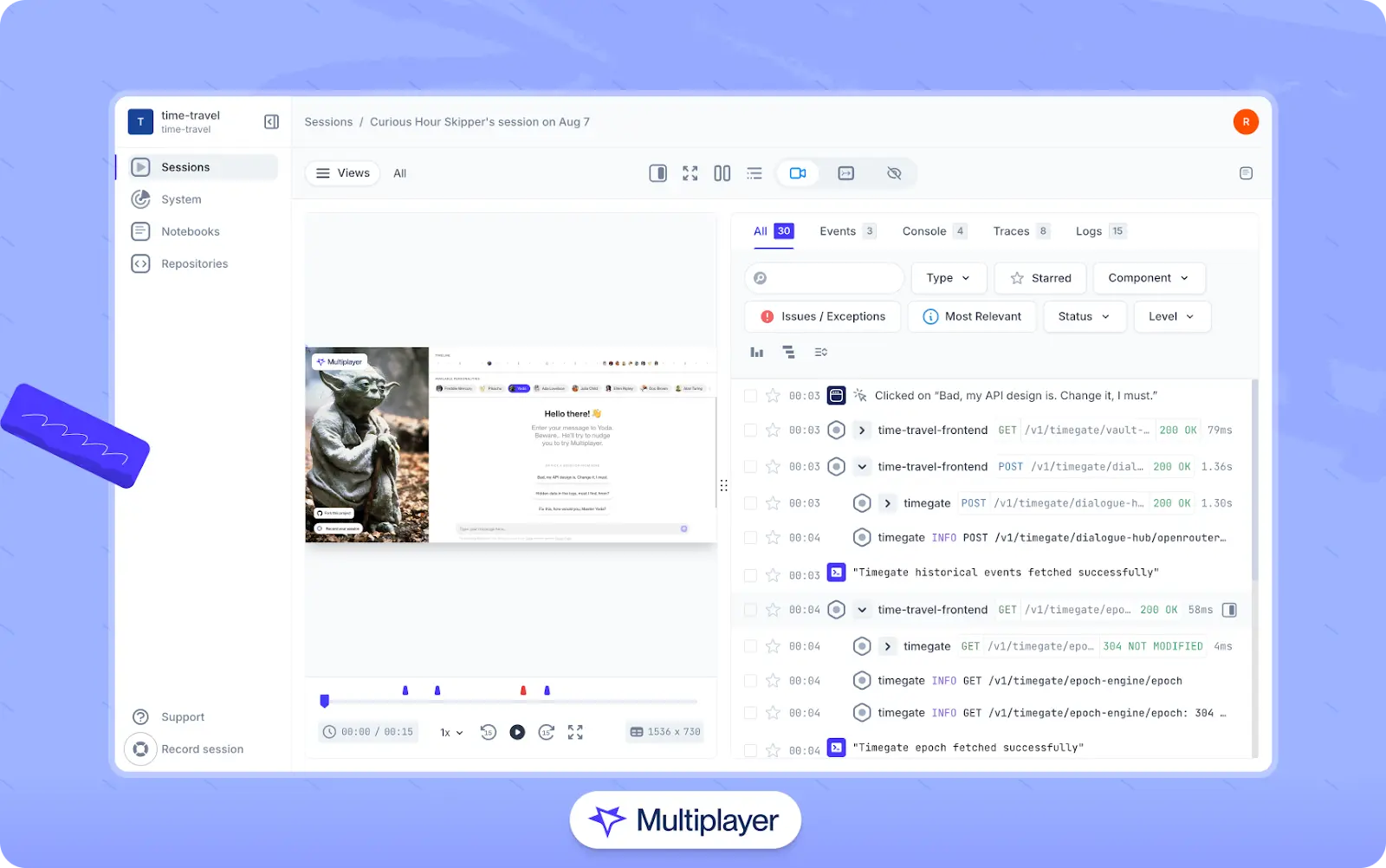

To gain an accurate understanding of the system, full-stack session recordings can help by correlating frontend user actions with backend behavior as requests flow through a distributed stack. If you capture these recordings using a tool like Multiplayer, they can also be used to auto-generate runnable test scripts within notebooks, which serve as documentation for API integrations, a centralized hub for system information, and reproducible blueprints of real user journeys. Unlike static documentation, notebooks reflect real API behavior and stay up to date as the system evolves.

Maintain test-code alignment through discovery

Code changes often break the connection between tests and the methods they validate. Regular system discovery helps you identify when new endpoints appear, when schemas change, or when dependencies change, allowing you to update your tests accordingly.

System discovery often means gaining a bird's-eye view of the running system and cataloging components, APIs, and dependencies. Multiplayer's system dashboard can support this by presenting an up-to-date list of all observed APIs and their relationships. This can help developers discover what has changed and where tests may need to be updated.

System discovery can also mean understanding how the system behaves in practice. Full-stack session recordings are useful in this context because they uncover changes as they pertain to real user behavior. If a developer receives a ticket about a failing workflow, the session recording shows exactly which endpoints were called, what payloads were sent, and how the backend responded. This context can be used manually or fed into AI coding assistants to help reproduce the issue, suggest fixes, or automatically generate updated test cases.

To ensure that these insights are not lost or remain as tribal knowledge, notebooks provide a centralized location to document discoveries through written text blocks and executable, chainable blocks for API calls and code snippets.

Automate constraint validation

REST constraints can degrade over time as you add features and modify existing endpoints. Continuous validation helps you catch these regressions throughout your development lifecycle.

Integrate constraint checks into CI/CD pipelines

Add constraint validation tests to your CI processes along with functional requirements. These checks run automatically with every code change, preventing deployments that violate REST principles.

For example, this GitHub Actions workflow adds statelessness validation in CI/CD pipelines by sending identical GET requests from multiple parallel jobs. The test verifies that all requests return identical responses regardless of timing, sequence, or which runner processes them.

# .github/workflows/statelessness-test.yml
name: REST Constraint Validation
on: [push, pull_request]
jobs:
  statelessness-test:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        runner: [1, 2, 3, 4]
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v4
        with:
          node-version: '20'
      - name: Test statelessness across multiple runners
        run: |
          node -e "
          // Node 20 provides a global fetch, so no extra package is required
          async function testStatelessness() {
            const response = await fetch('https://jsonplaceholder.typicode.com/posts/1');
            const data = await response.json();
            // Output consistent hash of response for comparison
            const hash = require('crypto').createHash('md5').update(JSON.stringify(data)).digest('hex');
            console.log('Response hash from runner ${{ matrix.runner }}:', hash);
            // Write hash to file, newline-terminated so files can be compared line by line
            require('fs').writeFileSync('response-hash-${{ matrix.runner }}.txt', hash + '\n');
          }
          // Exit non-zero on failure so the job fails instead of passing silently
          testStatelessness().catch((err) => { console.error(err); process.exit(1); });
          "
      - name: Upload response hash
        uses: actions/upload-artifact@v4
        with:
          # v4 artifacts are immutable, so each matrix job needs a unique name
          name: response-hash-${{ matrix.runner }}
          path: response-hash-*.txt
  verify-consistency:
    needs: statelessness-test
    runs-on: ubuntu-latest
    steps:
      - name: Download all response hashes
        uses: actions/download-artifact@v4
        with:
          pattern: response-hash-*
          merge-multiple: true
      - name: Verify all responses are identical
        run: |
          # All hash files should contain identical content
          if [ $(cat response-hash-*.txt | sort | uniq | wc -l) -ne 1 ]; then
            echo "STATELESSNESS VIOLATION: Different runners returned different responses"
            exit 1
          else
            echo "SUCCESS: All runners returned identical responses"
          fi

This workflow sends the same request from several jobs in parallel to detect state leakage or session dependency issues. If the responses differ between jobs, the verification job reports a statelessness violation and fails the build.

Monitor constraint compliance in production

Over time, production environments show constraint violations that testing environments often miss. Load balancers, caching layers, and real user patterns can expose issues that only appear under actual operating conditions.

HTTP method monitoring provides a practical example. Set up alerts when GET requests modify data (detected through database change logs), when PUT requests don't include complete resource representations, or when DELETE requests fail to remove resources properly.
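The alert rules above can be sketched as a filter over request records. The record shape is a hypothetical one for illustration: each entry is assumed to join an access-log line with the number of database writes attributed to the same request ID, plus a `stillExists` flag from a follow-up existence check. Field and function names are not taken from any particular monitoring tool.

```javascript
// Flag requests whose observed side effects contradict their HTTP method.
function findMethodViolations(entries) {
  const violations = [];
  for (const entry of entries) {
    const method = entry.method.toUpperCase();
    // Safe methods must not cause writes
    if ((method === 'GET' || method === 'HEAD') && entry.dbWrites > 0) {
      violations.push({ ...entry, reason: 'safe method wrote to the database' });
    }
    // A successful DELETE should leave no resource behind
    if (method === 'DELETE' && entry.status < 300 && entry.stillExists) {
      violations.push({ ...entry, reason: 'DELETE succeeded but resource remains' });
    }
  }
  return violations;
}
```

Running this over a sliding window of recent requests and alerting on a non-empty result gives an early signal, though correlating database writes with request IDs depends on what your logging already captures.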

Establish constraint-focused debugging workflows

REST constraint violations often create subtle bugs that are difficult to trace. These issues commonly appear as intermittent failures, unexpected behaviors, or performance degradation under load.

When investigating REST API issues, start by identifying which architectural constraint might be violated rather than diving into functional code. Statelessness violations cause inconsistent behavior across requests, caching issues create stale data problems, and uniform interface violations break client integrations.

Create a debugging checklist that reviews each constraint. For example, in client-server separation, verify that error messages don't expose internal implementation details or database schemas. For layered systems, check that intermediary proxies aren't modifying critical headers or request content.

Correlate distributed traces with constraint violations

REST violations often span multiple services and intermediary layers. Distributed tracing that correlates requests across your entire system can identify where constraints fail.

When debugging a caching issue, trace the complete request path from the client through the CDN, load balancer, gateways, and application servers. Look for points where cache headers get modified or stripped (check for missing Cache-Control or ETag headers), where conditional requests fail (requests that should return 304 Not Modified return 200 instead), or where different servers return different ETags for the same resource (indicating inconsistent ETag generation).
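Locating the point where a header disappears can be expressed as a walk over the hops recorded in a trace. The data shape here is a hypothetical simplification: `hops` is assumed to be ordered from the origin server outward to the client, with each item holding the response headers observed as the response left that hop. Real tracing systems expose this information differently.

```javascript
// Return the name of the first hop at which a response header that was
// present upstream is no longer present, or null if it survives end to end.
function findHeaderDrop(hops, headerName) {
  const wanted = headerName.toLowerCase();
  const has = (hop) =>
    Object.keys(hop.responseHeaders).some((name) => name.toLowerCase() === wanted);
  for (let i = 1; i < hops.length; i++) {
    if (has(hops[i - 1]) && !has(hops[i])) {
      return hops[i].name;
    }
  }
  return null;
}
```

Calling it once for `ETag` and once for `Cache-Control` narrows a stale-data report to a specific layer before anyone reads application code.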

Tools like Multiplayer's full-stack session recordings can help capture these distributed traces and correlate them with frontend interactions. This capability creates complete debugging sessions that show how constraint violations affect real users.

Build executable constraint validation scenarios

Create interactive test scenarios that demonstrate how REST constraints work in practice rather than just verifying that they exist. Scenarios should combine multiple API calls, show the effects of different requests, highlight failing patterns, and let you modify parameters to analyze boundary conditions.

For example, build an interactive cacheability test confirming ETag behavior by making the same request multiple times with different If-None-Match headers. Show how responses change from 200 OK with full content to 304 Not Modified with empty bodies.

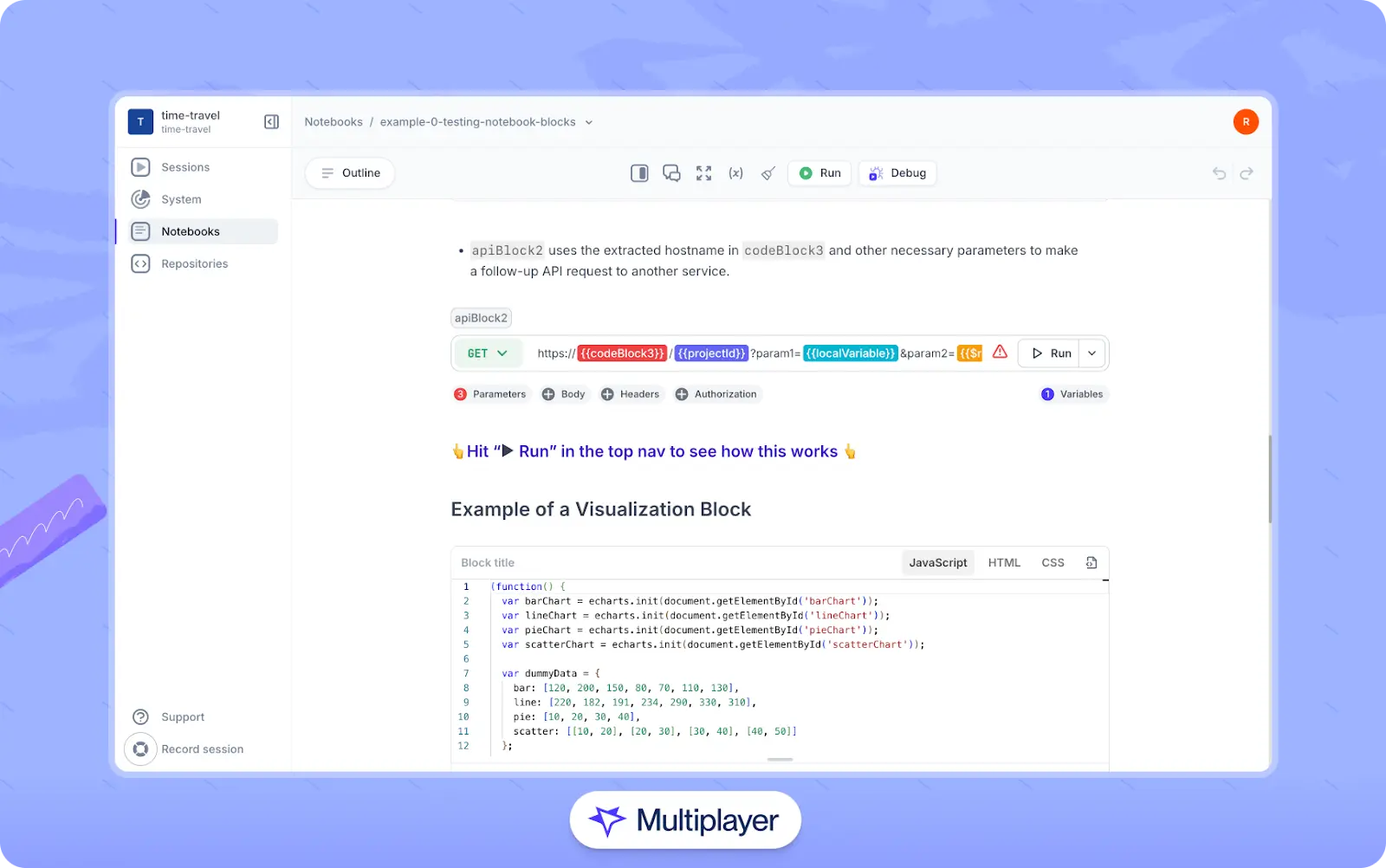

Platforms like Multiplayer notebooks make it easy to create and share these interactive scenarios. They allow you to design dynamic tests that illustrate REST constraints in action, helping teams explore, debug, and validate API behavior collaboratively and in real time.

Share knowledge across teams

Different team members need to understand REST constraints for different reasons. For example, developers need to implement them correctly, QA engineers need to test them thoroughly, and operations teams need to monitor them in production. Interactive documentation bridges these knowledge gaps.

Create executable examples that show how caching headers affect response behavior, how uniform interface violations break client code, or how layered system transparency works with proxies. These examples serve as both documentation and validation tools.

Notebooks implement this by combining code execution and collaboration features. You can build constraint validation scenarios that include API calls, code processing, and test guidelines. These notebooks become ready-to-reference documentation.

Conclusion

The six constraints we've discussed offer a framework for practical REST API testing. When you are starting with REST API testing, first identify which constraints matter most for your application's architecture and end-user experience.

If you're running distributed services across multiple servers, prioritize statelessness testing to prevent scaling issues. If your team deals with high-traffic scenarios, prioritize cacheability testing to optimize performance. For APIs consumed by multiple clients, focus on uniform interface validation to ensure consistent behavior.

Once your testing priorities are set, include validation in your development workflow as a standard practice. Consider automating tests to run with each code change, and implement monitoring to track constraint compliance. This approach minimizes reliance on manual testing and sets a foundation for practical automated testing workflows.