Six Modern Software Architecture Styles

Leveraging tried-and-tested solutions saves time, ensures reliability, and helps avoid common pitfalls. We look at six common architectural styles used in distributed systems and talk about how to choose the best one for your use case.

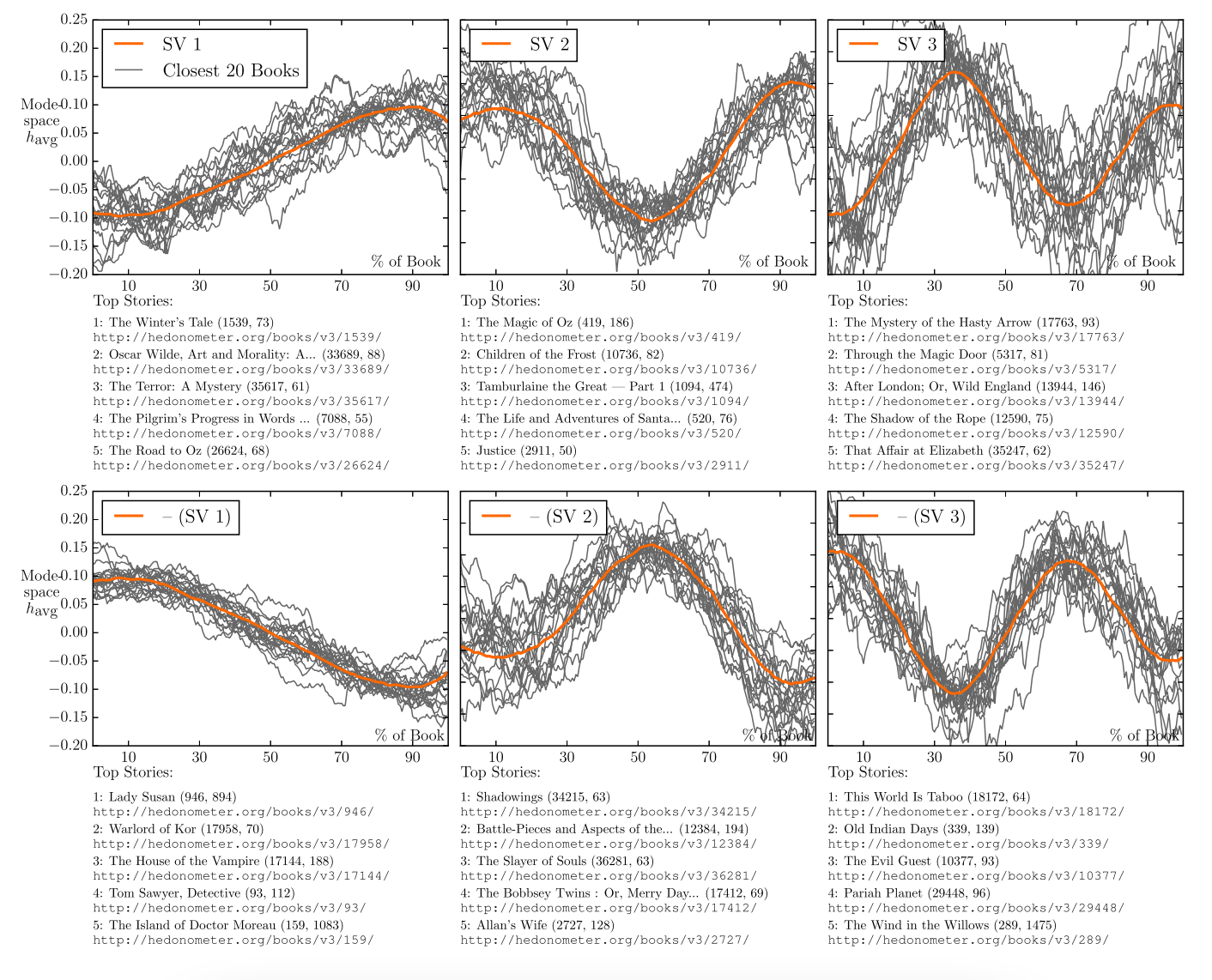

In 2016, researchers at the University of Vermont applied matrix decomposition, supervised learning, and unsupervised learning to a data set of 13,000 popular stories. They discovered that each story followed the pattern of one of six basic plots, and that the most popular stories followed the 'fall-rise-fall' and 'rise-fall' arcs.

If we were to analyze the most common software architecture designs used in modern applications, what patterns would emerge?

Just as popular stories tend to have common narrative structures, distributed software systems often exhibit recurring architectural patterns. These patterns emerge because they provide effective solutions to common design and implementation challenges.

However, just as storytellers balance the use of conventional story structures with innovative elements to create unique narratives, system and software architects balance the use of well-established architectural patterns with innovative solutions to craft use case-specific software systems.

In this blog post we’ll look at six common architectural styles used in distributed systems and talk about how to choose the best one for your use case.

Modern Architectural Styles for Distributed Systems

Architectural styles are often framed in opposition to each other (the monolith vs. microservices argument is well known), but the truth is that each has its own use cases, and they most often coexist in a company’s portfolio of applications.

“I kind of feel like microservice-vs-monolith isn’t a great argument. It’s like arguing about vectors vs. linked lists or garbage collection vs. memory management. These designs are all tools — what’s important is to understand the value that you get from each, and when you can take advantage of that value. If you insist on microservicing everything, you’re definitely going to microservice some monoliths that you probably should have just left alone. But if you say, ‘We don’t do microservices,’ you’re probably leaving some agility, reliability and efficiency on the table.” - Brendan Burns, co-creator of Kubernetes, now corporate vice president at Microsoft.

Building on tried-and-tested solutions saves time, improves reliability, and helps avoid common pitfalls. A well-designed software architecture brings businesses other benefits too, including:

- Rapid development → Developers can focus on implementing application-specific features rather than struggling with fundamental design decisions.

- Consistency and standardization → Multiple team members can easily maintain a shared understanding of the system's structure and collaborate on it.

- Improved maintainability → Architectural styles promote clean, modular, and organized software, making it easier to maintain and update over time.

Building an evolvable software system is a matter of strategy. It requires experience, intuition, flexibility, and foresight to select the right patterns (or the right “story plots”) that will serve your audience best.

In this post, we’ll review six popular architectural styles:

(1) Monolithic

(2) Microservices

(3) Event-Driven

(4) Serverless

(5) Edge Computing

(6) Peer-to-Peer

(1) Monolithic Architectural Style

One-liner Recap:

Everything in one easy box.

Background:

In the early days of software development, monoliths were the norm - all components were tightly integrated within a single codebase and runtime environment.

In the 2000s, however, Service-Oriented Architecture (SOA) started to gain traction, partly in response to the more painful aspects of monolithic deployments, as exemplified by the “Big Ball of Mud” described by Brian Foote and Joseph Yoder in 1999.

To be clear, the original Big Ball of Mud doesn’t match today’s image of monoliths as towers of rigid, inflexible, tightly coupled processes, where deploying a simple change to one part of the app may inadvertently affect a completely different one. It actually described a chaotic architecture made up of programs haphazardly heaped onto other programs, with data exchanged between them by means of file dumps onto floppy disks.

Nonetheless, the assumption that “monolith” inevitably means “big ball of mud” fueled the rise of other architectural styles. Yet the Monolithic Architecture can (and, in some cases, should) still be used in modern distributed systems. Hear us out.

Weaknesses:

In recent years, a stigma has become attached to starting a project with a straightforward, simple, performant monolith.

This stems partly from traumatizing experiences with legacy big-ball-of-mud monoliths, where every test is tedious, the code is hard to understand, and deployments are unreliable, and partly from the urge to try the latest technologies and match the industry’s expectations of “modern” approaches.

While it’s absolutely true that some monoliths become unwieldy as applications grow complex, the solution is fairly simple and well known (and, arguably, easier to implement than microservices): break the monolith down into modules, i.e. semi-independent components with well-defined interfaces. Modularity is a core concept that has been at the heart of most programming languages since the 1970s.
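To make “modules” concrete, here is a minimal Python sketch of a modular monolith. The two modules (`BillingModule` and `OrdersModule`) and their methods are hypothetical: each module keeps its data private and exposes a small public interface, and modules call each other in-process through that interface rather than reaching into each other’s internals.

```python
from dataclasses import dataclass, field


# --- billing module: a narrow public API over private storage (hypothetical) ---
class BillingModule:
    def __init__(self) -> None:
        self._invoices: dict[str, int] = {}  # private: order_id -> amount in cents

    def create_invoice(self, order_id: str, amount_cents: int) -> str:
        """Public entry point that other modules are allowed to call."""
        self._invoices[order_id] = amount_cents
        return f"INV-{order_id}"

    def amount_due(self, order_id: str) -> int:
        return self._invoices.get(order_id, 0)


# --- orders module: depends on billing's interface, not on its internals ---
@dataclass
class OrdersModule:
    billing: BillingModule
    _orders: dict[str, list[int]] = field(default_factory=dict)

    def place_order(self, order_id: str, item_prices_cents: list[int]) -> str:
        self._orders[order_id] = item_prices_cents
        # A plain in-process function call, not a network request.
        return self.billing.create_invoice(order_id, sum(item_prices_cents))


# Wiring happens in one place: the single deployable application.
billing = BillingModule()
orders = OrdersModule(billing=billing)
invoice_id = orders.place_order("42", [1999, 500])
print(invoice_id, billing.amount_due("42"))  # INV-42 2499
```

If billing ever needs to become a separate service, the narrow `create_invoice` boundary is the natural seam to cut along; only the call site in `OrdersModule` would change from a function call to a network call.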

Powers:

“For 95% of the software we build, a simple monolith with easy-to-understand code is the right choice. Could we be more fancy? Sure, but to what end? We have a responsibility to the people that come after us and maintain things. I tell our team all the time that they will never impress me with clever code or architecture. A simple to read and understand chunk of code that solves a problem clearly and effectively; now that is the manifestation of mastery!” - Keith Warren, CEO of Fern Creek Software

If you’re a single small-to-medium team, a monolithic architecture lets you effectively develop, test, deploy, and maintain your app, even if it’s a large codebase. By using well-defined modules, you can keep coupling to a minimum. Since everything is in one place, it’s easier to track down issues and fix them. You can also drastically reduce redundant boilerplate and write DRY (Don’t Repeat Yourself) code, which is no small thing given how difficult this best practice is to apply in a microservices architecture.

If and when you have a multimillion-line codebase that starts requiring a few sacrifices to the gods and several days per deploy, you can break it into services or start considering a microservices architecture. In his 2015 essay “MonolithFirst”, Martin Fowler writes that a reasonable way to arrive at a microservices system is to begin with a monolith and peel microservices away from it individually and carefully.
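One common way to peel services away incrementally is the “strangler fig” approach (another pattern named by Martin Fowler): a routing layer in front of the monolith sends requests for already-extracted capabilities to the new services and everything else to the monolith. A minimal Python sketch of that routing decision, where the path prefixes and service names are hypothetical:

```python
# Path prefixes that have already been extracted from the monolith (hypothetical).
EXTRACTED_ROUTES = {
    "/payments": "payments-service",
    "/notifications": "notifications-service",
}
MONOLITH = "monolith"


def route(path: str) -> str:
    """Return the backend that should handle a given request path."""
    for prefix, backend in EXTRACTED_ROUTES.items():
        # Match the prefix exactly or as a full path segment ("/payments/123").
        if path == prefix or path.startswith(prefix + "/"):
            return backend
    return MONOLITH  # anything not yet extracted stays in the monolith


for p in ["/payments/123", "/orders/7", "/notificationsettings"]:
    print(p, "->", route(p))
```

Extracting the next capability then becomes a small change to the routing table, and rolling back is just as cheap. The segment check keeps `/notificationsettings` from being mistaken for `/notifications`.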

Lastly, remember that before we had “microservices”, many teams relied on a “trunk and branches” approach, where the main monolith (the trunk) was supported by a few “branch” services. David Heinemeier Hansson, CTO and co-founder of Basecamp, calls it “the pattern of The Citadel: A single Majestic Monolith captures the majority mass of the app, with a few auxiliary outpost apps for highly specialized and divergent needs.”

Real-world use cases:

Dropbox, X (Twitter), Netflix, Facebook, GitHub, Instagram, and WhatsApp, along with many other companies, all started out as monolithic codebases. Many large organizations and projects still rely on monoliths to this day, for example Istio, Segment, Stack Overflow, and Shopify.

(2) Microservices Architectural Style

One-liner Recap:

Partition your problem into small, autonomous services that are deployed and scaled independently.

Background:

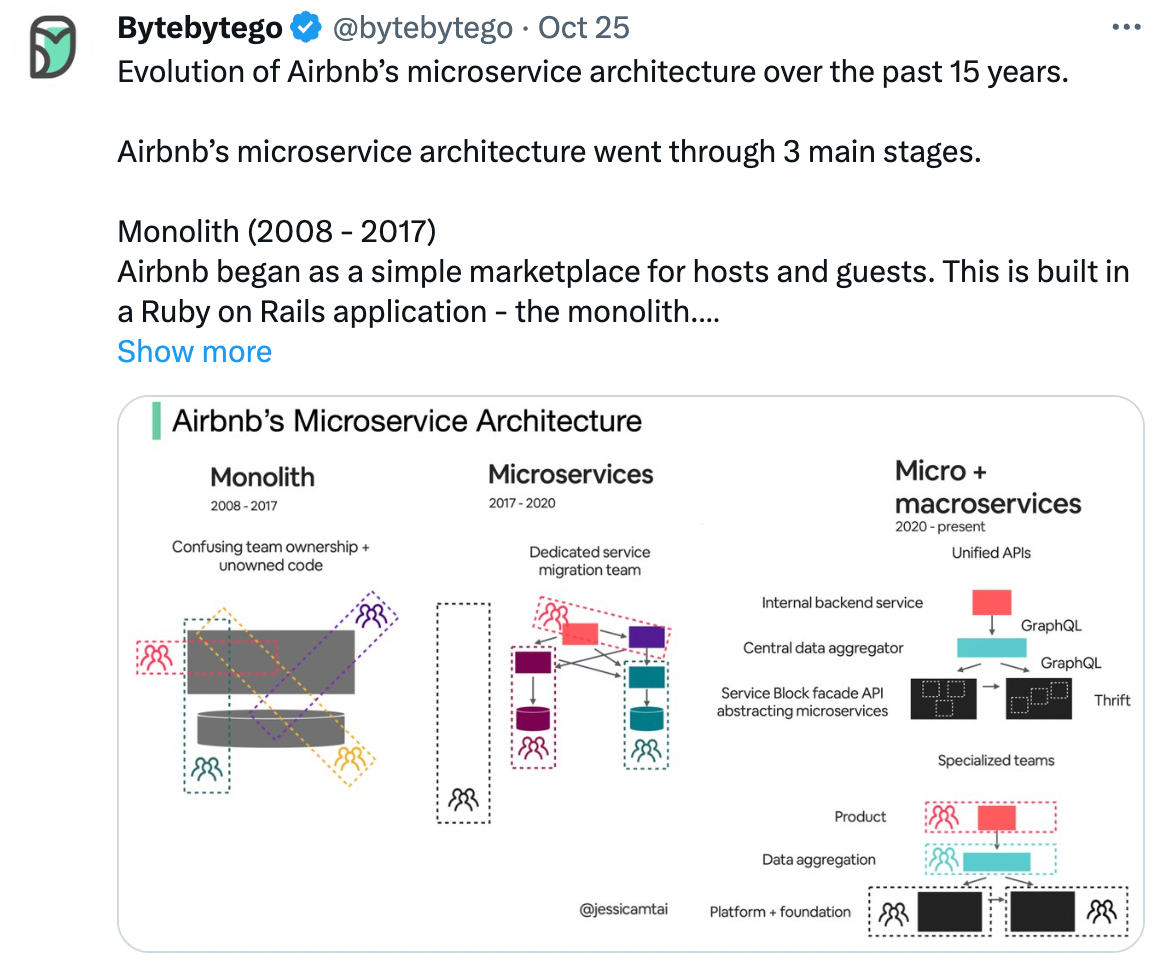

The Microservices Architectural Style is a direct evolution of Service-Oriented Architecture (SOA), only more prescriptive and shaped by real-world use cases. Microservices are the culmination of modular architecture: small, autonomous app components (modules) run in separate processes and communicate over a network to form an application.

SOA gained traction as an alternative to big-ball-of-mud monoliths (see above), but SOA implementations struggled because they lacked guidance on defining service boundaries. Microservices architectures address this by breaking a business domain into bounded contexts that collaborate in a decoupled manner, using more sophisticated network communication approaches.

Aside from solving technical issues, it’s important to note the connection between the rise in microservices popularity and the need for organizational clarity, autonomy, and independence in engineering teams.

Companies were struggling to improve developer velocity because of cross-team dependencies: most organizations were structured in “skill-centric” departments. This led to common development woes such as waiting on the infrastructure team to procure a server, waiting on the DBA team to make a schema change, waiting on the QA team to build a test to find a bug, etc.

Empowering individual teams by giving them full ownership of a service’s entire lifecycle was the solution that companies like Amazon proposed. In fact, when they first openly discussed the Microservices Architectural Style, the goal was to push the idea of independent development teams with few blockers, not necessarily to promote a specific software architecture.

Weaknesses:

In March 2023, Amazon Prime Video’s engineers made waves when they announced that they had migrated their service quality monitoring application from a Microservices Architecture to a Monolithic Architecture, with considerable benefits (the team reported a 90% reduction in infrastructure cost). It turns out that they had really migrated from AWS Step Functions to a fully fledged microservice… or better yet, a “macroservice”.

In fact, “microservice” can be a misnomer: such services can be quite large, and a better description would be “component service” or “correctly sized service.”

However, the discussions sparked by this article are indicative of the frustration with some common pitfalls when working with microservices:

- The premature adoption of microservices following a “design by buzzword” approach, rather than focusing on the business outcomes you’re trying to achieve.

- Creating unneeded complexity, especially when decomposing something too far. Microservices Architectures are notorious for becoming gigantic behemoths that, nonetheless, require engineers to have an accurate mental map of the entire system to know which services to bring up for any particular task - i.e. the “you have to know everything before doing anything” principle. It’s not a surprise that multi-billion dollar companies like Spotify found it necessary to invest significant internal resources to build tools that catalog their endless systems and services (although, we think we can help with that! 😉).

- Underestimating the investment in infrastructure. Implementing this architecture successfully requires a substantial investment of time, resources, money, and infrastructure. Failing to develop and sustain the sophisticated DevOps setup needed to maintain the delicate balance a Microservices Architecture demands is one of the most common causes of failure.

Ultimately, many organizations end up replacing the big ball of mud with distributed little balls of mud … and then blaming the architectural style itself instead of their poor architectural decisions.

Powers:

When implemented correctly, the Microservices Architecture can give you and your team magic powers to work more effectively and build applications at extraordinary scale.

When you have multiple teams with hundreds of engineers, coordinating any release of a monolithic application becomes incredibly painful: every change requires deploying or releasing the entire codebase at once (and each version update can bundle many changes!), which is risky and time-consuming.

Microservices liberate your development process, fostering flexibility that, in turn, accelerates your teams' velocity. Teams gain autonomy, deploying their code independently and at their own pace. This agility results in not only a quicker time to market but also the freedom to experiment with innovative solutions. Teams can select the most suitable technologies for their specific services, unhindered by standardized, one-size-fits-all approaches.

Another huge benefit lies in enhanced system reliability. When a component fails, the failure can be contained instead of propagating to other parts of the system. A single service can go down, be rolled back, or undergo maintenance without disrupting the entire system, so isolated issues don’t cascade into system-wide outages. The result is a more robust and fault-tolerant system architecture.
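That containment isn’t automatic: it usually depends on defensive patterns such as timeouts, fallbacks, and circuit breakers. Below is a minimal Python sketch of a circuit breaker with hypothetical thresholds and a hypothetical `flaky_recommendations` dependency. After `max_failures` consecutive errors, calls are short-circuited to a fallback until a cool-down period elapses, so a failing service degrades one feature instead of tying up its callers.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Stops calling a failing dependency for a while (hypothetical thresholds)."""

    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0) -> None:
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.failures = 0
        self.opened_at: Optional[float] = None  # set when the circuit "opens"

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_after_s:
                return fallback()  # open: don't even try the dependency
            self.opened_at = None  # cool-down over: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = time.monotonic()  # (re)open the circuit
            return fallback()
        self.failures = 0  # a success closes the circuit again
        return result


def flaky_recommendations() -> list[str]:
    # Hypothetical downstream service that is currently down.
    raise ConnectionError("recommendations service unavailable")


breaker = CircuitBreaker(max_failures=2)
for _ in range(3):
    # The page still renders, just without recommendations.
    print(breaker.call(flaky_recommendations, fallback=lambda: []))
```

On the third call the breaker is already open, so the failing service isn’t contacted at all; after the cool-down, a single trial call decides whether to close the circuit or reopen it.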

Real-world use cases:

DoorDash, Airbnb, Uber - the list of companies that have adopted the Microservices Architecture is extensive and continues to grow. These companies leverage microservices to enhance scalability, streamline development, and offer seamless, responsive services to their global user base.

(3) Event-Driven Architectural Style

One-liner Recap:

Your components or services communicate through events.

Background:

“With hybrid cloud-native implementation and microservices adoption, EDA gets a new focus by helping to address the loose coupling requirements of microservices and avoid complex communication integration. The adoption of the publish/subscribe communication model, event sourcing, Command Query Responsibility Segregation (CQRS), and Saga patterns help to implement microservices that support high scalability and resiliency for cloud deployment.” - Jerome Boyer, Distinguished Engineer, IBM Cloud and Cognitive Software

Event-Driven Architecture is not a new concept: it has been around for decades. In fact, Gartner proclaimed it the “next big thing” almost 20 years ago, when faster networks, general-purpose event management software tools, and emerging standards for event processing were paving the way for its adoption.

It’s important to underline that EDA is complementary to Microservices and SOA: it originates from the need for modern digital businesses to respond immediately to events as they occur, providing users with up-to-date information and rapid feedback.

Traditional architectures usually use a command-based communication pattern: they deal with data as batches of information to be inserted and updated (at some defined interval) and respond to user-initiated requests rather than new information.

In an Event-Driven Architecture, events are the means of communication between software components: each component responds to the events relevant to its function, and emits events that trigger actions in other components. In summary, EDA enables real-time, asynchronous, multicast, fine-grained, and filtered communication, for both predictable and unpredictable events.
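The communication model can be illustrated with a minimal, in-process publish/subscribe sketch in Python. It is synchronous and lives in a single process purely for readability; a real Event-Driven Architecture would put a message broker between producers and consumers to make delivery asynchronous and durable. The event name and handlers are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], None]


class EventBus:
    """Routes each published event to every handler subscribed to its type."""

    def __init__(self) -> None:
        self._subscribers: defaultdict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict[str, Any]) -> None:
        # The producer doesn't know who (if anyone) consumes the event.
        for handler in self._subscribers[event_type]:
            handler(payload)


bus = EventBus()
shipping_queue: list[str] = []
emails_sent: list[str] = []

# Two independent consumers react to the same event.
bus.subscribe("order_placed", lambda e: shipping_queue.append(e["order_id"]))
bus.subscribe("order_placed", lambda e: emails_sent.append(f"Thanks for order {e['order_id']}"))

bus.publish("order_placed", {"order_id": "42"})
print(shipping_queue, emails_sent)  # ['42'] ['Thanks for order 42']
```

The producer only knows the event type: adding a third consumer (say, analytics) requires no change to the code that publishes `order_placed`.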

Beyond real-time monitoring and responsiveness, this architectural style addresses various use cases, including data replication, parallel processing, and ensuring redundancy during service outages.

In recent years, the rise of cloud-native architectures, container-based workloads, and serverless computing has underscored EDA's value in enhancing the resilience, agility, reactivity, and scalability of modern applications.

Weaknesses:

According to a 2021 survey by Solace, Event-Driven Architecture is used by 72% of global businesses, yet only 13% of organizations have reached the “gold standard of Event-Driven Architecture maturity”. Communicating through events seems like a straightforward idea, but its simplicity is deceptive.

Deciding how events are identified, captured, and produced based on specific triggers or conditions requires careful consideration. When you evaluate the solutions for event generation, communication, error handling, consumption, event deployment, governance, etc., you realize that there’s much more complexity than you might have initially thought.

This type of architectural style also presents other challenges:

- Difficult testing and debugging. With the distributed and decoupled nature of event-driven applications it’s very hard to test the system as a whole and, likewise, hard to trace an event from source to destination. Since you don’t have a statement of the overall behavior of the system (i.e. you can’t just check the code to see how the events will be consumed), you need to watch the path that the individual event takes to understand what is occurring.

- Trade-offs in data consistency. When multiple components subscribe to and process events independently, ensuring data consistency across all services and databases can become complex. Implementing mechanisms like event sourcing and distributed transactions may be necessary to maintain data integrity in such scenarios.

- Complex documentation and maintainability. As with any distributed, highly decoupled application, you need a proper design to understand how the components interact with each other. Maintaining a clear, up-to-date system documentation becomes crucial but can be difficult to achieve in practice. Developers often grapple with the need for effective solutions to document the intricate architecture, making comprehensive documentation and visualization tools essential.
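A common way to make event paths traceable is to stamp every event with a correlation ID (shared by all events in one business flow) and a causation ID (the event that directly triggered it). The Python sketch below assumes that convention; the field names, services, and the in-memory list standing in for a centralized logging system are hypothetical.

```python
import uuid
from typing import Any, Optional


def make_event(event_type: str, data: dict[str, Any],
               parent: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    return {
        "id": str(uuid.uuid4()),
        "type": event_type,
        # Inherit the parent's correlation ID; a root event starts a new one.
        "correlation_id": parent["correlation_id"] if parent else str(uuid.uuid4()),
        "causation_id": parent["id"] if parent else None,
        "data": data,
    }


trace_log: list[dict[str, Any]] = []  # stand-in for centralized logging


def log(service: str, event: dict[str, Any]) -> None:
    trace_log.append({"service": service, "type": event["type"],
                      "correlation_id": event["correlation_id"]})


# A root event and two downstream events it causes, handled by different services.
placed = make_event("order_placed", {"order_id": "42"})
log("orders", placed)
charged = make_event("payment_charged", {"amount": 2499}, parent=placed)
log("payments", charged)
shipped = make_event("shipment_created", {"carrier": "demo"}, parent=charged)
log("shipping", shipped)

# Filtering the logs by one correlation ID recovers the whole path.
path = [e["service"] for e in trace_log if e["correlation_id"] == placed["correlation_id"]]
print(path)  # ['orders', 'payments', 'shipping']
```

Distributed tracing tools build on the same idea, propagating IDs automatically through message headers instead of event payloads.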

Powers:

The main benefits of the Event-Driven Architecture are the business outcomes that come from improved responsiveness and agility: the more quickly you can get information about events where they need to be, the more effectively your business can seize opportunities to delight users, shift production, and re-allocate resources.

EDA's decoupled nature allows for independent component updates without impacting the entire system, and higher resiliency to service failures.

Another significant advantage lies in scalability: because events are broadcast to multiple components of the system, it is possible to process large volumes of data and transactions in parallel. This makes it easier to handle high traffic and spikes in demand.

Real-world use cases:

Leading companies like Heineken, Schwarz Group, Unilever, and Wix have embraced the Event-Driven Architecture to revolutionize their operations. The applications are numerous: from sharing and democratizing data across applications, to connecting IoT devices for data ingestion and analytics, to event-enabling microservices.

(4) Serverless Architectural Style

One-liner Recap:

Build and run services without having to manage the underlying infrastructure.

Background:

The Serverless Architectural Style is a further evolution of cloud-native architectures, which were designed to take advantage of the unique capabilities of cloud platforms to meet scalability, reliability, and agility requirements.

Like the other architectural styles, the concepts that underpin the Serverless Architecture have been around for a long time, but they became a fixture in mainstream software development with the rise of cloud computing and, more precisely, after Amazon introduced the first mainstream Function as a Service (FaaS) platform, AWS Lambda, in 2014.

With FaaS, developers write their application code as a set of discrete functions, each performing a specific task when triggered by an event. These functions are then deployed to a cloud provider, which fully abstracts away infrastructure management.
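Here is a minimal sketch of what such a function can look like, assuming AWS Lambda’s Python handler convention (`handler(event, context)`) and an S3 “object created” notification as the triggering event. The bucket, key, and the “processing” step are hypothetical placeholders; real work (e.g. generating a thumbnail) would replace the string formatting.

```python
from typing import Any
from urllib.parse import unquote_plus


def lambda_handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    processed = []
    # One notification can carry several records; handle each of them.
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (e.g. spaces as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        processed.append(f"{bucket}/{key}")  # placeholder for the real work
    return {"processed": processed}


# Local invocation with a trimmed-down, hand-written sample event.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "uploads"}, "object": {"key": "photos/cat+picture.jpg"}}}
    ]
}
print(lambda_handler(sample_event, context=None))
```

The function holds no server state: the platform invokes it once per event and runs more copies concurrently when events arrive faster, which is what lets it scale up and back down to zero.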

The ‘on-demand’ nature of functions allows them to be scaled up to meet a spike in demand and scaled back down to zero on completion of the task - this makes them ideal for unpredictable loads and for quick (fail-fast) experimentation.

It’s important to note that the Serverless Architecture is closely related to the Microservices Architecture, and the choice between the two is far from binary. Many modern applications combine serverless and microservices; in fact, AWS Lambda functions often play a crucial part in microservices, invoked through event sources.

This has often led to the misconception that functions are microservices themselves - which ultimately caused some unfortunate, massively complex architectural designs when AWS Lambda functions first came out (see ‘Nano services’).

Weaknesses:

One of the biggest drawbacks of the Serverless Architecture is vendor lock-in.

Intellectually, we all know the benefits of a cloud-agnostic deployment, but they’re never as evident as when things go south and you suddenly need to migrate to a different cloud provider. The ability to switch cloud providers and services lets you select the one with the best price, performance, and stability for your use case.

Unfortunately, FaaS providers natively support only certain technologies (i.e. programming languages, frameworks, and tools), and while workarounds exist, they can be complicated and problematic. As a result, you generally can’t run the same function unchanged on different providers, and any migration will require reworking it to some extent.
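Lock-in can’t be eliminated, but a common way to limit its blast radius is to keep business logic free of provider-specific types and wrap it in thin adapters (the “ports and adapters”, or hexagonal, approach). A Python sketch under that assumption: the shipping-quote logic and both adapters are hypothetical, and the AWS adapter assumes an API Gateway proxy integration, where the request body arrives as a JSON string in `event["body"]` and the response is a dict with `statusCode` and `body`.

```python
import json
from typing import Any


# Core logic: plain Python with no knowledge of any cloud provider.
def quote_shipping(weight_kg: float, express: bool) -> float:
    base = 5.0 + 1.5 * weight_kg
    return round(base * (2 if express else 1), 2)


# Adapter for AWS Lambda behind an API Gateway proxy integration.
def aws_handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    body = json.loads(event.get("body") or "{}")
    price = quote_shipping(float(body["weight_kg"]), bool(body.get("express", False)))
    return {"statusCode": 200, "body": json.dumps({"price": price})}


# Adapter for any other runtime (a container, a CLI, another FaaS provider).
# During a migration, only this thin layer needs rewriting.
def plain_handler(params: dict[str, Any]) -> dict[str, float]:
    return {"price": quote_shipping(float(params["weight_kg"]),
                                    bool(params.get("express", False)))}


print(aws_handler({"body": json.dumps({"weight_kg": 2, "express": True})}, None))
print(plain_handler({"weight_kg": 2}))
```

`quote_shipping` and its tests move between providers unchanged; only the adapters encode each platform’s event and response shapes.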

Another common hurdle is right-sizing services: you need to achieve the right level of cohesion of functionality while maintaining low coupling. Developers need to find a happy medium between the extremes of nano services and monolithic services; in other words, we want to avoid small microservices with noisy communication between them, but also avoid lumping all functionality into one microservice!

Powers:

The Serverless Architecture is great at enabling fast, continuous software delivery. You don’t have to think about managing infrastructure, provisioning, or planning for demand and scale!

More specifically, these are the four main benefits:

- Easy Scalability: While microservices are generally used for continuous, steady workloads, serverless functions excel at tasks that run occasionally and for a limited time. For instance, this architectural style is well suited to resource-intensive tasks like processing image uploads.

- Cost Efficiency: By adopting a pay-as-you-go model, you only pay for actual usage and scale back down as soon as the task is complete.

- Reduced Operational Overhead: By abstracting infrastructure management, it enables developers to focus on writing and deploying the application code. Serverless functions can also be reused across multiple applications, streamlining development efforts. For example, a serverless function for user profile picture uploads can be applied to various applications within an organization.

- Reliability and Availability: The loosely coupled nature of serverless functions reduces dependencies across components, enhancing reliability. If one function fails, the rest of the application can often keep working. Because the provider manages the underlying infrastructure, there are fewer potential points of failure under your control, which further improves reliability and availability.
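To see why pay-as-you-go suits spiky workloads, it helps to run the numbers. The Python sketch below models a per-request plus per-GB-second pricing scheme (the general shape used by FaaS platforms such as AWS Lambda) against an always-on VM. All prices are made-up placeholders, not any provider’s actual rates; plug in current pricing before drawing conclusions.

```python
# All prices below are hypothetical placeholders, not real provider rates.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.00002
ALWAYS_ON_PRICE_PER_HOUR = 0.05  # a small always-on VM


def pay_per_use_cost(invocations: int, avg_duration_s: float, memory_gb: float) -> float:
    """Cost = request charge + compute charge (GB-seconds actually consumed)."""
    request_cost = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_cost = invocations * avg_duration_s * memory_gb * PRICE_PER_GB_SECOND
    return request_cost + compute_cost


def always_on_cost(hours: float) -> float:
    """You pay for every hour the machine exists, busy or idle."""
    return hours * ALWAYS_ON_PRICE_PER_HOUR


# A spiky workload: 100,000 short jobs a month vs. a VM running all month (~730 h).
spiky = pay_per_use_cost(invocations=100_000, avg_duration_s=0.5, memory_gb=0.5)
vm = always_on_cost(hours=730)
print(f"pay-per-use: ${spiky:.2f}, always-on: ${vm:.2f}")  # pay-per-use: $0.52, always-on: $36.50
```

With these placeholder rates the occasional workload is far cheaper on demand, but the comparison flips for steady, high-volume traffic, which is why constant workloads are usually a better fit for long-running services.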

Real-world use cases:

A 2019 O’Reilly survey on serverless found that nearly 40% of respondents’ organizations were using serverless functions in some form. In fact, while it’s possible to build entire applications in a pure serverless architecture, in most cases only specific services are serverless: those that are not in constant use and have relatively short run times, especially resource-intensive ones.

For example, services particularly suited to being run as serverless functions include: rapid document conversion, predictive page rendering, log analysis on the fly, processing uploaded S3 objects, bulk real-time data processing, etc.

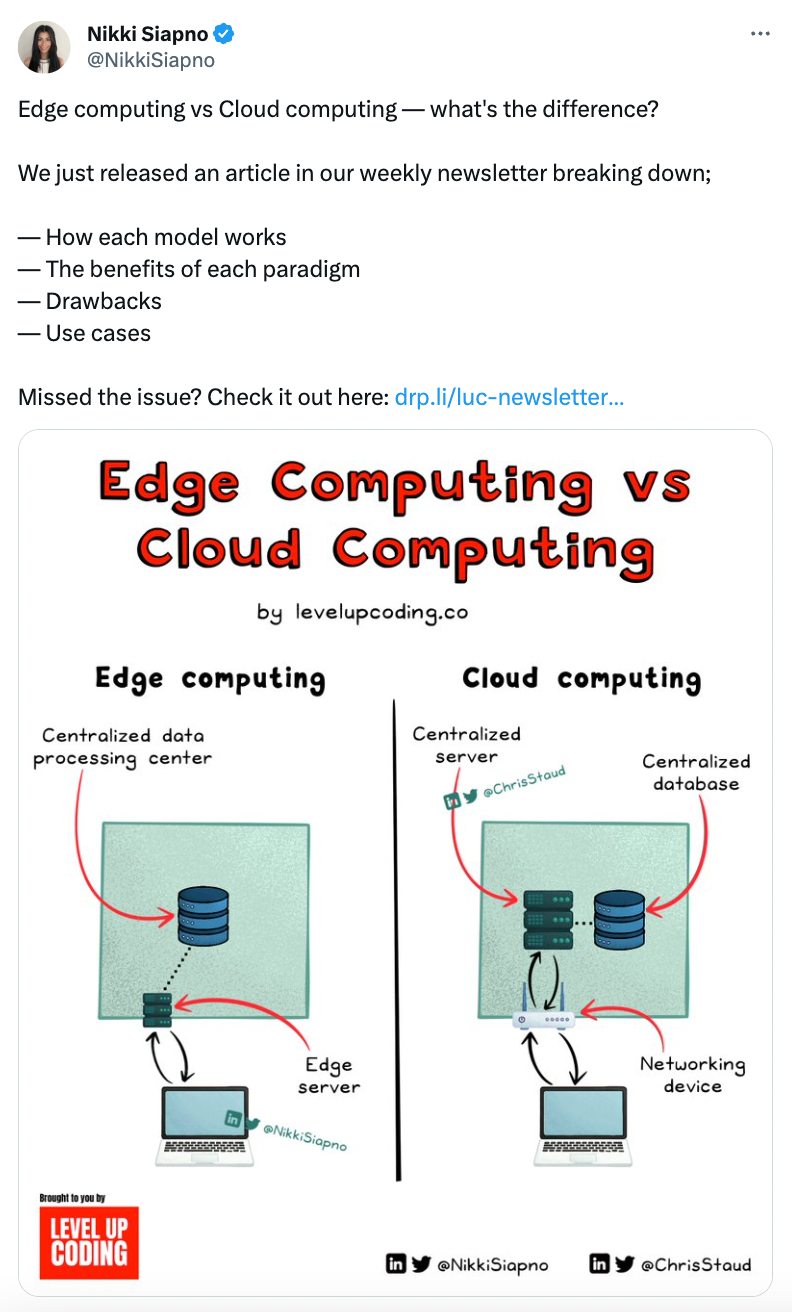

(5) Edge Computing Architectural Style

One-liner Recap:

Bring computation and data storage closer to the end user.

Background:

Edge computing is yet another evolution of cloud computing and a “variation” of the Serverless Architecture, which responds to applications’ requirements of high-speed, high availability, and near-instantaneous processing of data.

Unlike the traditional cloud model, which relies on centralized data centers, edge computing brings data and computational power closer to the sources of the produced data - hence, closer to the applications and the users that consume it - significantly reducing bandwidth and response times.

Edge computing can be viewed as a layered approach: the top layer is composed of cloud data centers (including central and interconnected regional data centers), while the edge layer is composed of edge data centers, edge devices (e.g. smartphones, tablets, etc.), and edge things (e.g. scanners, sensors, beacons, etc.).

Databases are embedded within the individual elements of the edge layer and the captured data is replicated and synchronized across the entire system (i.e. between the cloud databases, the edge data centers, and the embedded databases on the devices) ensuring it is always consistent and available.
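The text doesn’t prescribe how replicas are reconciled. As one illustrative, deliberately simple option, here is a Python sketch of last-writer-wins (LWW) merging per key. It assumes reasonably synchronized clocks and breaks timestamp ties by node ID; the keys, values, and node names are hypothetical. Production sync engines often use richer techniques (e.g. CRDTs or vector clocks), since LWW silently discards the losing side of concurrent writes.

```python
# Each replica maps key -> (value, timestamp, node_id); node_id breaks ties.
Replica = dict[str, tuple[str, float, str]]


def merge(a: Replica, b: Replica) -> Replica:
    """Last-writer-wins merge: for each key, keep the newest write."""
    merged = dict(a)
    for key, entry in b.items():
        current = merged.get(key)
        # Compare (timestamp, node_id) so ties resolve the same way everywhere.
        if current is None or (entry[1], entry[2]) > (current[1], current[2]):
            merged[key] = entry
    return merged


cloud = {"thermostat": ("21C", 100.0, "cloud"), "mode": ("eco", 90.0, "cloud")}
device = {"thermostat": ("23C", 120.0, "device-7"), "fan": ("on", 110.0, "device-7")}

# Merging in either order yields the same state, so replicas converge.
assert merge(cloud, device) == merge(device, cloud)
print(merge(cloud, device))
```

Because the merge is commutative (and idempotent), replicas that exchange state in any order end up identical, which is the property a sync layer needs when devices reconnect at unpredictable times.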

Weaknesses:

Managing data at the edge isn’t always easy. Not only is it a relatively recent paradigm shift in cloud computing that still lacks sophisticated orchestration and automation tooling, but it also comes with the following challenges:

- Limited computational resources: Edge devices often face limitations in processing power, memory, and storage, which impacts the speed and complexity of the data processing tasks.

- Data security and privacy concerns: Edge devices often don’t have the same security measures as centralized data centers, or the ability to leverage existing security standards and solutions, which makes them more vulnerable to breaches. (There is a counterbalancing benefit, though: sensitive data doesn’t have to be moved away from the relevant edge nodes.)

- Inconsistency from cold starts: This is the bane of any serverless system and the price you pay for not having to maintain and deploy your own instances. In Edge Computing Architectures, however, the problem can be worse because the workload is spread across more servers and locations, so it is much less likely that the code will be "warm" on any particular server when a request arrives.

To a lesser extent, intermittent connectivity can still disrupt the transmission of data to the central cloud servers or other edge nodes, even though Edge Computing Architectures are designed to function in such conditions. However, its impact is far smaller than in architectural styles that rely solely on the cloud for storing and processing data.

Powers:

“Today, I’m convinced that we were wrong when we launched Cloudflare Workers to think of speed as the killer feature of edge computing, and much of the rest of the industry’s focus remains largely misplaced and risks missing a much larger opportunity. I'd propose instead that what developers on any platform need, from least to most important, is actually: Speed < Consistency < Cost < Ease of Use < Compliance.” - Matthew Prince, Co-founder & CEO of Cloudflare

Edge Computing Architectures enable use cases that were previously impossible because critical systems couldn’t operate independently of connectivity. Consider a catastrophic network failure in which the cloud data center and edge data center layers become unavailable: edge devices with embedded data processing would continue to run in isolation, with 100% availability and real-time responsiveness, until connectivity is restored.
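That “continue running in isolation” behavior usually rests on an offline-first design: the device reads and writes its local store, queues outgoing changes, and flushes the queue once connectivity returns. A minimal Python sketch, where the `uplink` function, the keys, and the simulated outage are all hypothetical:

```python
from collections import deque
from typing import Callable


class EdgeStore:
    """Local-first key-value store for a hypothetical edge device."""

    def __init__(self, uplink: Callable[[str, str], None]) -> None:
        self.local: dict[str, str] = {}
        self.outbox: deque[tuple[str, str]] = deque()
        self.uplink = uplink  # sends one change upstream; raises ConnectionError if offline

    def write(self, key: str, value: str) -> None:
        self.local[key] = value           # the device keeps working regardless
        self.outbox.append((key, value))  # remember the change for later sync

    def read(self, key: str) -> str:
        return self.local[key]            # served locally, no network round trip

    def sync(self) -> int:
        """Flush queued changes in order; stop at the first failure. Returns how many were sent."""
        sent = 0
        while self.outbox:
            key, value = self.outbox[0]
            try:
                self.uplink(key, value)
            except ConnectionError:
                break                     # still offline: keep the rest queued
            self.outbox.popleft()
            sent += 1
        return sent


# Simulated connectivity and cloud database (both hypothetical).
online = False
cloud_db: dict[str, str] = {}


def uplink(key: str, value: str) -> None:
    if not online:
        raise ConnectionError("no connectivity")
    cloud_db[key] = value


store = EdgeStore(uplink)
store.write("door", "locked")
print(store.read("door"), store.sync())  # locked 0  (outage: change stays queued)
online = True
print(store.sync(), cloud_db)            # 1 {'door': 'locked'}
```

Flushing in order and stopping at the first failure keeps upstream writes in the same sequence the device made them.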

But keeping data near the edge of the network has many critical advantages beyond high availability, and even beyond faster data analysis and cost-effectiveness.

Another key benefit is that sensitive data never has to leave the “edge”, i.e. the physical area where it is gathered and used (e.g. within a country’s borders). This is particularly relevant given the growing number of countries pursuing regulations that ensure their laws apply to their citizens’ personal data.

Real-world use cases:

The Edge Computing Architectural Style finds diverse applications in real-world scenarios and is particularly beneficial for systems requiring real-time responses, such as IoT devices, autonomous systems, and immersive technologies like virtual and augmented reality. Think, for example, of connected homes, autonomous vehicles, robotic surgery, and advanced real-time gaming.

(6) Peer-to-Peer Architectural Style

One-liner Recap:

Liberté, Égalité, Fraternité for all the peers!

Or, technically speaking, decentralized communication and resource sharing directly between devices.

Background:

It can be argued that the basic concepts of Peer-to-Peer (P2P) computing were already envisioned in the earliest discussions of software systems and networking: in fact, they’re mentioned in RFC 1, “Host Software”, by Steve Crocker, and echoed in Tim Berners-Lee's vision for the World Wide Web.

P2P is a concept that sparked conversations outside of software engineering, with heavy social and political implications, and it’s still one of the core topics in the network neutrality controversy. It’s not difficult to see why: it’s based on the idea of decentralization, promoting a more “egalitarian” model where each node (peer) is an equal participant in the network.

In contrast to the classic centralized client/server architecture, in P2P, nodes play a dual role, acting as both a client and a server. This means that peers can directly interact with each other, sharing resources such as processing power, disk storage, and network bandwidth, without the need for a central server.

Although P2P had previously been used in many application domains, it’s the rise of the file-sharing app Napster in 1999 that brought it to the attention of the wider public - both for the innovative idea of sharing music files for free with strangers and the legal controversy that followed!

P2P Architecture is still very much relevant today: it’s a complementary and critical technology for building scalable distributed systems, especially when it comes to edge computing applications. By integrating P2P into Edge Computing Architectures, you leverage its established communication model to facilitate direct communication and data sharing, thereby enhancing interoperability and enabling real-time, decentralized decision-making.

Weaknesses:

Embracing P2P architectures inherently presents a complexity challenge due to the decentralized nature of this type of network. It’s not just a question of scale due to the number of nodes and the challenge of coordinating and managing communication among them. It’s also complicated by the dynamic nature of P2P architectures - with peers joining and leaving frequently.

The management of network topology requires robust algorithms for discovering, connecting, and maintaining peer relationships (and robust tooling for documenting the system architecture).
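As one concrete example of such an algorithm (not something the text prescribes), Kademlia, a distributed hash table used by several P2P systems, measures the “distance” between node IDs as their bitwise XOR; each lookup step asks the closest known peers for peers even closer to the target. The Python sketch below shows only the distance metric and the closest-peer selection, using tiny 8-bit IDs for readability (the original Kademlia design uses 160-bit IDs); the peer IDs are hypothetical.

```python
def xor_distance(a: int, b: int) -> int:
    """Kademlia-style distance: the bitwise XOR of two node IDs."""
    return a ^ b


def closest_peers(target: int, known_peers: list[int], k: int = 2) -> list[int]:
    """Return the k known peers whose IDs are XOR-closest to the target ID."""
    return sorted(known_peers, key=lambda peer: xor_distance(peer, target))[:k]


# Hypothetical 8-bit peer IDs known to this node.
peers = [0b0001_0000, 0b0101_1100, 0b1100_0011, 0b0101_0001]
print(closest_peers(target=0b0101_0000, known_peers=peers))  # [81, 92]
```

Because XOR distance rewards long shared ID prefixes, each hop roughly halves the remaining distance, which is what keeps lookups fast even as peers continuously join and leave.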

This type of architecture also presents security challenges: without a central authority to manage security, P2P networks can be vulnerable to attacks (e.g. each peer is responsible for implementing its own layer of network security, and peers themselves might share malicious files or launch attacks against other peers).
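Since peers can’t trust each other, a common mitigation is to trust only data that can be checked: a peer verifies each received chunk against a hash obtained from a trusted source, in the spirit of BitTorrent’s per-piece hashes. A minimal Python sketch using SHA-256 from the standard library, where the chunk contents and the “trusted source” are hypothetical:

```python
import hashlib


def verify_chunk(data: bytes, expected_sha256_hex: str) -> bool:
    """Accept a chunk from a peer only if its SHA-256 digest matches the expected one."""
    return hashlib.sha256(data).hexdigest() == expected_sha256_hex


original = b"chunk 0 of song.mp3"
expected = hashlib.sha256(original).hexdigest()  # published by a trusted source

print(verify_chunk(original, expected))            # True
print(verify_chunk(b"tampered payload", expected))  # False
```

This protects integrity only: it stops a malicious peer from substituting content, but it doesn’t stop that peer from refusing service or probing the network, which still calls for the other safeguards mentioned above.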

Not to mention that P2P systems can face more legal and ethical issues than centralized or client-server systems due to the lack of a central authority or responsibility.

Powers:

On the positive side, P2P architectures boast enhanced resilience, scalability, and fault tolerance, along with reduced dependency on a central point of failure. Also, since each peer can act as a server, the system’s capacity grows as peers join: each peer adds its own resources to the overall system.

Real-world use cases:

The P2P Architectural Style is used for many types of applications, with content distribution being the most popular (e.g. file sharing à la Napster). This includes software publication and distribution, content delivery networks, streaming media, and peercasting for multicasting streams.

An interesting project in this space is SETI@home - which, until March 2020, used crowdsourced computers to analyze radio signals with the aim of searching for signs of extraterrestrial intelligence.

Besides content sharing, other common applications of the P2P architecture include VoIP and communication platforms (e.g. Skype), blockchain technology (e.g. Bitcoin), distributed machine learning, and distributed social networks (not to be confused with the “fediverse” conversation).

Choosing the Best Architectural Style for You

"… the startups we audited that are now doing the best usually had an almost brazenly ‘Keep It Simple’ approach to engineering. Cleverness for cleverness sake was abhorred.” - Ken Kantzer, Co-founder and CTO of Truss, former VP of Engineering at FiscalNote

Building distributed systems is not easy: everything - from development, debugging, deployment, testing - is more challenging and time-consuming. When given a choice, the best approach is to keep it simple - boring even!

Even when leveraging tried-and-tested architectural styles, there is no default approach to building a distributed system: it will always depend on your application. You will have to clarify the business outcomes you’re looking to achieve, consider the trade-offs of one decision over another, and weigh the design benefits.

Ultimately, you’ll likely end up with a hybrid architecture, combining elements of multiple architectural styles where they make the most sense for specific parts or features of the application. For example, you may start with a well-modularized monolith, then add a few microservices for new features, designing some of them as serverless functions if their workloads are spiky.

One immutable truth of system design is that systems are mutable and ever evolving. You should always seek to refine, simplify, and improve the architecture of the system, as the needs of your organization change, the IT landscape evolves, and the capabilities of selected providers expand.

Regardless of the software architecture style you choose, remember that software systems live, breathe, and change. A dead, ossifying software system will rapidly bring an organization to a standstill, preventing it from responding to new threats and opportunities.

Multiplayer allows you to design, develop, and manage your distributed software with a visual and collaborative tool. Visualize your system architecture, regardless of its style or complexity, from a 10,000 foot view down to the individual components and dependencies. Sign up for free to see for yourself! 😉