Being a backend developer today feels harder than 20 years ago

All the reasons why backend development today feels harder, or, at the very least, vastly more complex than 20 years ago.

It’s very hard to objectively compare the experience of a backend developer today with that of one 20 years ago (i.e. the late 90s and early 00s), given that we’re solving different problems, using different tools, and following different methodologies. Yet the role today feels harder, or, at the very least, vastly more complex.

There are many reasons why modern software development is more accessible. For example, the barriers to entry are much lower, due to the vast number of high-quality, freely available online resources, and extensive communities eager to share knowledge.

Free tooling and compilers have also improved substantially: just think of how Git (introduced in 2005) and platforms like GitHub (launched in 2008) have revolutionized version control and collaborative coding practices. Not to mention all the new tooling for visualization, monitoring, mapping, bug reporting, documentation, and more.

However, today's engineers face the challenge of developing larger and more complex systems, compounded by the vast array of choices in software development tools, languages, platforms, and methodologies. Deciding on the right combination to meet a project’s needs is daunting, with each option carrying its own set of advantages and limitations.

Furthermore, the task of creating systems that are not only robust and scalable but also secure and compliant with strict data regulations significantly increases the intricacy of backend development. These systems must support high user traffic with consistent reliability, making the role of the backend developer more demanding than ever.

Backend developers also benefit from more than two decades of industry learnings, but that legacy means navigating a sea of standard solutions, each with its own trade-offs, known and unknown problems, and a likely short shelf life given the pace of innovation.

This article explores how backend development has transformed over the last two decades, highlighting the key factors that have made the field increasingly complex.

(1) Higher User Expectations

As computing power increased, so too did user expectations for what software could achieve.

Consider a midrange computer from the late 90s: a 233MHz single-core processor, 32MB of RAM, a 3GB hard drive, and a 2MB video card with no substantial independent computing power. Some users had a modem, but most didn’t have a persistent internet connection.

A machine like that could only support so much, which limited even the most innovative programmers.

In stark contrast, today's computers boast exponentially greater memory and processing power, alongside capabilities that were previously unimaginable. This technological leap enables the development of far more complex and powerful software.

Users now expect software that's accessible everywhere, works seamlessly across all devices with a responsive design, and offers real-time updates and collaborative features. They want software that performs flawlessly, is always available, and regularly introduces new features to meet their changing needs.

The expectation that a platform can change its specifications instantly, for example by scaling compute and memory up or down on demand, is only possible thanks to the virtualization of hardware and the complex layers of middleware and Software as a Service (SaaS) needed to run code efficiently.

As software requirements evolve and increase, achieving them becomes more challenging. Backend engineers must leverage a multitude of technologies to architect intricate distributed systems. This complexity, in turn, not only complicates the development process but also introduces new testing, operational, and security challenges.

(2) Scale & Complexity of Systems

Today's software challenges greatly exceed those of the past: extensive computer networks handle immense volumes of transactions, and systems must expand to serve millions of users.

We could give many examples of how modern systems differ significantly from those of the '90s (e.g. the introduction of caching, higher expectations for test coverage, exception handling, compiler optimization, etc.). Instead, we’ve chosen to highlight the following, which clearly show how extraordinarily complex modern backend development is and why it demands teamwork from large, distributed teams.

Distributed Computing

With the rise of cloud computing and the demand for scalable, resilient applications, distributed computing became the norm. This paradigm shift in how computer systems are designed and deployed brings additional complexity in managing data consistency, ensuring fault tolerance, and designing for scalability.

Polyglot Large Codebases

It's not uncommon to encounter codebases exceeding 50 million lines. This is in part due to the growth in complexity of languages and development environments.

Consider the evolution of C++, which was already complex in 1998. Today the language is three times as big and encompasses multiple programming paradigms and abstraction layers, making true expertise nearly unattainable for a single individual. The same trend extends across languages and ecosystems: no modern language can be taught thoroughly in fewer than a thousand pages of textbook, and if you were to include the standard templates and libraries, the page count could double again.

But the size of codebases is also a reflection of the fact that you need to juggle multiple languages within a system to meet all its requirements, resulting in a polyglot codebase that could include half a dozen languages.

Multithreading & Multiprocessing

Two decades ago, managing multiple concurrent threads was an exotic challenge, while today, it's a standard practice.

Furthermore, the advent of multi-core CPUs has revolutionized the approach to multiprocessing.

Unlike the single-core processors of the '90s, which imposed limitations on concurrent task execution, today's multi-core architectures facilitate the parallel processing of CPU-intensive tasks like mathematical computations and image processing. This shift from single to multi-core processing has dramatically enhanced performance capabilities, making multithreading and multiprocessing indispensable tools for modern computing.
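To make the multi-core point concrete, here is a minimal Python sketch that splits a CPU-bound job into chunks and runs each chunk in a separate process using the standard library's `concurrent.futures.ProcessPoolExecutor`. The prime-counting workload and the function names are hypothetical, chosen only for illustration. Processes are used rather than threads because, in the default CPython build, the global interpreter lock prevents threads from executing Python bytecode on several cores at once.

```python
import math
from concurrent.futures import ProcessPoolExecutor


def count_primes(bounds: tuple[int, int]) -> int:
    """CPU-bound work (hypothetical): count primes in [start, stop) by trial division."""
    start, stop = bounds
    return sum(
        1
        for n in range(max(start, 2), stop)
        if all(n % d for d in range(2, math.isqrt(n) + 1))
    )


def split_range(stop: int, parts: int) -> list[tuple[int, int]]:
    """Split [0, stop) into at most `parts` contiguous, non-overlapping chunks."""
    step = max(1, -(-stop // parts))  # ceiling division, never zero
    return [(i, min(i + step, stop)) for i in range(0, stop, step)]


def parallel_count_primes(stop: int, workers: int = 4) -> int:
    """Count primes below `stop`, one chunk per worker process."""
    # Each chunk runs in its own process, so chunks can execute on different
    # CPU cores at the same time instead of taking turns on a single core.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, split_range(stop, workers)))


if __name__ == "__main__":
    # The guard matters where workers start by re-importing this module.
    print(parallel_count_primes(100_000))  # 9592
```

The `if __name__ == "__main__":` guard is part of the design: on platforms that start worker processes by re-importing the main module, leaving it out would make the workers fail at startup.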

Abstractions

Since the 90s, the way we use abstractions in software development has evolved significantly, influenced by advances in technology and changes in programming paradigms. Where we once relied mainly on object-oriented programming (OOP) to model real-world entities, we now have a wide array of abstractions, from high-level programming languages and frameworks to architectural patterns like microservices and serverless computing.

Abstractions play a crucial role in managing the complexity of modern distributed systems by allowing developers to focus on the bigger picture without getting bogged down in intricate details.

However, the increased reliance on abstractions comes with its challenges. Debugging and fully understanding a system becomes a much more daunting task, partly because traditional visualization tools might not provide a clear picture of the system's architecture.

Despite this, the shift towards more dynamic and automated tools is promising, offering developers real-time insights into the architecture of their systems, moving beyond traditional methods like whiteboards and static diagrams.

APIs & Dependencies

Today's software development landscape is markedly different from that of the '80s and '90s, primarily due to the integral role of APIs and the complex web of dependencies they introduce. Gone are the days of direct hardware manipulation, replaced by an environment where leveraging APIs for third-party services, microservices, or cloud-based resources is the norm.

The growth of APIs necessitates meticulous dependency management to prevent the creation of systems that are convoluted, fragile, or opaque, making them challenging to understand, maintain, or expand.

Consequently, a significant portion of development efforts is now devoted to managing these dependencies. This involves careful version control of APIs, ensuring system compatibility, and proactively addressing potential API changes or deprecations to safeguard the application's functionality.
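Version compatibility checks are one small, mechanical part of this work. The sketch below assumes the APIs follow Semantic Versioning (MAJOR.MINOR.PATCH), where a major version bump signals a breaking change. The `Version` type, the `is_compatible` helper, and the service names are hypothetical; real package managers apply richer rules (pre-release tags, version ranges, and so on).

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        # Expects plain "MAJOR.MINOR.PATCH"; pre-release/build tags are not handled.
        major, minor, patch = (int(part) for part in text.split("."))
        return cls(major, minor, patch)


def is_compatible(required: str, provided: str) -> bool:
    """Can a client built against `required` safely call the `provided` API version?"""
    req, prov = Version.parse(required), Version.parse(provided)
    if req.major != prov.major:
        return False  # a major bump signals breaking changes
    if req.major == 0:
        # SemVer makes no stability promise before 1.0.0, so also pin the minor version.
        return req.minor == prov.minor and prov.patch >= req.patch
    # Same major version: any later minor/patch release should be backward compatible.
    return prov >= req


if __name__ == "__main__":
    # Hypothetical dependencies: version the client was built against vs. deployed version.
    dependencies = {
        "payments-api": ("2.3.0", "2.5.1"),
        "search-api": ("1.4.2", "2.0.0"),
        "geo-api": ("0.4.1", "0.5.0"),
    }
    for name, (required, provided) in dependencies.items():
        status = "ok" if is_compatible(required, provided) else "INCOMPATIBLE"
        print(f"{name}: built for {required}, deployed {provided} -> {status}")
```

Running a check like this in CI before a deployment is one way to catch an upstream major-version change before it reaches production.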

System Recovery

Given the current complexity of software applications, designing a resilient system that can withstand failures gracefully and recover quickly is much more challenging.

With multiple nodes and potential network failures, developers need to implement techniques like replication, where data is duplicated across multiple nodes, and distributed consensus algorithms (such as Paxos or Raft), so that the system can continue operating even if some nodes fail.
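The replication half of this can be sketched as a majority-quorum write: send each write to every replica and treat it as committed only if more than half acknowledge it. This is a simplified, in-memory illustration with hypothetical `Replica` and `quorum_write` names. It is not a consensus algorithm such as Paxos or Raft, which also handle leader election, ordering, and cleanup of partially applied writes, but it shows the majority rule those algorithms rely on.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """In-memory stand-in for a storage node (hypothetical, for illustration)."""
    name: str
    up: bool = True
    data: dict[str, str] = field(default_factory=dict)

    def write(self, key: str, value: str) -> bool:
        if not self.up:
            return False  # simulated node crash or network partition
        self.data[key] = value
        return True


def quorum_write(replicas: list[Replica], key: str, value: str) -> bool:
    """Send the write to every replica; it counts as committed only if a majority acked."""
    acks = sum(replica.write(key, value) for replica in replicas)
    majority = len(replicas) // 2 + 1
    return acks >= majority


if __name__ == "__main__":
    cluster = [Replica(f"node-{i}") for i in range(5)]
    cluster[0].up = cluster[1].up = False  # two simulated failures
    print(quorum_write(cluster, "user:42", "active"))    # True: 3 of 5 acked
    cluster[2].up = False                                # a third failure
    print(quorum_write(cluster, "user:42", "inactive"))  # False: only 2 of 5 acked
```

With five replicas a majority is three, so writes survive two failed nodes but not three. Note that the failed second write still landed on two nodes; repairing that kind of partial state is exactly what real consensus protocols add.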

Even if you subscribe to Werner Vogels' mantra of "everything fails all the time" and follow all the best practices, there’s still a possibility that low-probability problems become consistent failures as you scale, and that a wrong recovery approach could have non-obvious second-order effects leading to bigger problems.
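The arithmetic behind that concern is simple. If each operation independently fails with a small probability p, the chance of at least one failure across n operations is 1 - (1 - p)^n, which approaches certainty as n grows. The figures below (a one-in-a-million failure rate, one thousand versus ten million requests) are hypothetical, chosen only to show the effect, and treating failures as independent is itself a simplification.

```python
import math


def prob_at_least_one_failure(p: float, n: int) -> float:
    """Probability that at least one of n independent operations fails,
    when each fails with probability p: 1 - (1 - p) ** n."""
    # log1p/expm1 keep the result accurate when p is tiny.
    return -math.expm1(n * math.log1p(-p))


if __name__ == "__main__":
    p = 1e-6  # hypothetical: one failure per million requests
    for n in (1_000, 10_000_000):
        print(f"{n:>10,} requests: P(>=1 failure) = {prob_at_least_one_failure(p, n):.6g}, "
              f"expected failures = {n * p:g}")
```

At a thousand requests a failure is a rare event; at ten million it is all but guaranteed, with about ten expected failures, which is how a "one in a million" bug turns into a daily incident at scale.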

Security

Today, cybersecurity is not just about protecting systems from malware (as was mostly the case 20 years ago). It encompasses a broad spectrum of threats, including data breaches, ransomware, and attacks by nation-states and advanced persistent threats (APTs). The complexity, size, and interconnectedness of modern systems, coupled with their reliance on a myriad of APIs and external services, have expanded the attack surface exponentially.

Protecting against a diverse array of threats requires rigorous validation of external inputs, meticulous management of software dependencies, and an ever-vigilant approach to potential vulnerabilities across all components of a system.
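As an illustration of validating external inputs, the sketch below checks a JSON request body before any of it reaches business logic: it caps the body size, rejects unknown fields, and checks each field's type and range against an allow-list. The field names, limits, and the `parse_create_user` and `ValidationError` names are hypothetical; in practice teams often rely on a schema-validation library rather than hand-written checks.

```python
import json
import re

# Hypothetical rules for a "create user" request body.
USERNAME_RE = re.compile(r"[a-z0-9_]{3,32}")
ALLOWED_FIELDS = {"username", "age"}
MAX_BODY_BYTES = 4096


class ValidationError(ValueError):
    """Raised when untrusted input does not match the expected shape."""


def parse_create_user(raw_body: bytes) -> dict:
    if len(raw_body) > MAX_BODY_BYTES:
        raise ValidationError("request body too large")
    try:
        payload = json.loads(raw_body)
    except (ValueError, RecursionError) as exc:  # bad JSON, bad encoding, or deep nesting
        raise ValidationError("body is not valid JSON") from exc
    if not isinstance(payload, dict):
        raise ValidationError("body must be a JSON object")
    unknown = set(payload) - ALLOWED_FIELDS
    if unknown:
        raise ValidationError(f"unexpected fields: {sorted(unknown)}")
    username = payload.get("username")
    if not isinstance(username, str) or not USERNAME_RE.fullmatch(username):
        raise ValidationError("username must be 3-32 characters of a-z, 0-9 or _")
    age = payload.get("age")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(age, bool) or not isinstance(age, int) or not 13 <= age <= 130:
        raise ValidationError("age must be an integer between 13 and 130")
    # Return only the validated fields, never the raw payload.
    return {"username": username, "age": age}
```

Rejecting unexpected fields (for example, a client-supplied `is_admin`) is a deliberate choice: an allow-list fails closed when attackers send input the developer did not anticipate.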

The cost of security breaches has also escalated, with implications extending far beyond financial damage to include significant impacts on user trust, regulatory compliance, and the very sustainability of businesses.

(3) An Overwhelming Amount of Choice

The technological landscape is advancing rapidly, presenting developers with an overwhelming array of programming languages, frameworks, tools, and platforms to choose from. This abundance of options, coupled with the vast amount of information available online, makes it challenging for developers to make well-informed decisions.

This situation can often lead to decision paralysis, a phenomenon highlighted by Barry Schwartz in his discussion on the paradox of choice, where too many options can actually hinder productivity rather than enhance it.

A very simple example is choosing a programming language. A few decades ago, the choice was often predetermined by the limited options taught at universities (e.g. QBasic, Turbo Pascal, Fortran, C, and eventually C++) and by the scarcity of learning resources. If you were particularly motivated, you could save up to buy the specific programming book that neither your university nor your library carried.

Today, “what programming language should I learn” is the first suggestion Google autocompletes when you start typing the question, and the search returns 667M results.

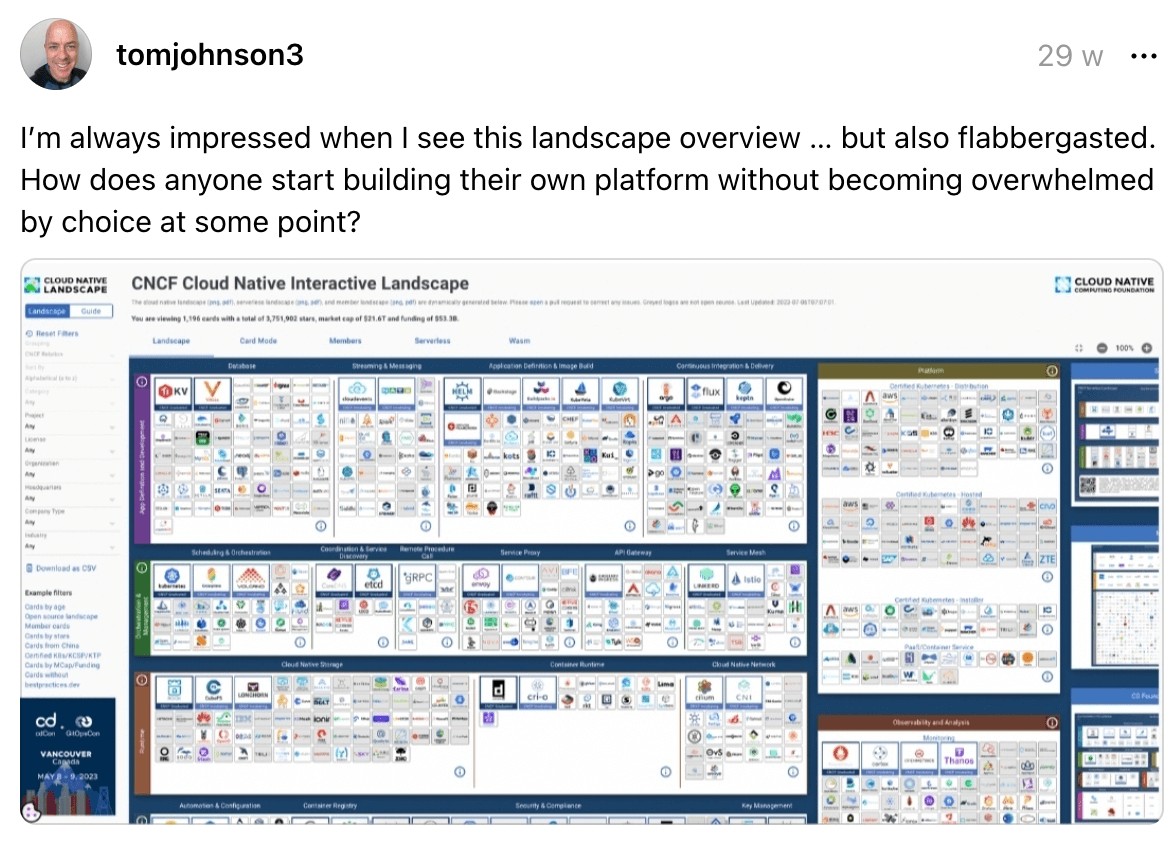

The latest CNCF report further illustrates this with its extensive listing of technologies in the cloud-native ecosystem. While having options empowers developers to tailor their tools to specific project needs, an excess of choices can be overwhelming, leading to indecision or suboptimal selections.

Research suggests that humans can effectively evaluate up to seven options when they differ along a single variable (e.g. choosing between seven green apples that differ only in price). However, the complexity increases exponentially when multiple variables are involved, especially when one alternative is better in some ways and another is better in others; in other words, when you’re evaluating the trade-offs of potential solutions.

Balancing the freedom to choose with a curated set of well-recommended options can help developers navigate this landscape more effectively. Encouraging a culture of sharing knowledge and best practices within the community can also guide developers in making choices that are both informed and manageable.

(4) Different Set of Skills

Backend development extends beyond coding server-side logic: it can encompass system architecture design, trade-off analysis for scalability, security, and performance, database selection, data modeling, API development, error handling, data validation, query optimization, and more.

With the rise of cloud computing, backend developers also need to master deploying and managing cloud-based applications, understand container technologies, and know how to use orchestration tools effectively.

Besides all these technical skills, other key skills valued in modern backend development include:

- Handling legacy systems and Architectural Technical Debt. Most backend developers work on existing ("brownfield") projects, with “greenfield” projects few and far between. Therefore, it’s important to know how to adapt and evolve an architecture to accommodate new requirements, while also addressing the accumulated architectural technical debt.

- Assembling software by choosing the right technologies. Programming used to mean writing code from scratch, line by line, building everything “in-house”. With the advent of software-as-a-service (SaaS) and open source software, development has shifted to an assembly-like approach. Building and managing composable platforms is a new skill backend developers need to master: selecting and gluing together components, libraries, and frameworks to create a seamless whole.

- Architectural design. Backend software engineers are designers too, and they play a crucial role in architecting systems that are scalable, efficient, and secure. Understanding system design is increasingly important with the rise of AI coding assistants: as coding tasks are accelerated, backend developers can focus on big-picture, high-level system design to stay relevant and productive.

- Collaboration across teams. Distributed systems are built by large, distributed teams that comprise many different stakeholders, such as Frontend Developers, DevOps, Platform Engineers, UX designers, UI designers, Product Managers, QA testers, and more. Effective communication and collaboration with such a diverse group is essential for the successful delivery of complex distributed systems.

Conclusion

Over the last two decades, backend development has evolved from relatively straightforward server-side coding to a complex field demanding a wide array of technical and soft skills. Today's backend developers face the challenge of creating sophisticated systems that meet ever-increasing user demands, managing the intricacies of modern software architectures, and making informed decisions amidst a plethora of technological options.

Entering the software development arena might be more accessible now than ever before, but excelling in backend development demands a deep understanding of various technologies and the ability to adapt to a fast-paced and continually changing landscape.

Success in this role hinges not only on technical expertise in areas like system architecture and cloud computing but also on strong collaboration and communication skills essential for working with diverse teams.

The role of the backend developer has expanded significantly, necessitating a blend of technical prowess, strategic thinking, and collaborative skills to be successful.