Guide

Tools for API Testing: The Must-Have Features


As systems have grown more complex and decentralized, APIs have become increasingly important for communicating between application components, microservices, and external integrations. This is why they must be tested thoroughly to create a stable and reliable system for end users.

When API testing is overlooked, undetected defects in API behavior can propagate through the layers of the application stack and surface as elusive, costly failures late in the development lifecycle. Such failures can be avoided if API testing starts early and is implemented properly. In addition, comprehensive API testing can lead to faster and more reliable UIs and helps detect security issues and regressions.

An important step in implementing API testing is selecting the tooling. Because different testing scenarios require different capabilities, there is no one-size-fits-all solution to API testing. Instead, teams must ensure the testing platform or platforms they select provide the required functionality. In this article, we present the key features to look for in an API testing tool to ensure successful testing.

Summary of key features for API testing tools

The table below summarizes the essential features of an effective API testing tool. In the sections that follow, we explore each feature in greater depth.

| Desired feature | Description |
|---|---|
| Response validation and assertions | Ensures that the API returns the expected data and does not expose sensitive information through robust, configurable checks |
| Environment management | Centralizes environment variables to reliably test development, staging, and production environments |
| Request creation with multiple formats and protocols | Supports diverse communication protocols, request formats, and authentication methods to facilitate efficient and flexible test design |
| Request tracing throughout the entire system | Captures comprehensive traces, logs, and UI activity in a shareable view to allow developers to track and debug issues quickly |
| Automation and CI/CD integration | Allows developers to create automated test suites and schedule trigger-based API testing in a CI/CD platform to catch regressions early and reduce manual labor |
| Performance testing capabilities | Evaluates how an API performs under load to identify bottlenecks, ensure stability, and validate error handling during traffic spikes |

Response validation and assertions

Validating API responses ensures both accuracy and reliability. The tool you select should have built-in assertions or validation functions that catch invalid data or sensitive information in responses. This includes checking status codes, response times, data formats, and specific values within the response body.

For instance, consider the following simple curl request:

$ curl -X GET "https://jsonplaceholder.typicode.com/posts/1"

The request returns the following data:

{
  "userId": 1,
  "id": 1,
  "title": "sunt aut facere repellat provident occaecati excepturi optio reprehenderit",
  "body": "quia et suscipit\nsuscipit recusandae consequuntur expedita et cum\nreprehenderit molestiae ut ut quas totam\nnostrum rerum est autem sunt rem eveniet architecto"
}

While the response is well-structured JSON, relying on manual inspection or verbose output to spot issues like a missing key or an exposed header is inefficient and error-prone. Although making the request verbose can reveal more details, it often results in an overwhelming amount of raw data, much of which may be irrelevant to the test at hand.

To address this, it’s a good practice to include assertions that validate the returned data programmatically and confirm that it meets expected conditions. For example, we can validate the data from the request above using the following script:

import axios from 'axios';
import assert from 'assert';

async function testPostsAPI() {
  try {
    const response = await axios.get('https://jsonplaceholder.typicode.com/posts/1');

    // The request should succeed
    assert.strictEqual(response.status, 200, 'Expected status code 200');

    // Required keys should be present, and text fields should be strings
    const data = response.data;
    assert.ok(data.userId !== undefined, 'Missing "userId" field');
    assert.ok(data.id !== undefined, 'Missing "id" field');
    assert.ok(typeof data.title === 'string', 'Invalid or missing "title" field');
    assert.ok(typeof data.body === 'string', 'Invalid or missing "body" field');

    console.log('All response assertions passed');
  } catch (err) {
    console.error('Test failed:', err.message);
    // Exit with a nonzero code so that scripts and CI jobs can detect the failure
    process.exitCode = 1;
  }
}

testPostsAPI();

In this script, we verify that the request was successful, ensure that all required keys are present in the JSON response, and confirm that the title and body are strings. If the API returns static or deterministic data, we can go a step further and assert that the exact text values match the expected output.
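As the number of fields grows, the individual checks can be driven by a small schema instead of one assertion per field. The following is a minimal sketch under stated assumptions: `postSchema`, `sensitiveKeys`, and `validateBody` are hypothetical names that do not belong to any particular tool, and the deny-list of sensitive keys is purely illustrative.

```javascript
// Hypothetical schema for the /posts/1 response: field name -> expected typeof result.
const postSchema = { userId: 'number', id: 'number', title: 'string', body: 'string' };

// Hypothetical deny-list of keys that should never appear in a response body.
const sensitiveKeys = ['password', 'token', 'ssn'];

// Returns a list of human-readable problems; an empty list means the body passed.
function validateBody(data, schema, forbidden = []) {
  const problems = [];
  for (const [field, expectedType] of Object.entries(schema)) {
    if (!(field in data)) {
      problems.push(`Missing "${field}" field`);
    } else if (typeof data[field] !== expectedType) {
      problems.push(`"${field}" should be ${expectedType}, got ${typeof data[field]}`);
    }
  }
  for (const key of forbidden) {
    if (key in data) problems.push(`Sensitive field "${key}" is exposed`);
  }
  return problems;
}

// Sample bodies stand in for real responses, so no network call is needed here.
const goodBody = { userId: 1, id: 1, title: 'hello', body: 'world' };
const badBody = { userId: '1', id: 1, title: 'hello', password: 'hunter2' };

console.log(validateBody(goodBody, postSchema, sensitiveKeys)); // no problems
console.log(validateBody(badBody, postSchema, sensitiveKeys));  // three problems
```

Returning a list of problems rather than throwing on the first one lets a single run report every mismatch in the response.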

Assertions should also be used to verify that the API fails as expected. For example, if a user sends a request to a protected endpoint without the required authentication header, the API should return an error response with an appropriate status code (e.g., 401 or 403) and message.

Proper assertions are also needed to catch edge cases. For example, a test should validate the API’s behavior if it expects an integer ID in the body but instead receives a string. In a surprising number of cases, these types of rudimentary checks are not consistently enforced at the API level, which can result in requests failing unpredictably.
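Negative-path checks like these can be written in the same style as the success case. The sketch below is illustrative only: `checkRejection` is a hypothetical helper, the responses are simulated objects rather than real API calls, and both the `error` field name and the 400/422 status codes are assumptions about how a given API reports invalid input.

```javascript
// Checks that a response represents an expected rejection.
// `response` is a plain { status, body } object, e.g. built from a fetch or axios result.
function checkRejection(response, allowedStatuses) {
  const problems = [];
  if (!allowedStatuses.includes(response.status)) {
    problems.push(`Expected one of ${allowedStatuses.join(', ')}, got ${response.status}`);
  }
  // Assumption: the API reports failures in a non-empty string "error" field.
  if (!response.body || typeof response.body.error !== 'string' || response.body.error === '') {
    problems.push('Expected a non-empty "error" message in the body');
  }
  return problems;
}

// Simulated responses standing in for real calls.
const missingAuth = { status: 401, body: { error: 'Missing API key' } };
const stringId = { status: 200, body: { id: '42' } }; // the API accepted a bad ID: a defect

console.log(checkRejection(missingAuth, [401, 403])); // []
console.log(checkRejection(stringId, [400, 422]));    // two problems
```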

Environment management

Real-world applications are deployed across multiple environments with different configurations, and APIs might function differently depending on the specific configuration. Staging environments might include verbose logging or mock services, while production environments might be optimized for security and performance. It is critical to ensure that APIs work correctly in each of these environments.

Manually updating the configurations while switching between environments is inefficient and error-prone. For this reason, it is important for a testing tool to support centralized management of environment-specific variables and configurations, such as:

- Base URLs

- Authentication credentials

- API tokens

- Headers

- Request bodies

This capability allows developers to test the same API across different environments without changing the test script. It also helps pinpoint environment-specific issues during testing and ensures that tests execute in the correct context without cross-contaminating data or misrouting requests.
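A minimal sketch of this idea in plain JavaScript follows. The environment names, URLs, and keys are hypothetical placeholders, as is the `TEST_ENV` variable name; in practice, secrets would come from environment variables or a secrets manager rather than source code.

```javascript
// Hypothetical environment definitions (placeholder URLs and keys).
const environments = {
  development: { baseUrl: 'http://localhost:3000', apiKey: 'dev-key' },
  staging: { baseUrl: 'https://staging.example.com', apiKey: 'staging-key' },
  production: { baseUrl: 'https://api.example.com', apiKey: 'prod-key' },
};

// Builds the URL and headers for a request from the selected environment,
// so the test itself never hard-codes either.
function buildRequest(envName, path) {
  const env = environments[envName];
  if (!env) throw new Error(`Unknown environment: ${envName}`);
  return {
    url: new URL(path, env.baseUrl).toString(),
    headers: { 'x-api-key': env.apiKey, 'Content-Type': 'application/json' },
  };
}

// The same test code targets a different environment by changing one variable.
const targetEnv = process.env.TEST_ENV || 'staging';
console.log(buildRequest(targetEnv, '/users/2').url);
```

Failing loudly on an unknown environment name helps avoid the misrouted requests described above.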

Request creation with multiple formats and protocols

To support the complexity and diversity of modern systems, an API testing tool should be able to handle multiple protocols and API styles (HTTP/HTTPS, gRPC, GraphQL, WebSockets, etc.) and different request body formats, including JSON, XML (for legacy systems), and form data. It should also handle authentication mechanisms like API keys, OAuth tokens, and JSON Web Tokens (JWTs) to ensure secure access and proper authorization during testing.

Here’s an example of a curl request that includes headers and a JSON body:

curl -X POST "https://reqres.in/api/users" \
  -H "x-api-key: reqres-free-v1" \
  -H "Content-Type: application/json" \
  -d '{"name": "Alice", "job": "QA Engineer"}'

Although curl can be used to validate authentication methods like API keys, OAuth tokens, and JWTs in manual API testing and simple automation scripts, it lacks a graphical user interface and built-in capabilities for advanced test management, reporting, and orchestration. This makes it less suitable for large-scale automated testing in complex distributed environments without additional tooling or wrappers.

For example, when testing multiple endpoints with various test cases, managing all the requests with different data types is challenging using only a command-line tool. Consider the case of testing the same endpoint multiple times, with cases like:

- Valid inputs

- Missing fields

- Incorrect data types

- Different authentication headers

- Edge cases


With curl, you would need to manually rewrite or copy-paste the command each time, changing the request body and headers for each test. Handling different data formats or injecting dynamic variables requires additional scripting or manual effort, adding complexity and increasing the risk of errors.
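One common way to reduce this duplication in a script is a table of test cases in which each entry overrides only what differs from the valid request. The sketch below reuses the ReqRes request from the curl example. The case names and `toFetchOptions` are hypothetical, and the expected status codes are assumptions for illustration rather than documented ReqRes behavior.

```javascript
const validBody = { name: 'Alice', job: 'QA Engineer' };
const validHeaders = { 'x-api-key': 'reqres-free-v1', 'Content-Type': 'application/json' };

// Each case overrides only what differs from the valid request.
// The expected status codes are assumptions; use the values your API documents.
const cases = [
  { name: 'valid input', expectedStatus: 201 },
  { name: 'missing field', body: { name: 'Alice' }, expectedStatus: 400 },
  { name: 'incorrect data type', body: { name: 42, job: 'QA Engineer' }, expectedStatus: 400 },
  { name: 'missing API key', headers: { 'Content-Type': 'application/json' }, expectedStatus: 401 },
];

// Turns a case into an options object that could be passed to fetch(url, options).
function toFetchOptions(testCase) {
  return {
    method: 'POST',
    headers: testCase.headers ?? validHeaders,
    body: JSON.stringify(testCase.body ?? validBody),
  };
}

for (const testCase of cases) {
  const options = toFetchOptions(testCase);
  console.log(`${testCase.name}: expect ${testCase.expectedStatus}, body ${options.body}`);
  // A real run would call: await fetch('https://reqres.in/api/users', options)
}
```

Adding a new scenario then means adding one entry to `cases` rather than copying and editing a command.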

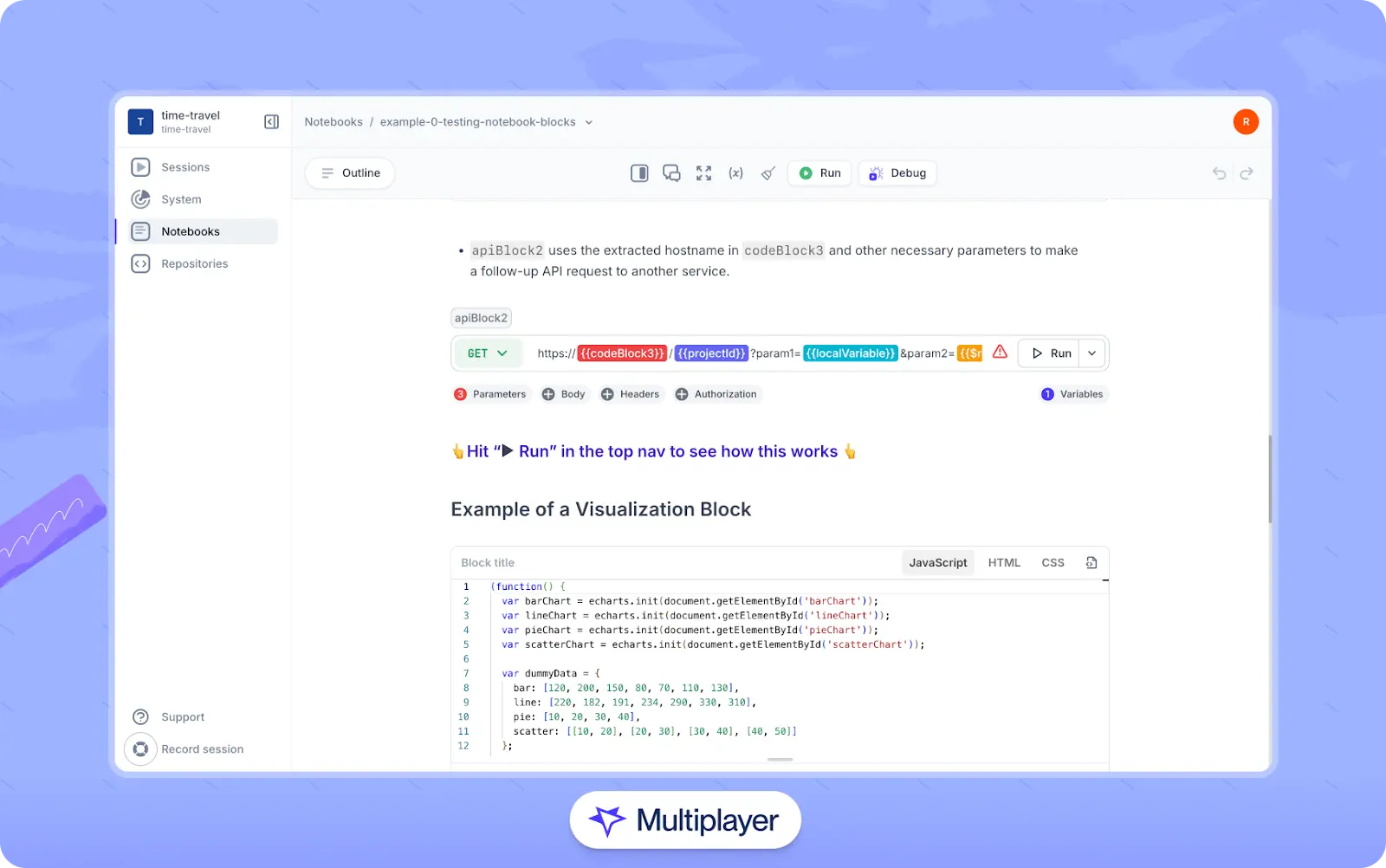

Tools like Multiplayer’s notebooks address these issues by providing an integrated environment to design, test, and debug API workflows without relying on external tools or manual scripts. Users can create and chain API requests, manage variables centrally, and execute code in organized blocks. Notebooks also support live debugging across systems and offer AI-assisted generation of API calls from natural language descriptions.

Multiplayer’s notebooks

Request tracing throughout the entire system

In distributed systems, pinpointing the source of a failure can be challenging. Issues may originate from the frontend, backend, or the integration layer between them. User-facing discrepancies can arise where an API performs correctly in isolation but exhibits unexpected behavior when integrated with the frontend due to differences in data formats, timing, or state dependencies.

To debug such issues effectively, you need distributed tracing, which provides end-to-end visibility into a request’s path across different components within a distributed system. However, implementing distributed tracing effectively presents challenges. Each service must be instrumented with tracing libraries that propagate context across HTTP or messaging protocols and store trace data reliably.

Here’s how distributed tracing typically works:

- Instrumentation: Each service in the system embeds a tracing library within its codebase to emit trace data at key points, such as when receiving a request or calling another service.

- Correlation IDs: Every incoming request is assigned a unique identifier that is propagated with the request as it moves across services. This ID is attached to logs and traces, making it easier to stitch the request’s flow together while debugging.

- Trace context propagation: The correlation ID and other trace metadata persist as the request passes from one service to another.

- Service maps: Once traces are successfully collected, visualization tools generate service maps that show how different components interact.
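The correlation ID and propagation steps above can be sketched in a few lines. This is a simplified illustration, not how any particular tracing library works: the `x-correlation-id` header name and the helper functions are hypothetical, and tracing libraries such as OpenTelemetry typically propagate the W3C `traceparent` header instead.

```javascript
// Hypothetical header name chosen for this sketch.
const CORRELATION_HEADER = 'x-correlation-id';

// Generates a random hex ID. Math.random is adequate for a sketch, not for security.
function newId(length = 16) {
  let id = '';
  while (id.length < length) {
    id += Math.floor(Math.random() * 16).toString(16);
  }
  return id;
}

// Reuses the caller's ID when present; otherwise starts a new one.
function getCorrelationId(incomingHeaders) {
  return incomingHeaders[CORRELATION_HEADER] || newId();
}

// Copies the ID onto the headers of a downstream call.
function propagate(correlationId, outgoingHeaders = {}) {
  return { ...outgoingHeaders, [CORRELATION_HEADER]: correlationId };
}

// Tags a log line so that logs from different services can be joined on the ID.
function logLine(service, correlationId, message) {
  return `[${service}] [${correlationId}] ${message}`;
}

// Simulated flow: the gateway receives a request with no ID, then calls "orders".
const gatewayId = getCorrelationId({});
const downstreamHeaders = propagate(gatewayId, { accept: 'application/json' });
const ordersId = getCorrelationId(downstreamHeaders);

console.log(logLine('gateway', gatewayId, 'received GET /checkout'));
console.log(logLine('orders', ordersId, 'loading cart')); // same ID as the gateway line
```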

For a more in-depth look at distributed tracing, see our free guide.

In the absence of distributed tracing, the debugging process becomes fragmented. Developers must use screen recorders to capture the issue, manually save the browser console and network logs, and then attach them to a Jira ticket. Other team members must then reproduce the issue, review the logs, and figure out what went wrong. In the best-case scenario, the bug is resolved through a process that requires a lot of manual, time-consuming work. In many cases, however, the issue cannot be reliably reproduced, the logs lack sufficient context, and valuable debugging time is lost in back-and-forth communication.

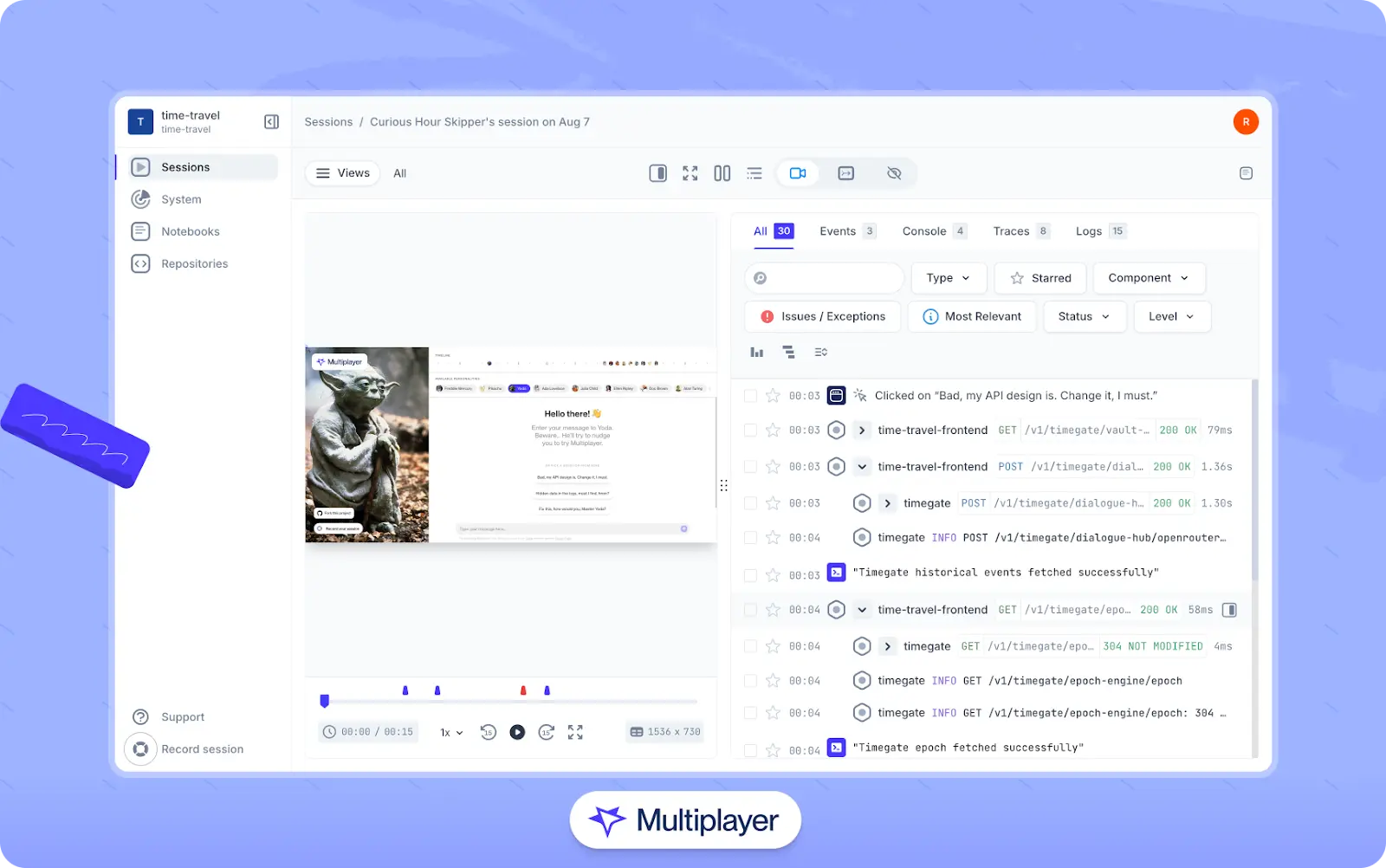

Multiplayer’s full-stack session recordings alleviate these pain points by bringing the bug reporting and resolution process into a single system. They utilize data collected from OpenTelemetry to allow teams to:

- Record sessions, capturing the exact steps to reproduce an issue.

- Collect comprehensive data by gathering frontend screens, backend traces, metrics, logs, and full request/response content and headers to provide full visibility into the problem.

- Share information efficiently because all session recordings and traces are centralized. A single invitation to a team member replaces all the back-and-forth and debugging noise.

- Auto-generate test scripts by generating executable notebooks from session replays that include runnable test scripts with real API calls, payloads, and code logic.

Multiplayer’s full-stack session recordings

Automation and CI/CD integration

Running the same test scripts repeatedly as APIs evolve is time-consuming. When test runs become repetitive and require significant manual effort, it is often an indicator that automation should be introduced into the testing strategy. Choosing a tool that supports both writing and executing automated test scripts significantly improves testing workflows and frees up time for higher-value tasks.

The tool should support scripting in a familiar language like JavaScript so that developers can write test scripts, organize test suites, and maintain tests easily over time. In addition, it should integrate with CI/CD platforms like GitHub Actions, Jenkins, or Travis CI so that tests are triggered automatically whenever code or APIs change and regressions are caught early.

Here is a simple example using Node.js and assert to test the ReqRes API:

import assert from 'assert';
import fetch from 'node-fetch';

async function validateUserDetails() {
  try {
    // Send the same API key header as the curl example above
    const response = await fetch('https://reqres.in/api/users/2', {
      headers: { 'x-api-key': 'reqres-free-v1' },
    });

    // Assert HTTP status
    assert.strictEqual(response.status, 200);

    const data = await response.json();

    // Validate structure
    assert.ok(data.hasOwnProperty('data'));
    assert.ok(data.hasOwnProperty('support'));

    const user = data.data;

    // Validate user fields
    assert.strictEqual(user.id, 2);
    assert.strictEqual(typeof user.email, 'string');
    assert.ok(user.email.includes('@'));
    assert.strictEqual(typeof user.first_name, 'string');
    assert.strictEqual(typeof user.last_name, 'string');
    assert.strictEqual(typeof user.avatar, 'string');
    assert.ok(/^https?:\/\//.test(user.avatar));

    const support = data.support;

    // Validate support info
    assert.strictEqual(typeof support.url, 'string');
    assert.ok(/^https?:\/\//.test(support.url));
    assert.strictEqual(typeof support.text, 'string');

    console.log('All assertions passed!');
  } catch (err) {
    console.error('Test failed:', err.message);
    // A nonzero exit code is what allows the CI job to fail
    process.exitCode = 1;
  }
}

validateUserDetails();

This test can then be integrated into a CI pipeline so that unintended changes in the API, such as missing keys or type mismatches, are caught early. If a regression occurs, the script exits with a nonzero code, the pipeline fails, and the team is notified immediately.
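When several such checks run together, a small runner can execute all of them and signal failure through the process exit code, which is the signal most CI platforms use to mark a job as failed. The following is a minimal sketch under stated assumptions: `runSuite` and the placeholder checks are hypothetical, and a real suite would call the API as in the script above.

```javascript
// Runs each named async check, logs the outcome, and returns the failures.
async function runSuite(tests) {
  const failures = [];
  for (const [name, testFn] of Object.entries(tests)) {
    try {
      await testFn();
      console.log(`PASS ${name}`);
    } catch (err) {
      failures.push({ name, message: err.message });
      console.error(`FAIL ${name}: ${err.message}`);
    }
  }
  return failures;
}

// Placeholder checks; real ones would send requests and run assertions.
const suite = {
  'status is 200': async () => {},
  'user has an email': async () => {},
};

runSuite(suite).then((failures) => {
  console.log(`${failures.length} failing check(s)`);
  // Any failure results in a nonzero exit code for the CI job.
  if (failures.length > 0) process.exitCode = 1;
});
```

Collecting failures instead of stopping at the first one gives the team a complete picture of a regression in a single pipeline run.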

Performance testing capabilities

Systems that support many users must ensure that their APIs can handle heavy traffic and concurrent user operations. Therefore, it is critical to include a testing tool in your workflow that can simulate load commensurate with expected production traffic to measure API performance under stress. Key metrics to track include response time, throughput, and resource utilization.
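To make these metrics concrete, the sketch below computes the average response time, the 95th-percentile response time, and throughput from a list of recorded response times. The sample values are made up for illustration, and `percentile` and `summarize` are hypothetical helpers. Resource utilization is not covered here, because it is measured on the servers rather than derived from client-side timings.

```javascript
// Nearest-rank percentile over a list of response times in milliseconds.
function percentile(samplesMs, p) {
  const sorted = [...samplesMs].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Summarizes one test window: request count, latency, and throughput.
function summarize(samplesMs, testDurationSeconds) {
  const total = samplesMs.reduce((sum, ms) => sum + ms, 0);
  return {
    requests: samplesMs.length,
    averageMs: total / samplesMs.length,
    p95Ms: percentile(samplesMs, 95),
    throughputPerSecond: samplesMs.length / testDurationSeconds,
  };
}

// Made-up sample: ten requests completed during a two-second window.
const samples = [120, 95, 110, 480, 105, 98, 130, 101, 99, 115];
console.log(summarize(samples, 2));
```

In this sample, the single 480 ms outlier dominates the 95th percentile while the average stays much lower, which is one reason percentiles are usually tracked alongside averages.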

Performance testing should focus on the endpoints that experience high levels of requests, are exposed externally to users or third parties, and have strict service-level agreements (SLAs) for response times and availability. Tools used for this purpose should support distributed load generation to simulate realistic usage from multiple geographic regions, helping uncover issues that only arise at scale.

Modern performance testing tools apply patterns such as ramp-up (gradually increasing virtual users) and ramp-down (gradually decreasing them) to observe how the system handles spikes, recovers, and scales. Tests should encompass both expected load conditions and simulate worst-case scenarios. This helps the team recognize any weak points in the system architecture and plan for resource allocation, improve scalability, and put recovery mechanisms in place in case the system becomes overwhelmed.
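A ramp-up and ramp-down profile can be described as a list of stages, each with a duration and a target number of virtual users. The sketch below computes the planned number of virtual users at a given moment under the assumption of linear interpolation within each stage. The stage values and `virtualUsersAt` are hypothetical; load testing tools such as k6 express a similar idea through their own stage configuration.

```javascript
// Hypothetical load profile: ramp up to 100 virtual users, hold, then ramp down.
const stages = [
  { durationSeconds: 60, target: 100 },
  { durationSeconds: 120, target: 100 },
  { durationSeconds: 60, target: 0 },
];

// Returns the planned number of virtual users at `elapsedSeconds`,
// interpolating linearly within each stage and starting from zero users.
function virtualUsersAt(stages, elapsedSeconds) {
  let stageStart = 0;
  let previousTarget = 0;
  for (const stage of stages) {
    const stageEnd = stageStart + stage.durationSeconds;
    if (elapsedSeconds <= stageEnd) {
      const progress = (elapsedSeconds - stageStart) / stage.durationSeconds;
      return Math.round(previousTarget + (stage.target - previousTarget) * progress);
    }
    stageStart = stageEnd;
    previousTarget = stage.target;
  }
  return previousTarget; // after the last stage, stay at its target
}

for (const t of [0, 30, 60, 150, 210, 240]) {
  console.log(`${t}s -> ${virtualUsersAt(stages, t)} virtual users`);
}
```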

Open-source tools for performance testing include k6 and Apache JMeter, both of which support CI/CD integration and provide detailed performance statistics.

Last thoughts

API testing helps developers create stable, efficient, and reliable systems. Before selecting an API testing tool, teams must determine the current system's requirements, plan for future growth, and select a suite of tools that offer the right balance of functionality, scalability, and integration with existing workflows.

Tools like Multiplayer provide many of these features in a single tool that fits into your existing testing workflow, allowing teams to combine traditional API testing functionality with advanced, real-time debugging capabilities.

API testing is not just about picking a single powerful tool; it’s about selecting one that aligns with your team’s workflow, grows with your system, and simplifies testing, development, and debugging from end to end.