
API Testing Automation: Best Practices & Examples


Modern software systems depend heavily on APIs to integrate services and deliver functionality. However, guaranteeing API correctness, performance, and security in distributed environments and at scale presents a significant challenge because the pace and complexity of development far exceed the capabilities of manual testing. Automated API testing addresses this gap by enabling continuous, reliable validation across environments and development stages.

This article provides best practices for strategic automation, including test isolation, security, data management, and CI/CD integration. It also highlights different types of API testing and discusses common challenges such as flakiness and dynamic data.

Summary of API testing automation best practices

The table below summarizes the best practices discussed in this article.

| Best practice | Description |
|---|---|
| Start early and focus on high-value APIs | Target critical APIs, such as auth, billing, and third-party integrations, where failures have real impact. |
| Write isolated and assertive tests | Keep tests focused, avoid external dependencies, and use strong assertions to reduce flakiness. |
| Mock, stub, and virtualize dependencies | Replace unreliable or slow systems with mocks to improve test speed, reliability, and control. |
| Validate authentication and authorization mechanisms | Test auth, access control, and full integration workflows to ensure secure and correct behavior across systems. |
| Use data-driven tests with clean parameterization | Vary inputs using structured data, avoid hardcoded values, and keep test logic clean and reusable. |
| Monitor, debug, and evolve the test suite | Track flaky tests, log issues clearly, and update tests as APIs change to keep coverage relevant. |
| Capture and document bugs | Use full-stack session recordings, detailed request/response data, and environment context to reproduce test failures reliably and reduce time to resolution. |

Common challenges

Before diving into best practices, let’s examine some common API testing automation challenges.

When automating API tests, failures that don’t point to real problems waste time and weaken reliability. Infrastructure instability, unpredictable data, and design inconsistencies often introduce false positives, missed bugs, or brittle tests that fail for the wrong reasons. Recognizing these common pain points helps teams design more resilient and maintainable test suites.

Here are three factors that can hinder test effectiveness.

Infrastructure issues

Infrastructure flakiness is one of the most common reasons teams lose trust in their test suites. Teams start ignoring failures entirely because “those tests flake sometimes,” and that is dangerous: once trust is gone, automation becomes a source of noise rather than signal.

Shared staging environments often get reset mid-run, become misconfigured, or quietly drift from production. Tests that pass locally suddenly fail in CI due to minor differences or race conditions.

In addition, relying on real third-party APIs, live databases, or even internal microservices introduces significant risk. Test jobs often fail because of third-party rate limits, expired OAuth tokens, or an external sandbox going down.

Poor data integrity

Reliable API testing requires intentional control of the data lifecycle; without it, your suite becomes a collection of guesses rather than validations. Poor test data silently breaks otherwise well-structured tests, especially when dealing with dynamic values, versioned APIs, or shared state across environments.

APIs often return time-sensitive, stateful, or auto-generated fields such as timestamps, UUIDs, tokens, or calculated totals. Assertions that compare entire responses verbatim break whenever one of these values changes. Tests also become brittle when they depend on pre-existing records that can change, expire, or be deleted. In shared test environments, one team’s cleanup is another team’s mysterious failure.

If your system supports multiple API versions, your test suite must cover each of them. Versions can differ in field names, behavior, or response formats. Something that passes in v1.0 might break in v2.1, and if you test only one version, that regression goes unnoticed.

Design flaws

Design issues silently undermine automation from the start by increasing test setup friction, introducing ambiguous behavior, or forcing QA to reverse-engineer the API just to understand what “correct” looks like.

Few APIs today are unauthenticated or simple. They use OAuth2, signed JWTs, rotating secrets, or complex multi-tenant auth flows, each of which adds friction to test setup and execution. Common sources of test suite failures include tokens that expire mid-run, test users with inadequate permissions, and a hardcoded admin token that is valid only in staging.
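One common way to stop tokens from expiring mid-run is to request them through a small cache that refreshes shortly before expiry, instead of hardcoding a token. The minimal sketch below makes a few assumptions. `fetch_token` is a hypothetical callable, for example a client-credentials request to your identity provider, that returns a token and its lifetime in seconds. The 60-second refresh margin is an arbitrary choice.

```python
import time
from typing import Callable, Optional, Tuple


class TokenProvider:
    """Caches an access token and refreshes it shortly before it expires."""

    def __init__(self, fetch_token: Callable[[], Tuple[str, float]],
                 refresh_margin: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch_token = fetch_token  # hypothetical: returns (token, lifetime in seconds)
        self._refresh_margin = refresh_margin
        self._clock = clock  # injectable so tests can control time
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        """Return a usable token, fetching a new one if it is missing or about to expire."""
        if self._token is None or self._clock() >= self._expires_at - self._refresh_margin:
            token, lifetime = self._fetch_token()
            self._token = token
            self._expires_at = self._clock() + lifetime
        return self._token
```

Tests then call `provider.get()` before each request and put the result in the Authorization header. The margin must be shorter than the token lifetime. Otherwise, every call triggers a refresh.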

In addition, API specs rarely define what happens when inputs are empty, at or beyond their maximum size, or logically invalid (e.g., deleting an already-deleted resource). If your tests cover only the happy path, they validate only ideal scenarios and miss the failures that real clients trigger.
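Edge cases are easier to cover systematically when you derive them from a field’s documented limits. This sketch assumes a string field with a known maximum character length. The labels and values are illustrative, not exhaustive.

```python
from typing import Dict


def boundary_strings(max_length: int) -> Dict[str, str]:
    """Return labelled edge-case values for a string field capped at `max_length` characters."""
    if max_length < 1:
        raise ValueError("max_length must be at least 1")
    return {
        "empty": "",
        "whitespace_only": " ",
        "single_char": "a",
        "at_max": "a" * max_length,
        "over_max": "a" * (max_length + 1),
        # Multi-byte characters: same character count, twice the bytes in UTF-8
        "non_ascii_at_max": "é" * max_length,
    }
```

Each entry would become one parameterized test case. Take the expected outcome (accepted, or rejected with a 4xx status) from the API specification rather than from the current behavior.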

Finally, many teams treat API documentation as optional or forget to update it. When documentation is missing, out of date, or ambiguous, test writers are left guessing at expected behavior. Contract drift is a related and common problem in microservice architectures. The API changes (intentionally or not), but no one tells the consumers or updates the tests. A renamed field, removed attribute, or changed enum value can quietly break downstream systems.
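A lightweight drift check compares each response against the fields and enum values that consumers rely on. Contract-testing tools and OpenAPI validators do this more thoroughly. This standard-library sketch assumes you maintain the expected fields and enum values by hand.

```python
from typing import Dict, List, Set


def find_contract_drift(payload: dict, required: Set[str],
                        enums: Dict[str, Set[str]]) -> List[str]:
    """Report missing fields, unknown fields, and out-of-range enum values in a response."""
    problems = []
    known = required | set(enums)
    for field in sorted(required - set(payload)):
        problems.append(f"missing field: {field}")
    for field in sorted(set(payload) - known):
        problems.append(f"unexpected field: {field}")  # often a renamed field
    for field in sorted(enums):
        if field in payload and payload[field] not in enums[field]:
            problems.append(f"unexpected value for {field}: {payload[field]!r}")
    return problems
```

A renamed field shows up twice, as one missing field and one unexpected field. Whether unknown fields should fail the test is a policy choice. Additive changes are usually backward compatible, so you might log them instead.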

Types of API testing

Different types of tests validate various aspects of an API’s behavior. Understanding these categories will help you design a test suite that catches regressions and ensures long-term reliability across use cases, environments, and integration points.

| Testing type | What it does | What to look for |
|---|---|---|
| Functional | Verifies that each API endpoint behaves as expected under normal conditions | Correct HTTP status codes, accurate response payloads, field-level validation, and business rule enforcement |
| Performance and load | Evaluates how the API performs under stress or high volume | Latency, throughput, error rate under load, and system behavior during spikes or degradation |
| Security | Ensures that the API is protected against unauthorized access and common vulnerabilities | Authentication (OAuth, API keys), authorization, rate limiting, injection prevention, and data leakage |
| Contract | Validates that the API conforms to its specification (e.g., OpenAPI, GraphQL schema) | Schema validation, required fields, enum values, data types, version compliance, and backward compatibility |
| Integration | Tests the API’s interaction with other services, databases, or external systems | System-level workflows, data consistency across services, API dependencies, and integration failure behavior |
| Error/fault tolerance | Evaluates how the API behaves when dependencies fail or internal conditions are invalid | Graceful degradation, clear error messages, circuit breakers, retries, and fallback logic |
| Negative and edge cases | Sends invalid, malformed, or extreme input to test the system’s robustness | 4xx errors, validation failures, payload size limits, timeouts, unsupported operations, and logical boundary tests |

Not every project requires all testing types. Instead, your testing strategy should reflect your system’s architecture, risk profile, and available resources. For example, performance testing is likely most critical for a public-facing service, while contract testing is beneficial in a microservices environment. Due to team and resource constraints, smaller teams might prioritize functional and security tests for critical endpoints. The key is to apply the right mix of tests that maximizes value without overextending your efforts.

Start early and focus on high-value APIs

Avoid the temptation to cover every API from the outset; instead, prioritize your efforts on high-risk APIs to maximize impact and mitigate the most significant risks. To effectively prioritize, consider endpoints that handle:

- Authentication and authorization flows: Login, token refresh, and permission checks

- Transactional APIs: Any APIs that deal with currency, provisioning, or state changes

- External integrations: APIs that depend on third-party services or internal microservices

- Stateful or cross-system workflows: Onboarding, billing, fulfillment, etc.

In addition, focus on business-critical endpoints, revenue-generating APIs, and APIs that underpin your application's fundamental operations. These include:

- APIs that directly serve your users and impact their experience and satisfaction

- Endpoints that handle sensitive data (e.g., PII, financial information, and healthcare records)

- Endpoints that are subject to strict regulations like GDPR or HIPAA, where noncompliance can result in hefty fines and legal repercussions

Write isolated and assertive tests

Each test should validate one specific behavior. When a test exercises multiple behaviors at once (e.g., CRUD), a failure in any step makes it unclear where the real issue lies. Moreover, if these steps have side effects, they may unexpectedly break other tests.

Design tests that focus on a single operation and assertion with the minimal setup data required for that scenario. Consider the test script below:

```python
from unittest.mock import patch

import pytest
import requests

API_BASE = "https://api.example.com"


@pytest.fixture
def user_data():
    return {
        "id": "user_id",
        "email": "user@example.com",
        "name": "Test User",
    }


def fetch_user(user_id):
    response = requests.get(f"{API_BASE}/users/{user_id}")
    response.raise_for_status()
    return response.json()


@patch("requests.get")
def test_fetch_user_returns_expected_email(mock_get, user_data):
    mock_get.return_value.status_code = 200
    mock_get.return_value.json.return_value = user_data

    user = fetch_user(user_data["id"])

    assert user["email"] == "user@example.com", (
        f"Expected email 'user@example.com', got '{user['email']}'"
    )
```

This test asserts only the expected value of a single field (“email”). If it fails, the output is specific and actionable: It tells you exactly what was expected and what was returned. If all tests are written in this manner, the suite will be easier to debug, parallelize, and maintain over time.

Use strong, meaningful assertions

Shallow assertions, like checking only for a “200 OK” status code, can mask defects by passing tests even when APIs return incorrect data, omit required fields, or violate business logic. Strong assertions validate the API’s behavior comprehensively so that tests fail when the system misbehaves and pass only when it meets expectations. For example:

```python
import requests


def test_deactivate_user():
    response = requests.post("https://api.example.com/v1/users/123/deactivate")

    # Weak assertion (avoid relying on this alone): a 200 says nothing about the outcome
    # assert response.status_code == 200

    # Strong assertions: verify the status code, the business outcome, and key fields
    assert response.status_code == 200, f"Expected 200 OK, got {response.status_code}"
    body = response.json()
    assert body["status"] == "inactive", "User must be deactivated"
    assert body["user_id"] == 123, "Incorrect user_id"
```

The strong version checks the status code, the business outcome, and a key identifying field. Prioritize business behavior (for example, “the user is now inactive”) over superficial details such as “the response includes user_id.”

You can also strengthen your assertions using schema validation tools such as JSON Schema or OpenAPI validators, which help catch subtle structural changes:

```python
import jsonschema
import requests

USER_SCHEMA = {
    "type": "object",
    "properties": {
        "user_id": {"type": "integer"},
        "status": {"type": "string"},
    },
    "required": ["user_id", "status"],
}


def test_user_creation():
    response = requests.post("https://api.example.com/v1/users", json={"email": "test@example.com"})
    assert response.status_code == 201, "Expected 201 Created"
    jsonschema.validate(response.json(), USER_SCHEMA)
    assert response.json()["status"] == "active", "User must be active"
```

Example JSON schema validation for an API test

A precise assertion gives you high confidence that a test passes only when the system behaves correctly and fails when it doesn't.

Avoid test brittleness

While assertions should be specific, they must also tolerate expected variations in non-critical fields. For example, timestamps, UUIDs, or dynamic tokens shouldn’t break the test unless the test’s purpose is to explicitly verify a particular format or the presence of a specific value. To avoid these false failures, use pattern-based matching for dynamic values, exclude volatile fields from deep equality checks, and keep tests focused on functional intent rather than internal implementation details.
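The sketch below applies the first two techniques to a single response. It checks volatile fields against a format pattern, then excludes them from an exact comparison of everything else. The field names `id` and `created_at` are assumptions for illustration.

```python
import re

UUID_PATTERN = re.compile(
    r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}", re.IGNORECASE
)
ISO_8601_TIMESTAMP = re.compile(r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(\.\d+)?(Z|[+-]\d{2}:\d{2})")

# Hypothetical volatile fields: checked by format, excluded from the equality check
VOLATILE_FIELDS = {"id": UUID_PATTERN, "created_at": ISO_8601_TIMESTAMP}


def assert_response_matches(actual: dict, expected_stable: dict) -> None:
    """Check volatile fields by pattern, then compare the remaining fields exactly."""
    for field, pattern in VOLATILE_FIELDS.items():
        value = actual.get(field)
        assert isinstance(value, str) and pattern.fullmatch(value), (
            f"{field!r} has an unexpected format: {value!r}"
        )
    stable = {k: v for k, v in actual.items() if k not in VOLATILE_FIELDS}
    assert stable == expected_stable, f"Stable fields differ: {stable} != {expected_stable}"
```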

Mock, stub, and virtualize dependencies

Relying on live third-party APIs or backend dependencies during testing introduces latency, instability, and non-deterministic behavior. Rather than calling real endpoints, which may be rate-limited, unavailable, or subject to changing behavior, you can use mock servers or stubbed responses that replicate the contract without real execution. Key scenarios to test using mocks include the following:

- Timeouts: Validate that clients enforce timeouts and degrade gracefully.

- Transient server errors: Test retry strategies with backoff and jitter.

- Malformed or unexpected payloads: Ensure that schema validation and error reporting behave as expected.

- Circuit breakers and fallback logic: Trigger cascading failure conditions to verify that bulkheads or alternate services engage.
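The next sketch illustrates the transient-error scenario. It retries a call with exponential backoff and “full jitter” (a random delay between zero and a growing cap), then tests the retry logic with `unittest.mock.Mock` objects instead of a real upstream. `TransientError` and the `send` callable are hypothetical stand-ins for your HTTP client wrapper. Injecting `sleep` keeps the tests instant.

```python
import random
import time
from typing import Callable
from unittest.mock import Mock


class TransientError(Exception):
    """Hypothetical exception your HTTP wrapper raises for retryable failures (e.g., 503)."""


def call_with_retries(send: Callable[[], dict], max_attempts: int = 4,
                      base_delay: float = 0.2,
                      sleep: Callable[[float], None] = time.sleep) -> dict:
    """Call `send`, retrying transient failures with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return send()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # Full jitter: wait a random time between 0 and base_delay * 2^(attempt - 1)
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))


def test_retries_transient_errors_then_succeeds():
    # The mock fails twice, then returns a payload, standing in for a flaky upstream
    send = Mock(side_effect=[TransientError(), TransientError(), {"status": "ok"}])
    sleep = Mock()
    assert call_with_retries(send, sleep=sleep) == {"status": "ok"}
    assert send.call_count == 3
    assert sleep.call_count == 2


def test_gives_up_after_max_attempts():
    send = Mock(side_effect=TransientError())
    try:
        call_with_retries(send, max_attempts=3, sleep=Mock())
    except TransientError:
        pass
    else:
        raise AssertionError("expected TransientError after the final attempt")
    assert send.call_count == 3
```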

For integration-level tests involving multiple teams, you can virtualize entire API backends using mocking tools. These virtual services run as local or remote mock servers that simulate API behavior with a high degree of fidelity. They can replicate:

- Asynchronous behavior: Simulate event-driven systems by configuring delayed responses, webhook callbacks, or message queue behavior.

- Version-specific contracts: Run parallel tests against multiple versions of an API to verify backward compatibility.

- Controlled failures: Inject errors like 500s, malformed payloads, or slow responses to test your clients' resiliency and fallback logic.
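To show the core idea behind these tools, the sketch below stands up a tiny virtual API on a random local port using only Python’s standard library (`http.server`). The routes are hypothetical: one healthy endpoint, one injected 500, and one truncated JSON body. Dedicated service-virtualization tools add recording, latency injection, and stateful behavior on top of this idea.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical routes: path -> (status code, raw response body)
ROUTES = {
    "/v1/users/123": (200, json.dumps({"user_id": 123, "status": "active"}).encode()),
    "/v1/payments": (500, json.dumps({"error": "injected failure"}).encode()),
    "/v1/orders": (200, b'{"order_id": 7,'),  # deliberately truncated JSON
}


class VirtualAPIHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, body = ROUTES.get(self.path, (404, b'{"error": "not found"}'))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence per-request logging during tests


def start_virtual_api() -> HTTPServer:
    """Serve the routes on a free local port from a background thread."""
    server = HTTPServer(("127.0.0.1", 0), VirtualAPIHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Bypass any proxy settings so requests go straight to localhost
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def fetch(url: str):
    """Return (status, body bytes), treating HTTP error statuses as normal results."""
    try:
        with _OPENER.open(url, timeout=5) as response:
            return response.status, response.read()
    except urllib.error.HTTPError as err:
        return err.code, err.read()
```

If you point the client under test at `http://127.0.0.1:{server.server_port}` instead of the real base URL, you can assert that it surfaces the 500 cleanly and rejects the malformed body.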


Validate authentication and authorization mechanisms

Authentication logic often involves multiple layers: session tokens, API keys, OAuth flows, or custom token validation. Make sure to test both successful and failed login attempts, token expiration, and invalid credentials. Tests should:

- Validate successful logins using valid credentials

- Simulate invalid credentials, token expiration, and missing tokens

- Assert correct HTTP status codes (401, 403) and response messages

- Detect unexpected behavior in refresh token or reauthentication flows

The following script uses pytest and requests to check that a protected endpoint rejects expired, invalid, empty, and missing tokens:

```python
import pytest
import requests

API_URL = "https://api.example.com/protected"


@pytest.mark.parametrize("token", [
    "expired_token_value",  # placeholder: substitute a genuinely expired token
    "invalid_token",
    "",                     # empty bearer token
    None,                   # no Authorization header at all
])
def test_authentication_failure(token):
    headers = {} if token is None else {"Authorization": f"Bearer {token}"}
    response = requests.get(API_URL, headers=headers)
    # 401 is the standard status for missing or invalid credentials; some APIs
    # return 403, so tighten this once your API behaves consistently
    assert response.status_code in (401, 403), f"Unexpected status {response.status_code}"
```

APIs must also enforce strict authorization boundaries and robust input validation. Use role-based test accounts to attempt privilege escalation (e.g., regular users accessing admin routes), submit malformed data to verify schema enforcement, and check for data leakage by requesting other users’ resources. You can also inspect rate-limit response headers such as Retry-After and X-RateLimit-Remaining to verify throttling logic.
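Privilege-escalation checks fit naturally into a table of roles, routes, and expected status codes. In this sketch, the roles, paths, and expected codes are hypothetical. `call` is assumed to send the request as that role’s test account and return the HTTP status code.

```python
from typing import Callable, List

# Hypothetical roles, routes, and expected status codes
ACCESS_MATRIX = [
    ("admin", "GET", "/admin/users", 200),
    ("member", "GET", "/admin/users", 403),          # privilege escalation attempt
    ("member", "GET", "/users/me", 200),
    ("member", "GET", "/users/another-user", 403),   # another user's data; some APIs use 404
    ("anonymous", "GET", "/users/me", 401),
]


def check_access(call: Callable[[str, str, str], int]) -> List[str]:
    """Send every (role, method, path) request and report unexpected status codes."""
    mismatches = []
    for role, method, path, expected in ACCESS_MATRIX:
        actual = call(role, method, path)
        if actual != expected:
            mismatches.append(f"{role} {method} {path}: expected {expected}, got {actual}")
    return mismatches
```

Covering a new route then takes only a new row. Each mismatch names exactly which role reached which resource.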

Simulate complex integration workflows

Modern APIs often coordinate multiple services (e.g., billing, messaging, audit logging, notifications). A failure in one step can leave downstream services in an inconsistent state.

To guard against this, integration tests must simulate realistic, multi-step flows across services and assert both expected outputs and state transitions. These flows should replicate asynchronous interactions, idempotent retries, and potential partial failures.
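The sketch below shows the kinds of assertions such a flow needs. A retried request must not create a duplicate, and state must move only through valid transitions. It uses a hypothetical in-memory `FakeBillingService` so it runs anywhere. In a real integration test, the same assertions would run against deployed services in a test environment, with the idempotency key sent however your API expects it.

```python
import uuid


class FakeBillingService:
    """In-memory stand-in for a hypothetical billing API that honors idempotency keys."""

    def __init__(self):
        self.charges = {}   # charge id -> charge record
        self._by_key = {}   # idempotency key -> charge id

    def create_charge(self, amount_cents: int, idempotency_key: str) -> dict:
        # A repeated key returns the original charge instead of creating a duplicate
        if idempotency_key in self._by_key:
            return self.charges[self._by_key[idempotency_key]]
        charge = {"id": str(uuid.uuid4()), "amount_cents": amount_cents, "state": "pending"}
        self.charges[charge["id"]] = charge
        self._by_key[idempotency_key] = charge["id"]
        return charge

    def capture(self, charge_id: str) -> dict:
        charge = self.charges[charge_id]
        if charge["state"] != "pending":
            raise ValueError(f"cannot capture a charge in state {charge['state']!r}")
        charge["state"] = "captured"
        return charge


def test_checkout_flow_survives_client_retry():
    billing = FakeBillingService()
    first = billing.create_charge(1999, idempotency_key="order-42")
    # Simulate the client retrying after a timeout with the same key
    retried = billing.create_charge(1999, idempotency_key="order-42")
    assert retried["id"] == first["id"], "Retry must not create a second charge"
    assert len(billing.charges) == 1

    billing.capture(first["id"])
    assert billing.charges[first["id"]]["state"] == "captured"
    # Capturing twice is an invalid transition the service should reject
    try:
        billing.capture(first["id"])
    except ValueError:
        pass
    else:
        raise AssertionError("Double capture should be rejected")
```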

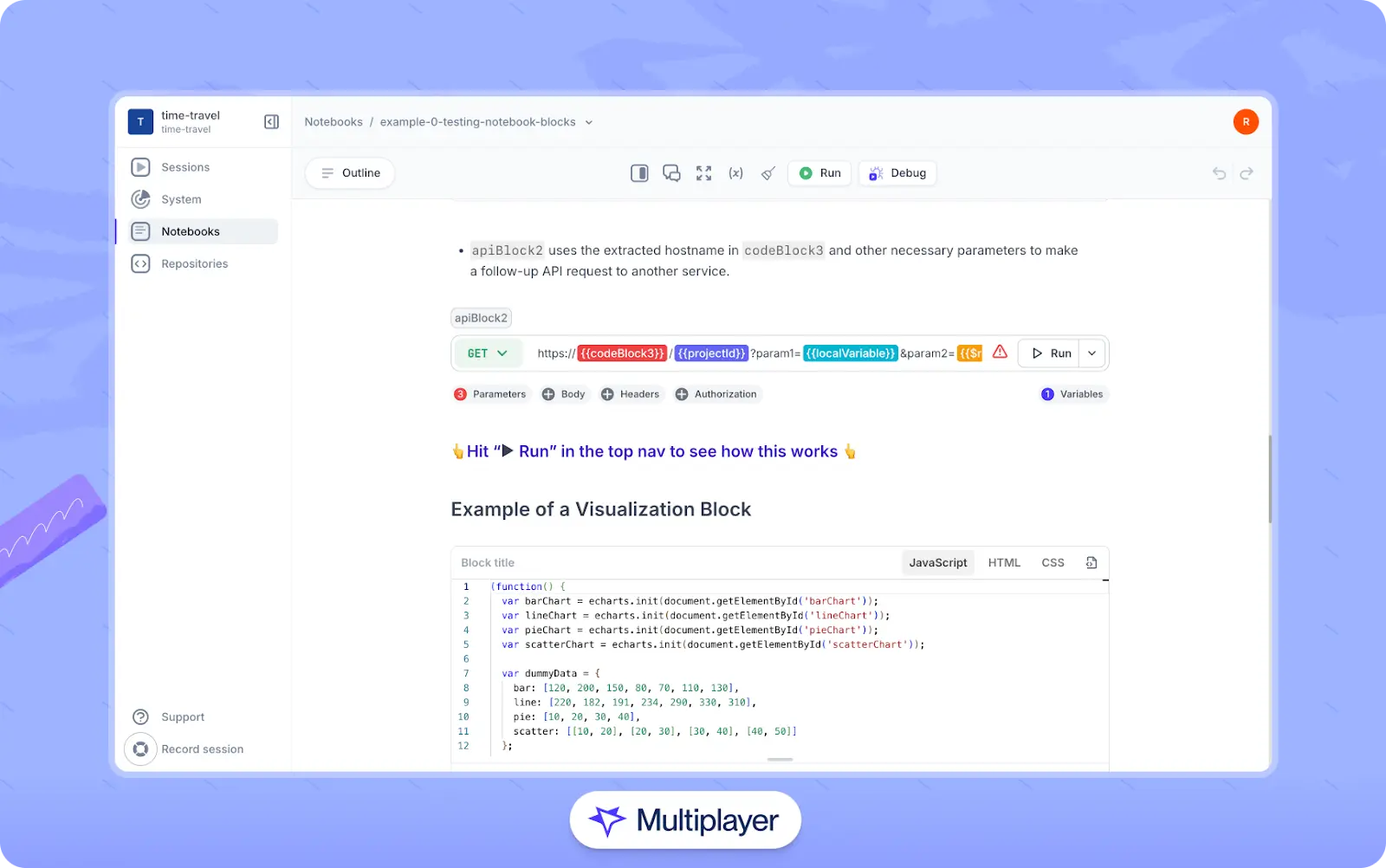

Tools like Multiplayer’s notebooks allow you to orchestrate these scenarios interactively by chaining requests and visualizing intermediate states. In addition, notebooks help you document the entire integration path and version test sequences, making them reproducible across environments and useful during incident retrospectives.

Multiplayer Notebooks

Use data-driven tests with clean parameterization

Data-driven testing allows you to cover a broad range of edge cases, locales, API versions, and user types while keeping test logic minimal and reusable. Instead of baking values directly into test cases, define fixtures or use factories that generate structured inputs. Here’s a basic example using Python's pytest framework with parametrize:

```python
import pytest
import requests


@pytest.mark.parametrize("payload, expected_status", [
    ({"email": "valid@example.com", "password": "secure123"}, 200),
    ({"email": "", "password": "secure123"}, 400),  # Missing email
    ({"email": "user@example.com", "password": ""}, 400),  # Missing password
    ({"email": "invalid_email_format", "password": "pass"}, 422),
])
def test_user_login(payload, expected_status):
    response = requests.post("https://api.example.com/login", json=payload)
    assert response.status_code == expected_status
```

This simple pattern drives multiple assertions off one reusable logic block. Instead of duplicating test_user_login_* scenarios, you cover them systematically with clean inputs.
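Factories, mentioned earlier as an alternative to hardcoded values, can be as simple as a function that returns a valid baseline payload and applies per-case overrides. The baseline values and the convention of passing `None` to drop a field are assumptions for this sketch.

```python
import copy

# Hypothetical baseline: every case starts from a payload the API should accept
BASE_LOGIN_PAYLOAD = {"email": "valid@example.com", "password": "secure123"}


def make_login_payload(**overrides):
    """Return a fresh valid payload with fields overridden, or removed when set to None."""
    payload = copy.deepcopy(BASE_LOGIN_PAYLOAD)
    for key, value in overrides.items():
        if value is None:
            payload.pop(key, None)
        else:
            payload[key] = value
    return payload
```

A parameter list can then contain entries like `(make_login_payload(email=""), 400)` or `(make_login_payload(password=None), 400)`. Each case states only what differs from a valid request.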

For more complex scenarios, such as validating regional formats, localization, or API version drift, consider loading structured JSON or YAML fixtures and looping over them in tests:

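```python
import json
from pathlib import Path

import pytest
import requests

# Resolve the fixture relative to this test file (assumed to live in tests/), so the
# suite works from any working directory. Each case is an object such as:
# {"endpoint": "https://api.example.com/v1/users", "payload": {...}, "expected": 201}
DATA_FILE = Path(__file__).parent / "data" / "edge_cases.json"
test_cases = json.loads(DATA_FILE.read_text())


@pytest.mark.parametrize("case", test_cases)
def test_api_behavior(case):
    response = requests.post(case["endpoint"], json=case["payload"])
    assert response.status_code == case["expected"], f"Case failed: {case}"
```

Parameterization improves test coverage and maintainability. Combined with mocks and integration hooks, it lets you simulate unpredictable real-world behavior under controlled, repeatable conditions.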

Monitor, debug, and evolve the test suite

It is common practice to integrate automated testing into CI/CD pipelines. However, doing so without proper oversight can lead to technical debt. As test suites grow in size and complexity, test infrastructure should be instrumented with runtime metrics, failure diagnostics, and stability trends. To do this effectively, implement the following techniques:

- Identify obsolete tests: Regularly audit test results and failure logs to find tests that always pass, always fail, or cover logic that no longer exists in the system.

- Tag and group tests for selective execution: As your suite grows, it becomes inefficient to run every test on every code change. Use tagging (e.g., @smoke, @auth, @payment) to group tests by function and enable targeted runs.

- Track test runtime and CI feedback latency: One of the most common anti-patterns in growing systems is a test suite that quietly slows down CI/CD pipelines. Monitor test execution time on every run; when runtimes exceed 30–45 minutes, that’s a strong signal to split tests across parallel jobs or evaluate test dependencies and slow fixtures.
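pytest can print the slowest tests with `--durations=N` and write a JUnit XML report with `--junitxml=PATH`. The sketch below reads such a report so that a CI step could track runtime over time or flag a suite that exceeds its budget. The report structure matches what pytest typically emits (`testcase` elements with `classname`, `name`, and `time` attributes), but verify it against your own reports. Summed test durations can also differ from wall-clock time when tests run in parallel.

```python
import xml.etree.ElementTree as ET


def slowest_tests(junit_xml: str, limit: int = 5) -> list:
    """Return (test id, seconds) pairs for the slowest test cases in a JUnit XML report."""
    root = ET.fromstring(junit_xml)
    timings = [
        (f"{case.get('classname')}::{case.get('name')}", float(case.get("time", "0")))
        for case in root.iter("testcase")
    ]
    timings.sort(key=lambda item: item[1], reverse=True)
    return timings[:limit]


def total_runtime(junit_xml: str) -> float:
    """Sum the recorded duration of every test case, in seconds."""
    return sum(float(case.get("time", "0")) for case in ET.fromstring(junit_xml).iter("testcase"))
```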

It is also important to tag flaky tests in CI pipelines using tools like GitHub Actions and escalate them whenever necessary. Record test stability metrics—like failure frequency, duration, and affected environments—and establish a “quarantine” process in which unstable tests are moved to a separate suite until they’re fixed.

For example, to implement basic flakiness tracking in Python with pytest, you can use a plugin hook to log results across test runs:

```python
# conftest.py
import json
import logging
from datetime import datetime, timezone
from uuid import uuid4

import pytest

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("flaky_tests")


def pytest_addoption(parser):
    # Register the options read below; getoption() raises for unknown options
    parser.addoption("--env", default="unknown", help="Label for the target environment")
    parser.addoption("--ci", action="store_true", help="Append failures to flaky_tests.json")


@pytest.hookimpl(hookwrapper=True)
def pytest_runtest_makereport(item, call):
    """Log test failures with structured metadata so flaky tests can be spotted across runs."""
    outcome = yield
    result = outcome.get_result()

    # Only record failures in the test body itself, not in setup or teardown
    if result.when == "call" and result.failed:
        failure_details = {
            "failure_id": str(uuid4()),  # unique ID for this failure event
            "nodeid": item.nodeid,
            "environment": item.config.getoption("--env"),
            "failure_time": datetime.now(timezone.utc).isoformat(),
            "exception": str(call.excinfo.value) if call.excinfo else "Unknown",
        }
        logger.info(json.dumps({"event": "test_failure", "data": failure_details}))

        if item.config.getoption("--ci"):
            # One JSON object per line, so CI tooling can append and parse incrementally
            with open("flaky_tests.json", "a") as f:
                f.write(json.dumps(failure_details) + "\n")
```

This example is tailored for API testing with environment context and observability in mind. It captures a unique failure ID, the test’s node ID, the environment, the failure time, and the exception message. When the --ci flag is set, it appends each failure as one JSON line to flaky_tests.json. CI pipelines (e.g., GitHub Actions) can pick up this file to track trends, raise alerts, or trigger quarantine workflows.
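A CI step can turn that log into quarantine candidates by counting failures per test. This minimal sketch assumes the JSON Lines format written by the hook above, and the threshold of three failures is arbitrary. The hook records only failures, so a high count alone cannot distinguish a flaky test from a consistently broken one. Check pass history, for example from JUnit reports, before quarantining anything.

```python
import json
from collections import Counter
from typing import Iterable, List


def quarantine_candidates(log_lines: Iterable[str], min_failures: int = 3) -> List[str]:
    """Return node IDs that failed at least `min_failures` times in a JSON Lines failure log."""
    failures = Counter()
    for line in log_lines:
        line = line.strip()
        if line:  # skip blank lines
            failures[json.loads(line)["nodeid"]] += 1
    return sorted(node for node, count in failures.items() if count >= min_failures)
```

In CI, this could run as `quarantine_candidates(open("flaky_tests.json"))` after the test job, with the result posted to a ticket or used to apply a quarantine marker.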

Capture and document bugs

Failing API tests should do more than flag regressions. They should provide enough context to support efficient debugging. Effective failure reports include request/response data, environment metadata, and correlation data (such as trace or request IDs) that help trace the issue across systems.
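As a minimal sketch of such a report, the function below bundles the request, response, and environment into one JSON document. It masks secret headers so the report can be attached to a ticket safely. The header list and the `X-Request-ID` correlation header are assumptions; use whatever your services actually emit.

```python
import json

# Header names treated as secrets (assumed list; extend it to match your API)
SENSITIVE_HEADERS = {"authorization", "cookie", "set-cookie", "x-api-key"}


def redact_headers(headers: dict) -> dict:
    """Mask secret header values so reports can be shared safely."""
    return {
        name: "[REDACTED]" if name.lower() in SENSITIVE_HEADERS else value
        for name, value in headers.items()
    }


def header_value(headers: dict, name: str):
    """Case-insensitive header lookup; returns None when the header is absent."""
    for key, value in headers.items():
        if key.lower() == name.lower():
            return value
    return None


def build_failure_report(request: dict, response: dict, environment: dict) -> str:
    """Bundle request, response, and environment context into one JSON document."""
    report = {
        "request": {**request, "headers": redact_headers(request.get("headers", {}))},
        "response": {**response, "headers": redact_headers(response.get("headers", {}))},
        "environment": environment,
        # Assumes the API echoes a correlation header; the name varies between systems
        "correlation_id": header_value(response.get("headers", {}), "X-Request-ID"),
    }
    return json.dumps(report, indent=2, sort_keys=True)
```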

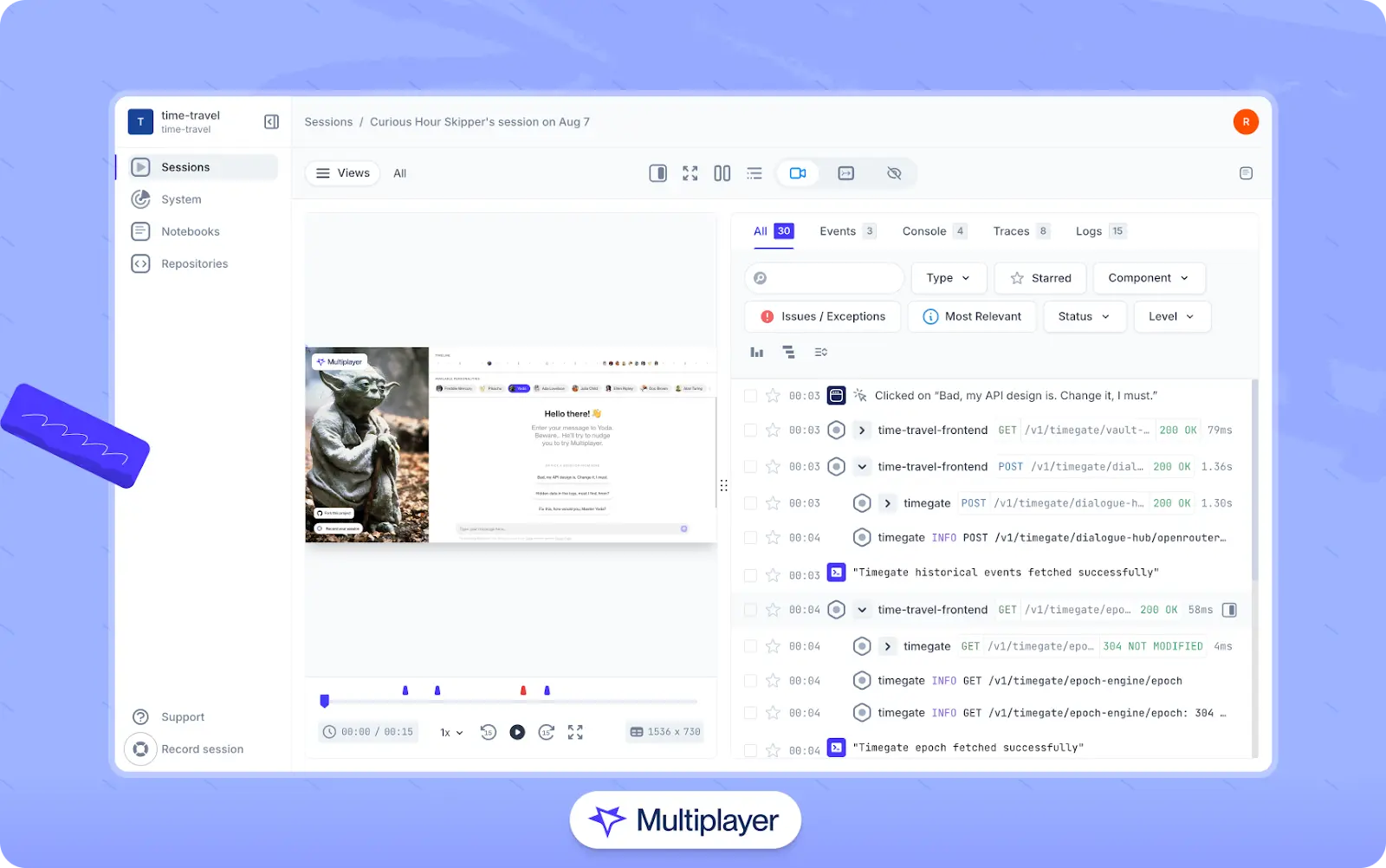

Once an automated test run catches a failure, tools like Multiplayer’s full-stack session recordings can help developers investigate it. These recordings capture headers, payloads, traces, metrics, and logs, which lets teams replay flaky behavior and understand its impact across service boundaries.

Debugging using session replays of the Multiplayer full-stack session recordings

The full-stack session recordings also integrate with notebooks to document test failures with supporting telemetry, exploratory steps, and annotations. Notebooks are executable and versioned, making them useful for creating reproducible bug reports within a shared, traceable context.

Last thoughts

Effective API testing in modern distributed systems means building isolated, assertive, and resilient tests. It also means using tools that provide visibility into how your APIs behave in production.

This article provided a practical roadmap for building a reliable API testing strategy in distributed systems. It included methods to mitigate common challenges like infrastructure flakiness, unpredictable data, and evolving API contracts. It also explored different types of API testing with clear, actionable best practices such as prioritizing high-value endpoints, isolating tests, mocking dependencies, validating security flows, and maintaining test data hygiene.

Multiplayer’s notebooks and full-stack session recordings bring these capabilities together. From test authoring and live validation to debugging flaky test cases and complex workflows, they offer the shared context teams need to move fast without sacrificing quality. You get deterministic test runs, reusable workflows, and centralized insights, all integrated with the systems you already use.

By combining automation with visibility and deep system understanding, you can replace reactive debugging with proactive quality engineering, so you can ship more quickly and safely in every environment.