
AI Coding Tools: Reviews & Best Practices


AI coding tools are reshaping how developers write and maintain software. What began as autocomplete has matured into assistants that generate functions, refactor projects, and even act as lightweight "agents" across codebases.

Adoption has been rapid. GitHub Copilot has millions of users, while newer entrants, such as Cursor, Claude Code, and OpenAI Codex, are gaining traction because of capabilities like deeper multi-file reasoning and custom workflows. For many teams, these assistants have become everyday companions, reducing boilerplate and accelerating routine development.

This guide compares today's most popular AI coding tools, highlights their strengths and limitations, and demonstrates how to extend them with runtime context for more reliable debugging and feature development.

Summary of top AI coding tools

The table below summarizes the AI coding tools we will explore in this article. After taking a closer look at each, we will discuss practical tips for engineers looking to integrate AI tools into their workflows.

| AI coding tool | Summary |
|---|---|
| GitHub Copilot | Seamless IDE integration and polished completions, best for inline code but weaker in cross-file reasoning. |
| Cursor | A feature-rich AI IDE with agents and custom rules. It offers strong multi-file support but has a steeper learning curve. |
| Claude Code | A CLI-based assistant well-suited for analyzing entire projects and large logs. It is ideal for deep debugging but less intuitive than IDE-based tools. |
| OpenAI Codex | A versatile assistant spanning IDE, CLI, and cloud modes, offering multi-file reasoning and automation. |

GitHub Copilot

GitHub Copilot, introduced in 2021 by GitHub and OpenAI, was the first widely adopted AI coding assistant. What began as a simple autocomplete plugin has grown into a suite of AI capabilities available across major editors, including VS Code, JetBrains IDEs, Neovim, and Visual Studio.

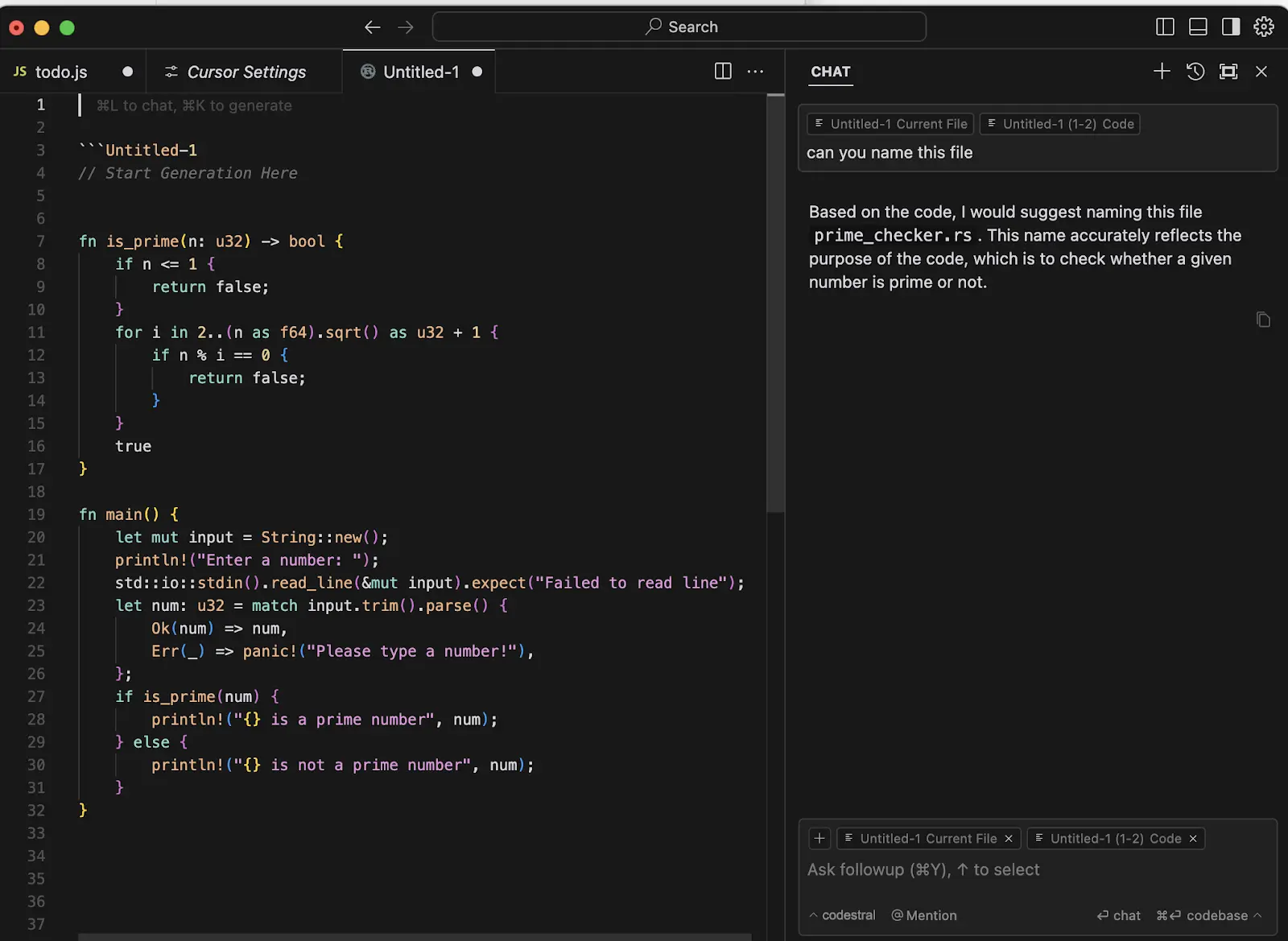

GitHub Copilot in VS Code

Today, Copilot supports three distinct modes of interaction. The first is the original inline completion, where it predicts your next line or even an entire function based on the surrounding context. This remains its core strength: type a comment like "// parse CSV into object," and Copilot will often draft a working implementation instantly. Additionally, Copilot Chat provides a sidebar for explaining code, answering "why" questions, or suggesting test cases. The newest addition (as of Q3 2025), agent mode, is more autonomous. Agent mode can carry out multi-step edits across multiple files, run tests, and apply refactors with developer oversight.
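To illustrate the comment-driven workflow, here is the kind of implementation an assistant might draft from a comment like the one above, written in Python. It is a hand-written sketch, not captured Copilot output; the function name `parse_csv` and the use of the standard library's `csv.DictReader` are our assumptions.

```python
import csv
import io


# parse CSV into object
def parse_csv(text: str) -> list[dict[str, str]]:
    """Parse CSV text that has a header row into a list of dicts, one per data row."""
    reader = csv.DictReader(io.StringIO(text))
    return [dict(row) for row in reader]


rows = parse_csv("name,age\nAda,36\nLinus,28\n")
print(rows)  # [{'name': 'Ada', 'age': '36'}, {'name': 'Linus', 'age': '28'}]
```

Note that every value comes back as a string. Whether `age` should be converted to an integer is exactly the kind of detail a reviewer should check before accepting a suggestion.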

A notable advancement in late 2024 and 2025 is model switching, which lets developers choose between lighter, faster models for routine completions and more capable models (including options from OpenAI, Anthropic, and Google) for complex reasoning tasks. This flexibility enables Copilot to adapt to a wide range of workflows, from generating quick boilerplate to addressing project-wide changes.

Copilot excels in everyday development. It reduces the friction of repetitive tasks (such as generating unit test scaffolds, filling in boilerplate, and suggesting idiomatic API calls), freeing engineers to focus on higher-level logic. Because it is integrated into the IDE, you rarely leave your editor, and its wide adoption means it has been refined through feedback from a very large user base. Many teams treat Copilot as an "always-on pair programmer" that accelerates the basics without demanding new habits.

While this AI coding tool has many benefits, Copilot's limitations are equally important to understand. Despite recent upgrades, Copilot still struggles with true multi-file reasoning. Agent mode helps, but it can misfire on large or complex refactors. Like any LLM, it sometimes produces code that appears correct but fails at runtime, making human review and testing essential.

Overall, Copilot is best suited for developers and teams who want a low-friction, enterprise-ready assistant embedded in their existing tools. It won't design your system or debug intricate runtime issues, but for day-to-day productivity inside the IDE, it remains the most seamless and polished option available.

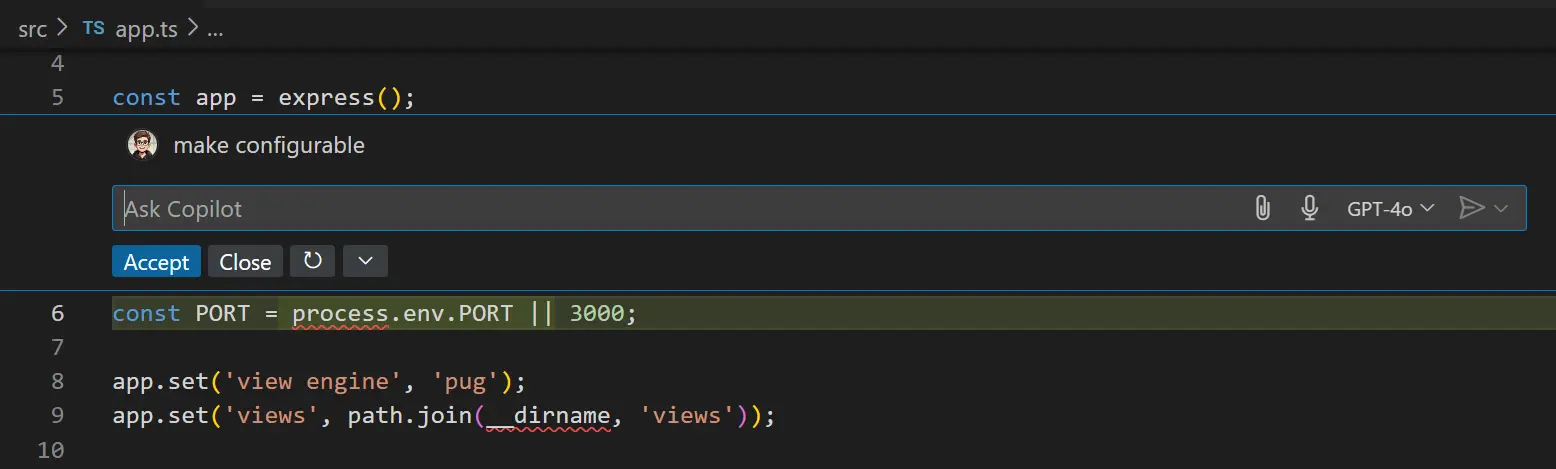

Cursor

Cursor is often described as the "power-user's AI IDE." Released in 2023 by the startup Anysphere, it originated as a fork of VS Code, with AI integrated into nearly every feature. While GitHub Copilot adds AI to an existing editor, Cursor reimagines the editor itself as an AI-native environment. The result is a tool that feels more experimental and ambitious, but also more demanding of the developer using it.

Cursor AI Code Editor

The centerpiece of Cursor is its ability to handle multi-file reasoning. Developers can select entire directories or repositories and include them in the AI's context, allowing it to answer questions or make changes across a large project rather than just within a single file. For example, you can ask Cursor to "add an authentication feature," and its agent mode will propose edits in multiple files, create new components, and even update configuration, all within the same workflow. It is capable of reading broadly, planning changes, and then carrying them out with your approval.

Cursor also supports background agents that run continuously in the editor. These agents can surface insights such as potential bugs, style issues, or refactoring opportunities without needing an explicit prompt. This makes Cursor feel more proactive, complementing its interactive agent mode with automated guidance.

Cursor also introduces customizability that appeals to advanced teams. It uses an AGENTS.md file and a dedicated rules directory to encode project-specific conventions or style guides, providing the AI with a clear understanding of how your team prefers to work. This level of fine-grained control makes it easier to maintain consistency between AI-generated code and human-written code. Combined with features like "Fix with AI" buttons, proactive bug-finding experiments, and integrated commit message generation, Cursor helps developers push automation further.

The tradeoff with this AI coding tool is complexity. Cursor's breadth of features means new users can feel overwhelmed at first. Compared to Copilot's low-friction setup, Cursor has a steeper learning curve: not just in commands, but also in understanding when to trust the agent and when to step in. It also tends to be heavier on resources: indexing large codebases for multi-file reasoning consumes more memory and CPU than a lightweight plugin. Rapid feature releases sometimes introduce rough edges, though many would argue that this is the price of innovation.

Despite those challenges, many experienced engineers find themselves returning to Cursor because of its reliability in large projects. Its suggestions are often more accurate than those of competitors when the context spans multiple files. For teams working on complex codebases, the investment pays off: once mastered, Cursor can deliver project-wide refactors, guided onboarding, and high-quality code suggestions that reduce manual toil.

If Copilot is the smooth, everyday companion, Cursor is the demanding but powerful tool that rewards those willing to take the time to learn it. It's best suited for engineers who want maximum control and are comfortable trading simplicity for depth.

Claude Code

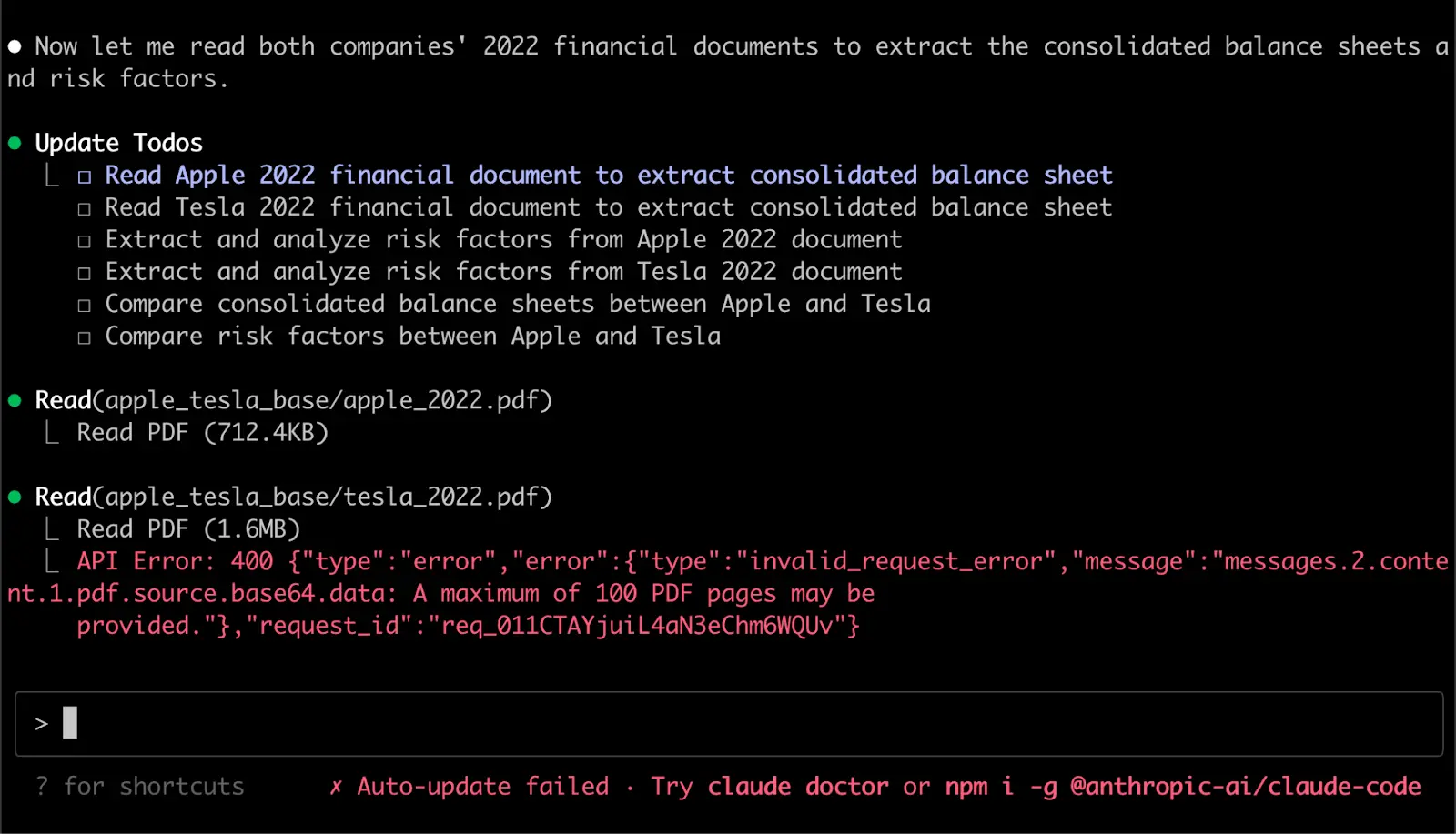

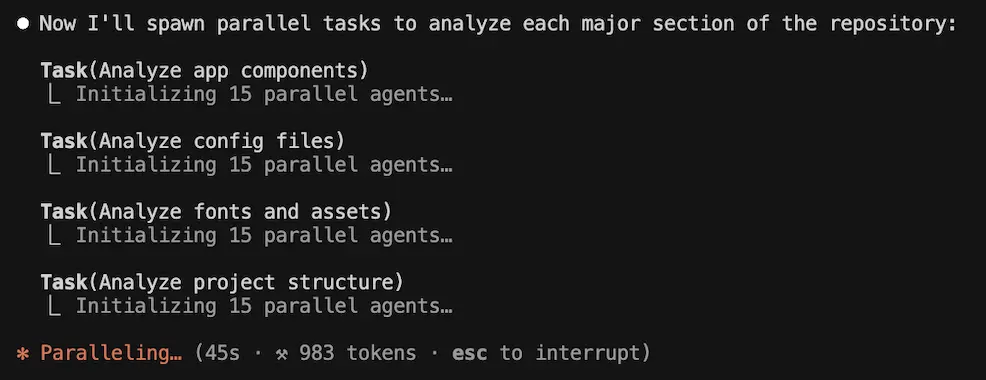

Developed by Anthropic, Claude Code is essentially a command-line assistant powered by the Claude family of large language models. What sets it apart is its terminal-based workflow, making it well-suited for analyzing entire repositories, massive log files, or long configuration scripts directly from the command line.

Claude Code displayed in a terminal's command-line interface (CLI).

Working with Claude Code feels more like collaborating with a knowledgeable colleague in your shell than using an autocomplete tool. You launch it in the terminal, then ask questions or assign tasks through conversational commands. Because it can open and read files, it is particularly useful for analyzing entire projects. For example, you might feed it a multi-thousand-line log and ask, "Where is the error happening and what's the likely cause?" It can highlight the relevant lines, connect them to source code, and even suggest a fix. Some teams also integrate it into CI pipelines to automate code reviews or generate structured comments on pull requests.

The strength of this model-driven, CLI-first approach is flexibility. Claude Code is not limited by the boundaries of an IDE window. It can read across services, scripts, and repositories, and because it runs in a shell, it can also execute commands with the necessary permissions. This makes it well-suited for tasks such as refactoring APIs across multiple microservices or running automated test suites as part of its reasoning loop. Its ability to juggle large contexts also makes it valuable for architectural exploration: you can ask it to explain how a subsystem fits together without manually grepping through dozens of files.

The tradeoff with this AI coding tool is usability. Without a graphical interface or inline autocomplete, Claude Code is less intuitive, especially for developers used to real-time suggestions. It requires more deliberate prompting and can feel slower for small tasks. The cost of running very large contexts is also higher: long sessions can quickly consume tokens, which is important for teams watching their budgets. Like Cursor's agent mode, Claude Code can also run shell commands, which means it requires cautious oversight to avoid unintended changes.
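To see why long sessions add up, a back-of-the-envelope estimate helps. The sketch below assumes roughly four characters per token (a common rule of thumb, not a real tokenizer), assumes the full context is resent on every turn, and uses a hypothetical price per million input tokens. Substitute your provider's actual tokenizer and pricing.

```python
# Rough estimate of the input-token cost of repeatedly sending a large context.
CHARS_PER_TOKEN = 4  # assumption: rule-of-thumb ratio, not an exact tokenizer


def estimate_tokens(num_chars: int) -> int:
    """Approximate a token count from a character count, rounding up."""
    return -(-num_chars // CHARS_PER_TOKEN)  # ceiling division on integers


def estimate_session_cost(context_chars: int, turns: int, usd_per_million_tokens: float) -> float:
    """Cost if the whole context is resent as input on every conversational turn."""
    tokens_per_turn = estimate_tokens(context_chars)
    return tokens_per_turn * turns * usd_per_million_tokens / 1_000_000


# A 400,000-character log resent over 10 turns at a hypothetical $3 per million input tokens.
cost = estimate_session_cost(context_chars=400_000, turns=10, usd_per_million_tokens=3.0)
print(f"${cost:.2f}")  # $3.00
```

Features such as prompt caching, where a provider offers them, can lower this considerably, so treat the result as an upper-bound illustration rather than a forecast.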

Claude Code is best suited for experienced engineers who live comfortably in the terminal and often deal with sprawling codebases or heavy debugging scenarios. Where Copilot accelerates the everyday and Cursor automates the IDE, Claude Code excels at deep reasoning across vast amounts of text and code. It is designed more for scale and precision than for convenience.

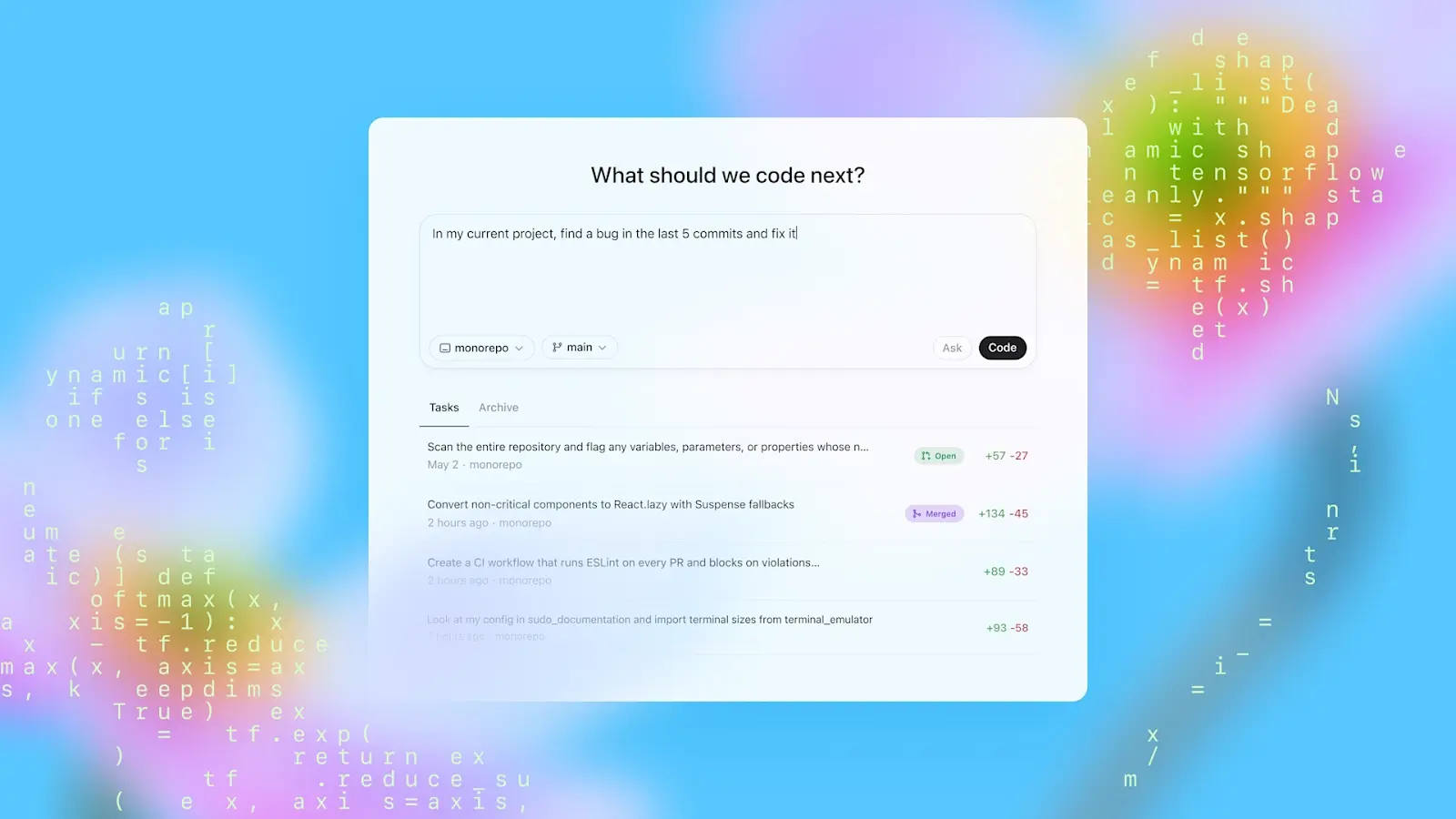

OpenAI Codex

OpenAI Codex has re-emerged as a full coding platform built on GPT-5-Codex, a model optimized specifically for software engineering. Once the engine behind GitHub Copilot, Codex is now positioned as a standalone assistant included with ChatGPT's paid plans. It turns natural-language prompts into working code, acting as a flexible pair programmer.

Codex spans three environments: an IDE extension (focused on VS Code and derivatives) for inline completions and multi-file edits, a CLI tool for terminal-first workflows, and a cloud agent that runs tasks in sandboxed containers. This tri-modal presence makes it one of the few assistants usable across editor, shell, and hosted automation contexts.

The OpenAI Codex user interface.

Its strengths lie in agentic workflows: Codex doesn't just suggest code, it can run builds, execute tests, review pull requests, and propose multi-file refactors. Developers can even tag Codex in GitHub issues or PRs, and it will analyze the repo, suggest fixes, or open a new PR automatically. This makes it feel less like autocomplete and more like a junior engineer who iterates on tasks.

Codex's main tradeoffs are cost, maturity, and breadth of integration. Official support is strongest for VS Code; JetBrains and other IDEs lag behind. Long tasks may hit usage limits, and results still require careful review. Still, Codex offers a unique balance of automation, flexibility, and integration, making it a compelling option for teams that want both everyday completions and deeper agent-driven assistance.


Where AI coding tools fall short

AI coding assistants can dramatically speed up routine development, but they are not silver bullets. Their weaknesses become most apparent when projects transition from static code to real-time runtime behavior and architectural decision-making. Understanding these gaps helps teams determine when to leverage AI and when to rely on traditional software engineering practices. Let's take a look at a few of the shortcomings of AI coding tools.

Limited runtime visibility

AI assistants generally reason from static code and lack native runtime observability. Some can integrate with CLI tools (e.g., gcloud, aws) to fetch logs or system state, but these remain workarounds rather than built-in runtime awareness. If a bug emerges only under specific input conditions or concurrency states, the AI often proposes generic fixes rather than identifying the true cause. Developers should still depend on debuggers, profilers, and observability tools for runtime insight.

Susceptible to hallucinations

Even polished assistants can suggest code that compiles but fails in practice. While modern agents can mitigate this by running tests or executing code locally, hallucinations are still possible, making human review and testing essential before merging AI-generated code.
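A classic case of code that looks correct but misbehaves is Python's mutable default argument. The first function below is a hypothetical assistant suggestion, not output from any specific tool. A quick test exposes a bug that a read-through can easily miss.

```python
# Hypothetical assistant suggestion: looks fine, but the default list is created
# once, when the function is defined, and is then shared by every call.
def add_tag_buggy(tag, tags=[]):
    tags.append(tag)
    return tags


# Reviewed version: use None as a sentinel and create a fresh list per call.
def add_tag(tag, tags=None):
    if tags is None:
        tags = []
    tags.append(tag)
    return tags


# Both calls in each print return the list they appended to.
print(add_tag_buggy("a"), add_tag_buggy("b"))  # ['a', 'b'] ['a', 'b']
print(add_tag("a"), add_tag("b"))              # ['a'] ['b']
```

The buggy version passes a single-call test, which is why tests that exercise repeated calls (or a linter rule for mutable defaults) are worth having before merging.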

Narrow debugging context

When debugging, developers triangulate across logs, traces, metrics, and history. AI tools have traditionally seen only the immediate code snippet or file, missing correlations across services or layers. This is beginning to improve as assistants integrate with observability tools and runtime data, but the gap remains significant today, making them less effective at diagnosing complex distributed or performance-related issues.

Performance blind spots

AI-generated code is often not optimized for runtime efficiency. Working from static code, assistants cannot reliably evaluate memory usage, concurrency behavior, or scaling bottlenecks. Engineers must still measure and optimize performance using traditional profiling and monitoring tools.
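Measurement is usually the first step. The sketch below uses Python's standard `timeit` module to compare a plausible first-draft deduplication routine, which checks membership in a list (quadratic overall), against a set-based version. The function names and data sizes are illustrative assumptions, not taken from any particular assistant.

```python
import timeit


def dedupe_list(records):
    """Plausible first draft: each membership check scans the whole list."""
    seen = []
    out = []
    for r in records:
        if r not in seen:
            seen.append(r)
            out.append(r)
    return out


def dedupe_set(records):
    """Same output and order, but set membership checks are O(1) on average."""
    seen = set()
    out = []
    for r in records:
        if r not in seen:
            seen.add(r)
            out.append(r)
    return out


records = list(range(1_000)) * 2
assert dedupe_list(records) == dedupe_set(records)  # an optimization must not change behavior

for fn in (dedupe_list, dedupe_set):
    seconds = timeit.timeit(lambda: fn(records), number=5)
    print(f"{fn.__name__}: {seconds:.4f}s for 5 runs")
```

For whole-program hotspots, the standard library's `cProfile` module reports per-function timings. Either way, the numbers come from running the code, which is precisely what a static suggestion cannot supply.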

Weak at system design

These assistants are strong within an existing code structure. With integrations (e.g., via the Model Context Protocol, or MCP), AI tools can ingest Jira tickets, product requirements documents (PRDs), and other docs to ground their suggestions. However, they still lack the tacit context and tradeoff judgment that architects apply: SLO/latency and cost budgets, compliance nuances, vendor/infrastructure constraints, team topology, and long-term maintainability.

In short, AI tools accelerate coding and can assist with design exploration. However, high-stakes architectural and system design decisions still require human judgment.

Practical tips for engineers working with AI coding tools

As we have seen, AI coding tools can accelerate work, but they need guidance and oversight. Let's explore some practices that help engineers get the most out of AI while avoiding common pitfalls.

Ensure high-quality inputs

Garbage in, garbage out. If you paste a vague error message, the assistant will produce vague advice. Supplying a clean stack trace, relevant log snippet, and a description of the environment often transforms the AI's answer from generic guesswork into a targeted solution.
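One way to keep inputs clean is to send the assistant only the relevant slice of a log rather than the whole file. The sketch below keeps lines that match an error pattern plus a few lines of surrounding context. The pattern, the function name, and the sample log are assumptions for illustration; adapt them to your own log format.

```python
import re

# Assumption: error lines contain one of these markers; adjust for your log format.
ERROR_PATTERN = re.compile(r"ERROR|Traceback|Exception")


def extract_error_context(log_text: str, context: int = 2) -> str:
    """Return the lines matching ERROR_PATTERN plus `context` lines on either side."""
    lines = log_text.splitlines()
    keep = set()
    for i, line in enumerate(lines):
        if ERROR_PATTERN.search(line):
            keep.update(range(max(0, i - context), min(len(lines), i + context + 1)))
    return "\n".join(lines[i] for i in sorted(keep))


# Made-up sample log for illustration.
log = "\n".join([
    "INFO starting server",
    "INFO GET /health 200",
    "INFO POST /login",
    "ERROR auth: token signature invalid",
    "INFO retrying",
    "INFO GET /health 200",
    "INFO GET /health 200",
])
print(extract_error_context(log, context=1))  # the POST /login, ERROR, and retrying lines
```

Pair the excerpt with the full stack trace and a short note on the environment (runtime version, deployment target) before pasting it into the assistant.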

Use effective prompts

A bad prompt like "Fix my app" leaves the model guessing. A better prompt is "Why is login failing with this stack trace?", followed by the trace itself.

Other concrete examples:

- Bad: "Make this faster."
  Good: "This function takes five seconds to process 1,000 records. Suggest optimizations or data structures to reduce runtime."

- Bad: "Add user profiles."
  Good: "We need a user profile page where users upload an avatar. Frontend is React, backend is Node.js. Suggest how to handle image upload (to S3) and display on the dashboard."

- Bad: "Fix my app."
  Good: "Here is a stack trace and the captured runtime logs for a failed session. Why is the login failing, and what is the likely cause?"

The more detail you include (e.g., error messages, inputs, or architecture), the more specific and useful the AI tool's answer becomes.
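If your team writes many debugging prompts, a small template can make sure the key details are never forgotten. The `DebugPrompt` helper below is hypothetical, not part of any tool. It assembles a question, error, inputs, and environment into labeled sections and skips any that are empty. The sample values are made up.

```python
from dataclasses import dataclass, field


@dataclass
class DebugPrompt:
    """Hypothetical helper that assembles the details a useful prompt should carry."""
    question: str
    error: str = ""
    inputs: str = ""
    environment: str = ""
    extra_context: list[str] = field(default_factory=list)

    def render(self) -> str:
        sections = [("Question", self.question), ("Error", self.error),
                    ("Inputs", self.inputs), ("Environment", self.environment)]
        sections += [("Context", c) for c in self.extra_context]
        # Skip empty sections so the model is not handed placeholder noise.
        return "\n\n".join(f"## {title}\n{body}" for title, body in sections if body)


prompt = DebugPrompt(
    question="Why is login failing, and what is the likely cause?",
    error="TypeError: Cannot read properties of undefined (reading 'token')",
    environment="React frontend, Node.js backend",
)
print(prompt.render())
```

The structure matters more than the exact headings: each section answers a question the model would otherwise have to guess at.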

Always review and test generated code

AI can generate code that compiles but hides subtle (or major) issues. For example, an early Copilot suggestion built an HTTP POST body by concatenating raw user input. This looked fine at a glance, but introduced a serious injection vulnerability. Without manual review, such insecure code could have shipped to production unnoticed. Treat AI output like a junior teammate's contribution: review, test, and validate before merging.
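The original suggestion is not reproduced here, but the same class of bug is easy to demonstrate. In this hypothetical Python sketch, a JSON request body built by string concatenation lets crafted input inject an extra field, while serializing with `json.dumps` keeps the input as plain data.

```python
import json

user_name = 'bob", "role": "admin'  # attacker-controlled input

# Unsafe: building the JSON body by concatenating raw input into a string.
unsafe_body = '{"name": "' + user_name + '"}'

# Safe: let the serializer escape the value.
safe_body = json.dumps({"name": user_name})

print(json.loads(unsafe_body))  # {'name': 'bob', 'role': 'admin'}
print(json.loads(safe_body))    # {'name': 'bob", "role": "admin'}
```

The unsafe version gains a `role` field the developer never intended, which is exactly the kind of flaw that survives a casual glance but not a careful review.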

Combine assistants in practice

Different tools shine in different roles. A practical workflow is to let Copilot handle inline completions while Claude Code analyzes multi-file traces or log dumps. Cursor can perform large-scale refactors, while Windsurf provides structured guidance for feature work.

Copilot suggesting code inline in VS Code.

Claude Code analyzing files in the terminal.

This combination covers both everyday productivity and deep software debugging, showing how assistants complement each other rather than compete.

Keep ownership of final code decisions

AI is a helper, not an autopilot. Engineers remain responsible for code quality, security, and maintainability. Treat AI-generated code as a draft: useful for speed, but always subject to your judgment. The best results come when you combine AI acceleration with human accountability.

Adding runtime context to enhance AI workflows

A final way to help AI coding assistants generate better suggestions is to provide them with contextual runtime data, such as logs, traces, sessions, and even frontend screens related to a given user session. Doing so can help in several scenarios:

Debugging

Imagine a login error that occurs only when a specific API call times out under heavy load. An AI assistant working only from source code might suggest checking for null values or adding retries. Without runtime data, however, it cannot determine that the root cause is a timeout in Service B. With runtime context, the assistant can see in the captured trace that Service B timed out when called by Service A. Now the suggestion becomes precise: handle the timeout in the API client and adjust the retry logic.
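A minimal sketch of that fix, assuming the client raises a distinct timeout error: retry only on timeouts, back off exponentially, and re-raise once retries are exhausted. `ServiceTimeout` and `call_with_retry` are hypothetical names; in a real client, the exception would be whatever timeout error your HTTP library raises. The `sleep` parameter is injectable so the example runs instantly.

```python
import time


class ServiceTimeout(Exception):
    """Hypothetical error raised when Service B does not respond in time."""


def call_with_retry(call, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call `call()`, retrying only on ServiceTimeout with exponential backoff.

    Other exceptions propagate immediately, so real bugs are not masked by retries.
    """
    for attempt in range(attempts):
        try:
            return call()
        except ServiceTimeout:
            if attempt == attempts - 1:
                raise  # out of retries: surface the timeout to the caller
            sleep(base_delay * 2 ** attempt)  # waits 0.1s, then 0.2s, ...


# Simulated Service B that times out twice under load, then succeeds.
responses = iter([ServiceTimeout(), ServiceTimeout(), {"status": "ok"}])


def flaky_service_b():
    result = next(responses)
    if isinstance(result, Exception):
        raise result
    return result


delays = []  # record the backoff delays instead of actually sleeping
result = call_with_retry(flaky_service_b, sleep=delays.append)
print(result, delays)  # {'status': 'ok'} [0.1, 0.2]
```

Under heavy load, aggressive retries can make a struggling service worse, which is why backoff (and often random jitter) matters as much as the retry itself.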

System understanding

Large systems evolve, and even senior developers may not know all the dependencies. Runtime data provides AI assistants with a map, showing which services called which, what inputs triggered the bug, and what downstream impacts followed. This makes explanations richer. Instead of saying "Function X failed," the assistant can explain, "Function X failed because Service Y changed its schema last week, which broke downstream parsing in Service Z."
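The "map" that runtime data provides can be derived from trace spans. Tracing systems such as OpenTelemetry record which span is the parent of which, so caller-to-callee service edges fall out of those links. The span records below are hypothetical and heavily simplified.

```python
from collections import defaultdict

# Hypothetical, simplified trace spans; real traces carry many more fields.
spans = [
    {"span_id": "1", "parent_id": None, "service": "frontend"},
    {"span_id": "2", "parent_id": "1", "service": "service-a"},
    {"span_id": "3", "parent_id": "2", "service": "service-b"},
    {"span_id": "4", "parent_id": "2", "service": "service-z"},
]


def service_call_map(spans):
    """Derive caller -> callee service edges from parent/child span links."""
    by_id = {s["span_id"]: s for s in spans}
    calls = defaultdict(set)
    for s in spans:
        parent = by_id.get(s["parent_id"])  # root spans have no parent
        if parent and parent["service"] != s["service"]:
            calls[parent["service"]].add(s["service"])
    return {caller: sorted(callees) for caller, callees in calls.items()}


print(service_call_map(spans))
# {'frontend': ['service-a'], 'service-a': ['service-b', 'service-z']}
```

Handing an assistant this kind of dependency summary, alongside the failing span, is what lets it explain a failure in terms of the services involved rather than a single function.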

Feature development

Runtime context is not only for bug fixing. Annotated screens and developer notes help AI assistants align with intent during feature work. For example, when adding a new profile page, captured user actions, along with design annotations, enable the AI to propose the backend endpoint, database schema, and frontend rendering in a single, coherent plan. Without those annotations, it might only scaffold generic code.

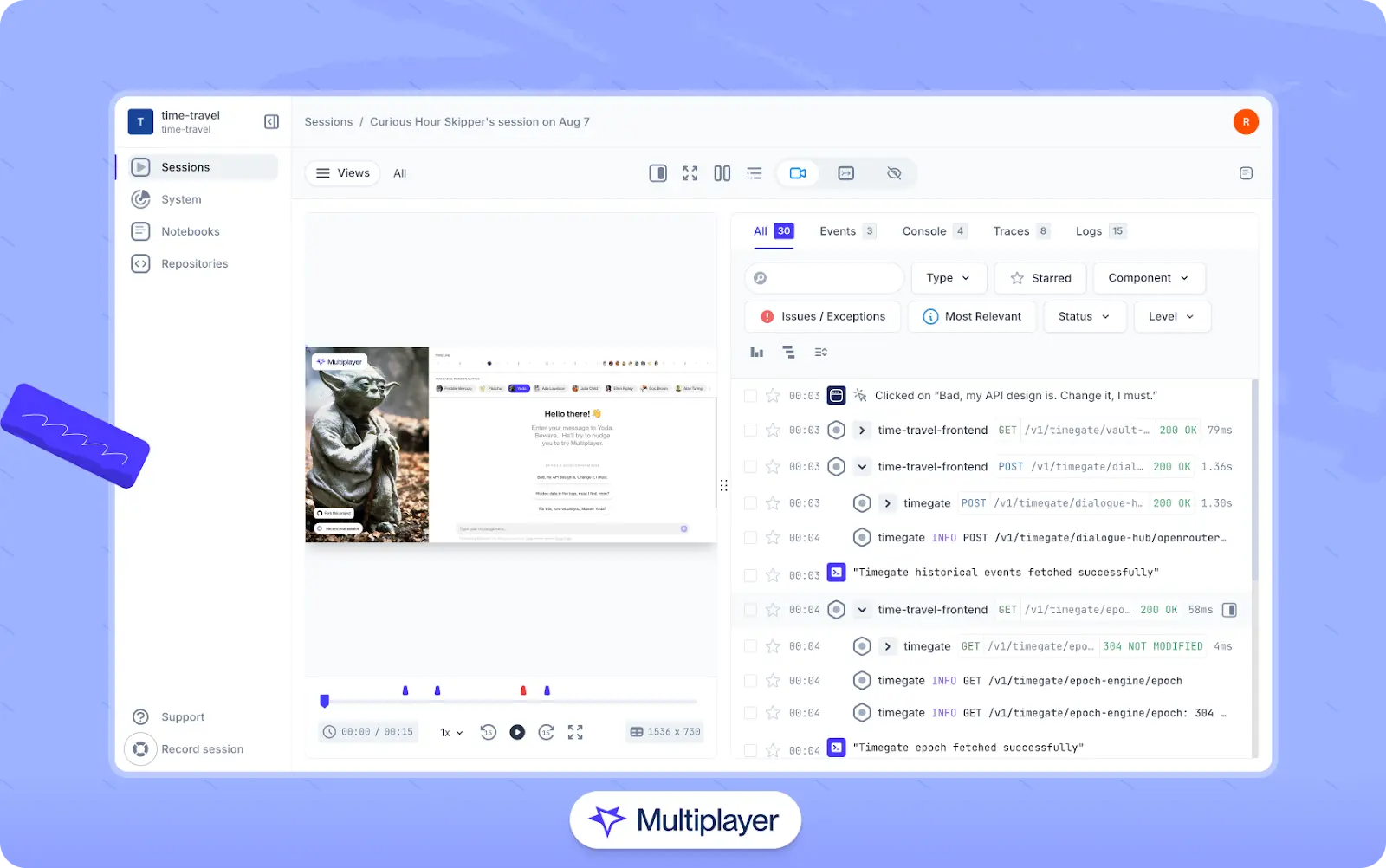

Capturing the data

While the benefits of providing AI assistants with this type of data can be substantial, the process of collecting the data itself can be tedious. Instrumenting multiple layers, correlating logs, and ensuring that engineers have access to all relevant data takes time. Without the right tools, it is easy for important signals to get buried.

To address these issues, a new generation of tools automatically collects data that is:

- Full stack: Data spans all layers, including frontend screens, request/response pairs, header content, and backend traces. An AI assistant that sees only one layer might guess wrong, but one with full-stack visibility is more likely to trace a bug from a UI button click all the way to the backend issue that triggered the error.

- Correlated: Instead of dumping thousands of logs, some tools can surface only the exact slice of runtime data tied to a given session. Feeding that targeted dataset to the AI keeps suggestions focused and avoids noise.
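The idea behind correlation can be sketched in a few lines: given events from several layers that share a session identifier, keep only one session and order its events by time. The records and field names below are made up for illustration. This is not how any particular product (including Multiplayer) implements it.

```python
# Hypothetical events from three layers, each tagged with a session ID.
events = [
    {"session": "s1", "ts": 3, "layer": "backend", "msg": "POST /login 500"},
    {"session": "s2", "ts": 1, "layer": "frontend", "msg": "click Save"},
    {"session": "s1", "ts": 1, "layer": "frontend", "msg": "click Log in"},
    {"session": "s1", "ts": 2, "layer": "network", "msg": "POST /login sent"},
]


def session_slice(events, session_id):
    """Keep only one session's events, ordered by timestamp across all layers."""
    return sorted((e for e in events if e["session"] == session_id), key=lambda e: e["ts"])


for e in session_slice(events, "s1"):
    print(e["ts"], e["layer"], e["msg"])
```

The resulting slice reads as a single story, from the click through the request to the server error, which is far easier for an assistant to reason about than an unfiltered dump.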

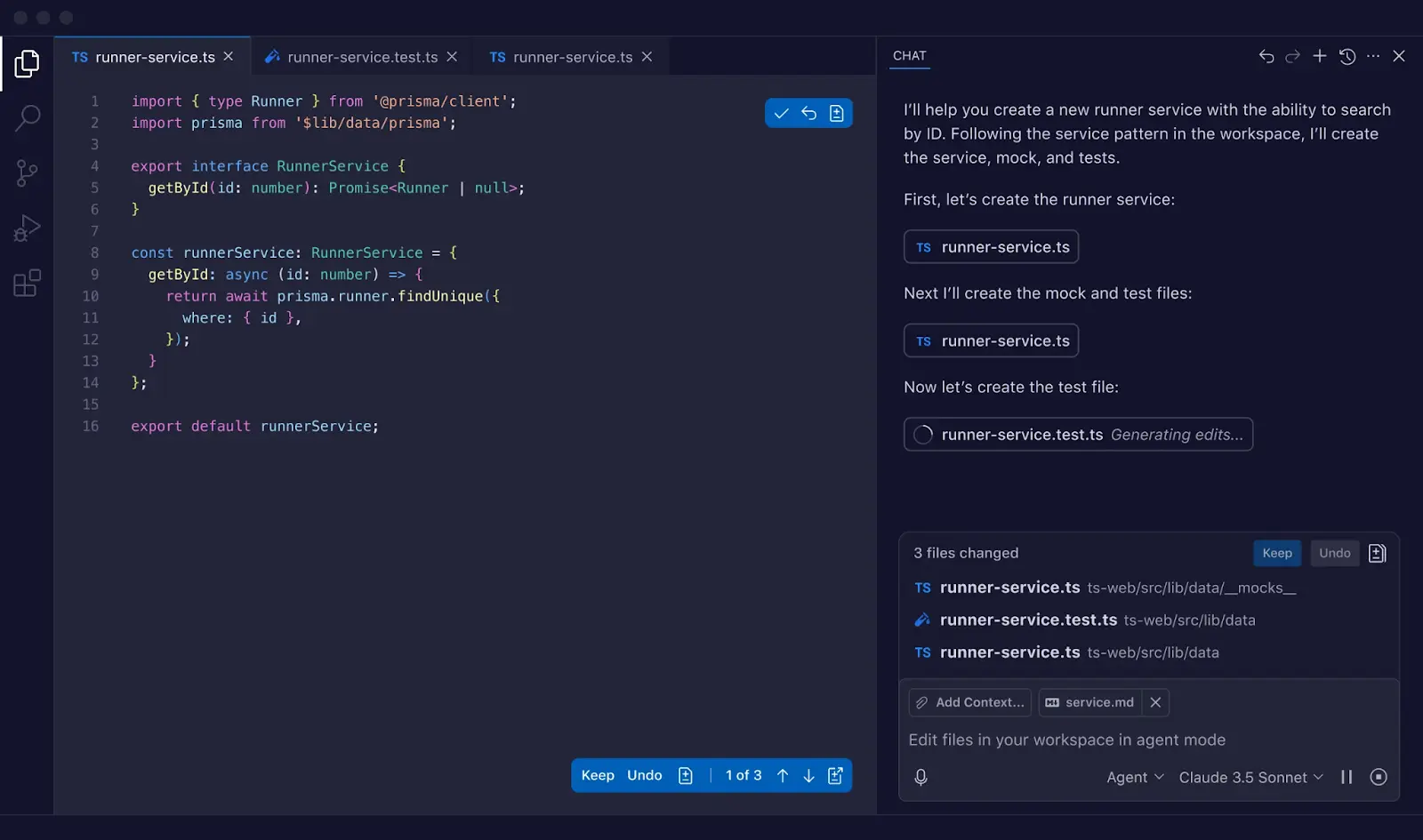

Tools like Multiplayer support these capabilities by capturing full-stack session recordings. Recordings can be captured via a browser extension, a JavaScript client library, or language-specific libraries for CLI applications, and they can be easily shared with team members for different use cases. They can also be enriched with developer annotations, sketches, or TODO notes so the AI understands design intent. This helps avoid "correct but misaligned" code that works technically but doesn't match the team's standards.

Multiplayer's full-stack session recordings

Conclusion

AI coding tools have already changed how many engineers work, accelerating repetitive tasks and amplifying productivity in everyday workflows. GitHub Copilot offers seamless IDE integration, Cursor enables powerful multi-file reasoning, Claude Code provides deep analysis from the command line, and OpenAI Codex bridges IDE, CLI, and cloud automation modes. Each brings something distinct to the table, and most teams benefit from combining multiple tools rather than relying on a single assistant.

Still, these tools have limits. They lack native runtime visibility, can produce subtle bugs or security flaws, and struggle with high-stakes architectural decisions. Addressing these gaps requires pairing AI suggestions with traditional debugging practices, rigorous testing, and human oversight. Providing AI tools with runtime context, such as session recordings, traces, and logs, can significantly improve their effectiveness, enabling them to diagnose real issues instead of generating generic fixes.

As AI coding tools continue to mature, expect deeper integrations with debugging platforms, observability systems, and other development tools. Teams that adopt thoughtfully—using AI to accelerate routine work while retaining responsibility for quality, security, and design—will see the most benefit. The most effective workflows combine AI acceleration with engineering judgment, using assistants as amplifiers rather than replacements.